PythonPro #10: ⛄5 Features in Django 5, Python 3.12.1 Enhancements, PyPI's 2FA, and Real World Deep Learning🎄

Bite-sized actionable content, practical tutorials, news, and expert insights for Python developers and data scientists

“I had a two-week Christmas holiday with nothing to do, and needed a project that I could do on my Mac without having to log into CWI's computers. That was when the first bits of the Python interpreter were written.”

— Interview with Guido van Rossum (1998)

Welcome to a brand new issue of PythonPro! What are your plans for this year’s Christmas break?

Before I get into today’s issue, I just want to let you know that we are taking a two week break for the holidays🎄🌟. We will be back with our first issue for 2024 on the 🔔 3rd of January🌠.

Highlights: Django 5 has introduced transformative features like streamlined form rendering, enhanced model fields, and a new GeneratedField type. Python 3.12.1, the first maintenance release, is here with over 400 bug fixes and a notable 5% performance increase. Also, 2FA will be mandatory for PyPI from January 2024.

We're wrapping up the year with a strong bunch of learning resources and here are my top 5 picks:

Building Robust Real-Time Data Pipelines With Python, Apache Kafka, and the Cloud☁️

Boosting Python development speed with Ruff: An all-in-one lightning fast linter🚀

Finally, in today’s Expert Insight section we have an exclusive excerpt from the book Python Deep Learning - Third Edition, that takes you through the diverse real-world applications of Deep Learning (DL) in various sectors like automotive safety, medical imaging, and digital assistants, and introduces the Keras library. So dive right in!

Thank you dear readers for making it to issue 10 with me.

May your holiday season be as joyful and bug-free as a perfectly written Python script🎄.

Don’t forget to stay awesome! 🌟

Divya Anne Selvaraj

Editor-in-Chief

🐍 Python in the Tech 💻 Jungle 🌳

🗞️News

5 great new features in Django 5: The new version brings features such as streamlined form rendering and enhanced model fields, including a new GeneratedField type. Read to learn how the update works for you through enhancements like expanded support for async views and more.

Python 3.12.1 maintenance release: The first maintenance release of the 3.12 series brings 400+ bug fixes on top of the series' notable features, like flexible f-string parsing and improved performance. Removals in the series include wstr, some unittest elements, and previously deprecated modules. Read to learn more and discover a 5% overall performance boost, with specifics like PEP 709 and BOLT binary optimizer support.

2FA Requirement for PyPI from 1st January, 2024: PyPI Admins will activate the requirement for PyPI.org on Jan 1, 2024 for all users and all projects. Read if you are responsible for a PyPI account that publishes packages.

💼Case Studies and Experiments🔬

Facebook Network Analysis: This case study on the Facebook network uses NetworkX to analyze ten friends lists for insights into network structure and centrality measures. Read for valuable insights into social network analysis and to discover practical knowledge on structure, centrality measures, and dynamics.

📊Analysis

Parallel Python within the same process or hacking around the cursed GIL with a hand-rolled library loader: This article tackles Python's GIL hindering parallelism in databases like DuckDB, proposing a unique solution—a hand-rolled library loader. Read to discover a proof-of-concept extension for DuckDB showing substantial performance gains in parallel Python execution.

How Many Lines of C it Takes to Execute a + b in Python?: This article delves into CPython internals and explores the PyTypeObject structure, the abstract object interface, and the CPython VM's role. Read to discover how types implement operators, populate function tables, and perform dynamic dispatch.

🎓 Tutorials and Guides 🤓

Serialize Your Data With Python: This tutorial covers various formats such as textual vs. binary, schemaless vs. schema-based, and general-purpose vs. specialized. Read to learn how to serialize Python objects, using formats foreign to Python, send executable code over the network, gain a deep understanding of data interchange formats, and master the ability to persist and transfer stateful objects.

Building Robust Real-Time Data Pipelines With Python, Apache Kafka, and the Cloud: This comprehensive guide covers Kafka architecture, cloud deployment, Python pipelines, PySpark scaling, and practical examples. Read to enhance your real-time data processing skills.

Build a Hangman Game With Python and PySimpleGUI: This tutorial covers setting up the project, sketching the GUI, coding the game's logic, and handling results. Read to enhance your Python skills with PySimpleGUI and delve into GUI-based game development.

How to Deploy a Python Flask app with Heroku: This tutorial covers creating a basic Flask project, setting up GitHub and Heroku, and configuring the app for production. Read for insights into Heroku's user-friendly interface and extensive add-ons, and Flask's minimalism and flexibility.

Serverless AI Inferencing Using Python: This article covers the entire process of using Fermyon Serverless AI which is in private beta, starting with Spin installation and ending with application configuration. Read for code examples that facilitate the creation of sentiment analysis applications, and deployment to Fermyon Cloud streamlined with Spin commands.

Geometric Generator Models: This article explores geometric network generator models in networkx, dissecting models like Random Geometric Graphs and Waxman Graphs with practical insights using the Tesla Supercharger network as an example. Read for practical guidance in spatial network modeling.

How to Build an Automated Email System for Job Applications with Python: This detailed step by step guide takes you through setting up an email server connection using the smtplib package, adding various content types using MIME, and sending personalized emails to multiple recipients using a CSV file. Read for comprehensive code snippets and to set up your own job application automation.

🔑 Best Practices and Code Optimization 🔏

Boosting Python development speed with Ruff: An all-in-one lightning fast linter: This article unveils Ruff, a Rust-based Python linter offering remarkable speed, up to 150 times faster than Flake8. Read to learn how to integrate Ruff into your project and discover over 700 rules that make it a potent replacement for Flake8 and result in accelerated development workflows.

Python Hinting and Linting in Visual Studio Code: This article explores Python linting in Visual Studio Code, highlighting the significance of code readability and offering practical tips for efficient development. Read to learn how to optimize your coding environment by following detailed screen recordings and explanations.

Django: Defer a model field by default: This article delves into Django's field deferring to optimize queries with large fields like blog post bodies. Read to learn how to implement default field deferring and overcome limitations for improved database performance.

Code injection in Python — examples and prevention: This article delves into common vulnerabilities like user-controlled inputs, insecure use of eval(), lack of validation, dynamic code construction, and insecure deserialization. Read to learn about mitigation strategies, such as safeguarding user inputs, secure use of eval(), input validation, and strong access controls.

How to Set Up GitHub OAuth in a Django App for User Authentication: This article guides Django developers in implementing GitHub OAuth for enhanced user authentication and personalized experiences. Read for a step-by-step guide to improve security and ensure seamless GitHub integration in Django projects.

🧠 Expert insight 📚

Here’s an excerpt from “Chapter 3, Deep Learning Fundamentals” in the book Python Deep Learning - Third Edition by Ivan Vasilev published in November 2023.

Applications of Deep Learning

ML in general, and DL in particular, is producing more and more astonishing results in terms of the quality of predictions, feature detection, and classification. Many of these recent results have made the news. Such is the pace of progress that some experts are worrying that machines will soon be more intelligent than humans. But I hope that any such fears you might have will be alleviated after you have read this book. For better or worse, we’re still far from machines having human-level intelligence.

In Chapter 2, we mentioned how DL algorithms have occupied the leaderboard of the ImageNet competition. These algorithms have also been successful enough to make the jump from academia to industry.

Let’s talk about some real-world use cases of DL:

Nowadays, new cars have a suite of safety and convenience features that aim to make the driving experience safer and less stressful. One such feature is automated emergency braking if the car sees an obstacle. Another one is lane-keeping assist, which allows the vehicle to stay in its current lane without the driver needing to make corrections with the steering wheel. To recognize lane markings, other vehicles, pedestrians, and cyclists, these systems use a forward-facing camera.

One of the most prominent suppliers of such systems, Mobileye, has produced custom chips that use CNNs to detect these objects on the road ahead. To give you an idea of the importance of this sector, in 2017, Intel acquired Mobileye for $15.3 billion. This is not an outlier, and Tesla’s famous Autopilot system also relies on CNNs to achieve the same results. The former director of AI at Tesla, Andrej Karpathy, is a well-known researcher in the field of DL. We can speculate that future autonomous vehicles will also use deep networks for computer vision.

Both Google’s Vision API and Amazon’s Rekognition services use DL models to provide various computer vision capabilities. These include recognizing and detecting objects and scenes in images, text recognition, face recognition, content moderation, and so on.

If these APIs are not enough, you can run your own models in the cloud. For example, you can use Amazon’s AWS DL Amazon Machine Images (AMIs), which are virtual machines that come configured with some of the most popular DL libraries. Google offers a similar service with their Cloud AI, but they’ve gone one step further. They created tensor processing units (TPUs) – microprocessors that are optimized for fast NN operations such as matrix multiplication and activation functions.

DL has a lot of potential for medical applications. However, strict regulatory requirements, as well as patient data confidentiality, have slowed down its adoption. Nevertheless, there are several areas in which DL could have a high impact:

Medical imaging is an umbrella term for various non-invasive methods of creating visual representations of the inside of the body. Some of these include magnetic resonance images (MRIs), ultrasound, computed axial tomography (CAT) scans, X-rays, and histology images. Typically, such an image is analyzed by a medical professional to determine the patient’s condition.

Computer-aided diagnosis, and computer vision, in particular, can help specialists by detecting and highlighting important features of images. For example, to determine the degree of malignancy of colon cancer, a pathologist would have to analyze the morphology of the glands using histology imaging. This is a challenging task because morphology can vary greatly. A DNN could segment the glands from the image automatically, leaving the pathologist to verify the results. This would reduce the time needed for analysis, making it cheaper and more accessible.

Another medical area that could benefit from DL is the analysis of medical history records. When a doctor diagnoses a patient’s condition and prescribes treatment, they consult the patient’s medical history first. A DL algorithm could extract the most relevant and important information from those records, even if they are handwritten. In this way, the doctor’s job would be made easier, and the risk of errors would also be reduced.

One area where DNNs have already had an impact is in protein folding. Proteins are large, complex molecules, whose function depends on their 3D shape. The building blocks of proteins are amino acids, and their sequence determines the shape of the protein. The protein folding problem seeks to understand the relationship between the initial amino acid sequence and the final 3D shape of the protein. DeepMind’s AlphaFold 2 model (believed to be based on transformers) has managed to predict 200 million protein structures, which represents almost all known cataloged proteins.

Google’s Neural Machine Translation API uses – you guessed it – DNNs for machine translation.

Siri, Google Assistant, and Amazon Alexa rely on deep networks for speech recognition.

AlphaGo is an AI machine based on DL that made the news in 2016 by beating the world Go champion, Lee Sedol. AlphaGo had already made the news in January 2016, when it beat the European champion, Fan Hui. At the time, however, it seemed unlikely that it could go on to beat the world champion. Fast-forward a couple of months and AlphaGo achieved this remarkable feat by winning the five-game series 4-1.

This was an important milestone because Go has many more possible game variations than other games, such as chess, and it’s impossible to consider every possible move in advance. Also, unlike chess, in Go, it’s very difficult to even judge the current position or value of a single stone on the board. In 2017, DeepMind released an updated version of AlphaGo called AlphaZero, and in 2019, they released a further update called MuZero.

Tools such as GitHub Copilot and ChatGPT utilize generative DNN models to transform natural language requests into source code snippets, functions, and entire programs. We already mentioned Stable Diffusion and DALL-E, which can generate realistic images based on text description.

With this short list, we have aimed to cover the main areas in which DL is applied, such as computer vision, NLP, speech recognition, and reinforcement learning (RL). This list is not exhaustive, however, as there are many other uses for DL algorithms. Still, I hope this has been enough to spark your interest. Next, we’ll formally introduce two of the most popular DL libraries – PyTorch and Keras.

Introducing popular DL libraries

We already implemented a simple example with PyTorch in Chapter 1. In this section, we’ll introduce this library, and Keras, more systematically. Let’s start with the common features of most DNN libraries:

All libraries use Python.

The basic unit for data storage is the tensor. Mathematically, the definition of a tensor is more complex, but in the context of DL libraries, they are multi-dimensional (with an arbitrary number of axes) arrays of base values.

NNs are represented as a computational graph of operations. The nodes of the graph represent the operations (weighted sum, activation function, and so on). The edges represent the flow of data, which is how the output of one operation serves as an input for the next one. The inputs and outputs of the operations (including the network inputs and outputs) are tensors.

All libraries include automatic differentiation. This means that all you need to do is define the network architecture and activation functions, and the library will automatically figure out all of the derivatives required for training with backpropagation.

So far, we’ve referred to GPUs in general, but in reality, the vast majority of DL projects work exclusively with NVIDIA GPUs. This is because of the better software support NVIDIA provides. These libraries are no exception – to implement GPU operations, they rely on the CUDA Toolkit in combination with the cuDNN library. cuDNN is an extension of CUDA, built specifically for DL applications. As mentioned in the Applications of DL section, you can also run your DL experiments in the cloud.
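The computational-graph and automatic-differentiation points in the list above can be illustrated with a minimal sketch in pure Python. The `Node` class and its methods are hypothetical names made up for this illustration and are not part of PyTorch or Keras; real libraries work on tensors rather than single floats and are far more optimized.

```python
class Node:
    """A scalar value in a computational graph (hypothetical helper)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # Each entry is (parent_node, local_derivative_of_this_node_wrt_parent)
        self.parents = parents

    def __add__(self, other):
        return Node(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Node(self.value * other.value,
                    ((self, other.value), (other, self.value)))

    def relu(self):
        slope = 1.0 if self.value > 0 else 0.0
        return Node(max(self.value, 0.0), ((self, slope),))

    def backward(self):
        # Order the graph topologically, then apply the chain rule
        # from the output back to the inputs
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in node.parents:
                parent.grad += node.grad * local


# One "neuron": a weighted sum followed by an activation function
x, w, b = Node(2.0), Node(3.0), Node(-1.0)
y = (x * w + b).relu()
y.backward()
print(y.value, w.grad, x.grad, b.grad)  # 5.0 2.0 3.0 1.0
```

Only the forward computation is written by hand here; the derivatives with respect to `w`, `x`, and `b` fall out of the recorded graph, which is the idea the libraries apply at scale.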

PyTorch is an independent library, while Keras is built on top of TF and acts as a user-friendly TF interface. We’ll continue by implementing a simple classification example using both PyTorch and Keras.

Classifying digits with Keras

Keras exists either as a standalone library with TF as the backend or as a sub-component of TF itself. You can use it in both flavors. To use Keras as part of TF, we need only to install TF itself. Once we’ve done this, we can use the library with the following import:

import tensorflow.keras

The standalone Keras supports different backends besides TF, such as Theano. In this case, we can install Keras itself and then use it with the following import:

import keras

The large majority of Keras’s use is with the TF backend. The author of Keras recommends using the library as a TF component (the first option) and we’ll do so in the rest of this book.

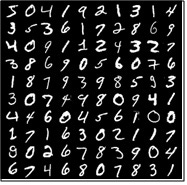

In this section, we’ll use Keras via TF to classify the images of the MNIST dataset. It’s comprised of 70,000 examples of digits that have been handwritten by different people. The first 60,000 are typically used for training and the remaining 10,000 for testing:

Figure 3.11 – A sample of digits taken from the MNIST dataset

We’ll build a simple MLP with one hidden layer. Let’s start:

One of the advantages of Keras is that it can import this dataset for you without you needing to explicitly download it from the web (it will download it for you):

import tensorflow as tf

(X_train, Y_train), (X_validation, Y_validation) = \
    tf.keras.datasets.mnist.load_data()

Here, (X_train, Y_train) are the training images and labels, and (X_validation, Y_validation) are the test images and labels.

We need to modify the data so that we can feed it to the NN. X_train contains 60,000 28×28 pixel images, and X_validation contains 10,000. To feed them to the network as inputs, we want to reshape each sample as a 784-pixel-long array, rather than a 28×28 two-dimensional matrix. We’ll also normalize them to the [0, 1] range. We can accomplish this with these two lines:

X_train = X_train.reshape(60000, 784) / 255
X_validation = X_validation.reshape(10000, 784) / 255
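The effect of flattening and scaling can be checked on a small stand-in array without downloading MNIST. The sketch below assumes NumPy is installed and uses a hypothetical batch of three random 28×28 images in place of the real data.

```python
import numpy as np

# Hypothetical stand-in for MNIST: 3 grayscale images with integer pixels in 0..255
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(3, 28, 28), dtype=np.uint8)

# Flatten each 28x28 image into a 784-long vector and scale the pixels to [0, 1]
flat = images.reshape(3, 784) / 255

print(flat.shape)  # (3, 784)
print(flat.min() >= 0.0, flat.max() <= 1.0)  # True True
```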

The labels indicate the value of the digit depicted in the images. We want to convert this into a 10-entry one-hot-encoded vector made up of 0s and just one 1 in the entry corresponding to the digit. For example, 4 is mapped to [0, 0, 0, 0, 1, 0, 0, 0, 0, 0]. Correspondingly, our network will have 10 output units:

classes = 10

Y_train = tf.keras.utils.to_categorical(Y_train, classes)
Y_validation = tf.keras.utils.to_categorical(Y_validation, classes)
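To see what one-hot encoding produces, here is a small NumPy sketch that builds the same kind of vectors by indexing an identity matrix. It is an illustration of the encoding only, not a description of how to_categorical is implemented, and the three labels are made up.

```python
import numpy as np

classes = 10
labels = np.array([4, 0, 9])  # hypothetical digit labels

# Row i of the identity matrix is the one-hot vector for class i
one_hot = np.eye(classes)[labels]

print(one_hot[0])              # [0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]
print(one_hot.argmax(axis=1))  # [4 0 9]
```

Taking the argmax of each row recovers the original digit, which is also how a predicted class is read off the network's 10 outputs.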

Define the NN. In this case, we’ll use the Sequential model, where each layer serves as an input to the next. In Keras, Dense means a fully connected layer. We’ll use a network with one hidden layer with 100 units, BN, ReLU activation, and softmax output:

from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, BatchNormalization, Activation

input_size = 784
hidden_units = 100

model = Sequential([
    Dense(hidden_units, input_dim=input_size),
    BatchNormalization(),
    Activation('relu'),
    Dense(classes),
    Activation('softmax')
])
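As a rough picture of what this stack computes, the following NumPy sketch runs a forward pass through two fully connected layers with ReLU and softmax. It is a simplification under stated assumptions: the weights are random rather than trained, batch normalization is left out for brevity, and the helper names `softmax` and `forward` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
input_size, hidden_units, classes = 784, 100, 10

# Randomly initialized parameters (a trained model would learn these)
W1 = rng.normal(0, 0.05, size=(input_size, hidden_units))
b1 = np.zeros(hidden_units)
W2 = rng.normal(0, 0.05, size=(hidden_units, classes))
b2 = np.zeros(classes)


def softmax(z):
    # Subtract the row-wise maximum for numerical stability
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


def forward(x):
    hidden = np.maximum(x @ W1 + b1, 0)  # fully connected layer + ReLU
    return softmax(hidden @ W2 + b2)     # fully connected layer + softmax


batch = rng.random((5, input_size))      # 5 fake flattened images
probs = forward(batch)

print(probs.shape)                                # (5, 10)
print(np.allclose(probs.sum(axis=1), 1.0))        # True
print(W1.size + b1.size + W2.size + b2.size)      # 79510 weights and biases
```

Each row of `probs` is a probability distribution over the 10 digits, which is why the output layer has one unit per class.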

Now, we can define our gradient descent parameters. We’ll use the Adam optimizer and categorical cross-entropy loss (this is cross entropy, optimized for softmax outputs):

model.compile(
    loss='categorical_crossentropy',
    metrics=['accuracy'],
    optimizer='adam')
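For intuition about the loss, here is a minimal NumPy sketch of categorical cross-entropy on a made-up three-class example. The function name and the clipping constant `eps` are assumptions for illustration; the details of Keras's own implementation may differ.

```python
import numpy as np


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # Clip to avoid log(0), then average -sum(y_true * log(y_pred)) over the batch
    y_pred = np.clip(y_pred, eps, 1.0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))


y_true = np.array([[0, 0, 1], [1, 0, 0]])                   # one-hot targets
confident = np.array([[0.05, 0.05, 0.9], [0.8, 0.1, 0.1]])  # mostly right
uncertain = np.array([[0.4, 0.3, 0.3], [0.3, 0.4, 0.3]])    # mostly wrong

loss_confident = categorical_crossentropy(y_true, confident)
loss_uncertain = categorical_crossentropy(y_true, uncertain)
print(loss_confident < loss_uncertain)  # True
```

Because the targets are one-hot, only the predicted probability of the correct class contributes to each sample's loss, so putting more probability on the right digit lowers it.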

Next, run the training for 20 epochs with a batch size of 100. In Keras, we can do this with the fit method, which iterates over the dataset internally. Keras will default to GPU training, but if a GPU is not available, it will fall back to the CPU:

model.fit(X_train, Y_train, batch_size=100, epochs=20,
          verbose=1)
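A quick bit of arithmetic shows how much work the fit call above represents. The sketch uses the batch size and epoch count passed in the code, a hypothetical `batch_slices` helper to show how a dataset can be cut into mini-batches, and it ignores details such as shuffling.

```python
import math

samples, batch_size, epochs = 60_000, 100, 20  # values used in the fit call above


def batch_slices(n, size):
    # Yield (start, stop) index pairs that cover n samples in chunks of `size`
    for start in range(0, n, size):
        yield start, min(start + size, n)


steps_per_epoch = math.ceil(samples / batch_size)
total_updates = steps_per_epoch * epochs

print(steps_per_epoch)                          # 600
print(total_updates)                            # 12000
print(next(batch_slices(samples, batch_size)))  # (0, 100)
```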

All that’s left to do is add code to evaluate the network’s accuracy on the test data:

score = model.evaluate(X_validation, Y_validation,
                       verbose=1)
print('Validation accuracy:', score[1])
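The accuracy figure itself is simple to reproduce by hand. This NumPy sketch, using made-up predictions for four samples and three classes, compares the most probable class with the one-hot label and averages the matches.

```python
import numpy as np

# Hypothetical predicted probabilities for 4 samples and 3 classes
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.3, 0.4, 0.3],
                  [0.2, 0.2, 0.6]])
# One-hot ground truth: classes 0, 1, 0, 2
y_true = np.array([[1, 0, 0],
                   [0, 1, 0],
                   [1, 0, 0],
                   [0, 0, 1]])

predicted = probs.argmax(axis=1)  # most probable class per sample
actual = y_true.argmax(axis=1)    # index of the 1 in each one-hot row
accuracy = float((predicted == actual).mean())

print(accuracy)  # 0.75
```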

And that’s it. The validation accuracy will be about 97.7%, which is not a great result, but this example runs in less than 30 seconds on a CPU. We can make some simple improvements, such as a larger number of hidden units, or a higher number of epochs. We’ll leave those experiments to you so that you can familiarize yourself with the code.

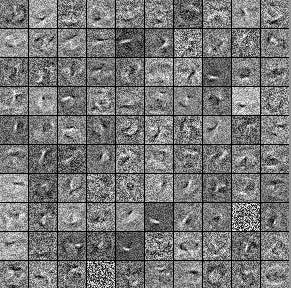

To see what the network has learned, we can visualize the weights of the hidden layer. The following code allows us to obtain them:

weights = model.layers[0].get_weights()

Reshape the weights for each unit back to a 28×28 two-dimensional array and then display them:

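import matplotlib.pyplot as plt
import matplotlib.cm as cm
import numpy

fig = plt.figure()
# weights[0] is the layer's weight matrix; transpose it to get one row per hidden unit
w = weights[0].T
for unit in range(hidden_units):
    # Draw each unit's 784 weights as a 28x28 image in a 10x10 grid
    ax = fig.add_subplot(10, 10, unit + 1)
    ax.axis("off")
    ax.imshow(numpy.reshape(w[unit], (28, 28)),
              cmap=cm.Greys_r)
plt.show()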

We can see the result in the following figure:

Figure 3.12 – A composite figure of what was learned by all the hidden units
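The reshaping in the visualization code can be checked without a trained model. The sketch below uses a random NumPy array as a stand-in for the hidden layer's weight matrix, assuming the usual (inputs, units) layout, and confirms that each unit's 784 weights map back onto the 28×28 pixel grid.

```python
import numpy as np

hidden_units = 100
# Stand-in for weights[0]: a weight matrix of shape (inputs, units)
kernel = np.random.default_rng(0).normal(size=(784, hidden_units))

w = kernel.T                 # one row of 784 weights per hidden unit
tile = w[0].reshape(28, 28)  # weights of unit 0 arranged as an image

print(w.shape, tile.shape)   # (100, 784) (28, 28)
# The weight attached to pixel (row 3, column 5) sits at flat index 3 * 28 + 5
print(tile[3, 5] == kernel[3 * 28 + 5, 0])  # True
```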

Now, let us see the example for PyTorch…

Packt subscribers can continue reading the chapter for free here. You can buy the book, Python Deep Learning - Third Edition by Ivan Vasilev here or just buy Chapter 3 here.

And that’s a wrap.

We have an entire range of newsletters with focused content for tech pros. Subscribe to the ones you find the most useful here. The complete PythonPro archives can be found here.

See you next on the 3rd of January!