PythonPro #15: Python 3.12.2 Updates, CUDA Basics, Decision Tree Classifiers, Image Embeddings, and Django Testing Tips

Bite-sized actionable content, practical tutorials, and resources for Python Programmers and Data Scientists

Welcome to a brand new issue of PythonPro!

In today’s Expert Insight we bring you an exclusive excerpt from the recently published book, Machine Learning Infrastructure and Best Practices for Software Engineers, that will teach you how to train, test, and evaluate decision tree classifiers for machine learning, highlighting the importance of explainability in understanding model decisions and data patterns.

News Highlights: Python 3.12.2 is a maintenance release of the 3.12 series, which brought features like more flexible f-strings and an estimated 5% performance increase, while Numba 0.59.0 adds support for Python 3.12, offering improved JIT compilation for Python and NumPy code.

Here are my top 5 picks from our learning resources today:

Dive in, and let me know what you think about this issue in today’s survey!

Stay awesome!

Divya Anne Selvaraj

Editor-in-Chief

P.S.: If you have any food for thought, feedback, or would like us to find you a Python learning resource on a particular subject for our next issue, take the survey!

We love taking requests. Any resource preceded by “👨💻” aligns with what our readers have asked for.

🐍 Python in the Tech 💻 Jungle 🌳

🗞️News

Python 3.12.2 released: This is a maintenance update to Python 3.12 featuring over 350 bug fixes, build improvements, and documentation updates, alongside major features like more flexible f-strings, buffer protocol support, a new debugging API, isolated subinterpreters, enhanced error messages, and performance boosts estimated at 5%. Read to learn more.

Numba now supports Python 3.12: Version 0.59.0 is a major release of the open-source Just-In-Time (JIT) compiler that translates a subset of Python and NumPy code into fast machine code. Read to learn about the latest enhancements in Numba that improve its performance and compatibility, specifically with Python 3.12.

💼Case Studies and Experiments🔬

Accelerating materials research with a comprehensive data management tool - a case study on an electrochemical laboratory: This study demonstrates a tool for materials research, focusing on electrochemistry, to streamline the entire data lifecycle from acquisition through analysis to publication. Read to understand the importance of structured, FAIR-compliant data, and gain insights into leveraging SQL databases and Python for data handling and analysis.

👨💻GDAL and PROJ Libraries Integrated with GRASS GIS for Terrain Modelling of Georeferenced Raster Images: This study integrates GDAL, PROJ, and GRASS GIS for advanced geospatial data processing in topographic analysis, emphasizing scripting methods for terrain modeling. Read for insights on enhancing the accuracy and functionality of topographic mapping.

📝Listicles

10 Specialized Python libraries for Unique Tasks: From front-end and back-end development with Taipy to handling PDFs with PyPDF2, and managing dates and times with Arrow, these libraries cater to unique programming needs. Read to discover Python's broad utility beyond its mainstream applications.

10 Python datetime pitfalls, and what libraries are (not) doing about it: This article critiques the Python datetime library for its confusing design and numerous pitfalls, such as the handling of naive vs. aware datetimes, DST issues, and inconsistent behavior, and proposes a new library. Read to gain insights into the complexities and limitations of datetime handling in Python.

📊Analysis

👨💻Network Management and Automation with Python: This paper highlights the use of Python-based tools (Nornir, Scrapli, and FastAPI) in network automation, showcasing their effectiveness in simplifying network device management, configuration, and operations. Read to learn about the advantages of integrating network automation tools and technologies to optimize management practices.

👨💻Deep Learning-based Embedded Intrusion Detection System for Automotive CAN: This paper describes the implementation of a deep-CNN model for intrusion detection on automotive CAN networks using FPGA-based ECU architecture. Python frameworks like TensorFlow were used for defining the model along with the Vitis AI Runtime (VART) APIs. Read to learn more.

🎓 Tutorials and Guides 🤓

🎥Getting Started With CUDA for Python Programmers: This video tutorial simplifies the complexities of CUDA programming and demonstrates its accessibility when combined with PyTorch. Watch to learn how to harness the power of GPUs for enhanced performance in your projects.

👨💻Generating image embeddings on a GPU with LLaVA and llama-cpp-python: This article details experiments on image-to-image and query-to-image similarity. Read to learn about the practical applications of image embeddings in tasks such as semantic image search, automatic tagging, and the potential for real-time processing on consumer GPUs.

👨💻Machine Learning Model Deployment-A Beginner’s Guide: This article covers best practices and tools, and provides a detailed tutorial on deploying ML models using Python with frameworks like Flask, Django, and Streamlit, emphasizing the importance of making models accessible for real-world applications. Read to learn how to deploy ML models effectively.

How to cache data using GDB's Python API: This guide to enhancing the performance and efficiency of custom extensions highlights methods for caching within gdb.Objfile and gdb.Progspace object types using built-in dictionaries for user-defined attributes. Read for insights into effective data management techniques for developing complex GDB Python extensions.

Python Setup for macOS: This guide details setting up Python on macOS using modern tools like pyenv and poetry instead of traditional methods. Read to learn how to modernize your Python development setup on macOS to ensure you use the latest Python versions and manage dependencies more effectively.

Google Trends API with Python (PyTrends simple alternative): This article introduces SerpApi as a superior alternative to PyTrends for working with Google Trends data using Python. Read for a guide through setting up SerpApi, writing Python code to scrape Google Trends data, and adjusting search parameters.

👨💻Deploying Python Applications in Azure - A Guide to Containerization and Best Practices: This article details the process of deploying Python projects as Docker containers in Azure, covering containerization, deployment options, and Python-specific configurations for web frameworks like Django and Flask. Read to explore various development and deployment scenarios and best practices.

👨💻Create applications with QtQuick: This comprehensive tutorial guides you through building applications with Qt Quick, using PySide6 and the Qt Modeling Language (QML) to create dynamic, mobile-focused UIs. Read to learn how to employ advanced features like animations, transformations, and seamless Python-QML signal communication for real-time UI updates.

👨💻The Hardware Engineer’s Guide to Running Python Tests in Linux Mode on ADP3450: This article demystifies using Linux and Python for hardware testing, providing a step-by-step approach for engineers unfamiliar with firmware or software-focused workflows. Read to enhance your skills in integrating software with hardware testing.

🔑 Best Practices and Code Optimization 🔏

Abandoned Code - The Hidden Risks of Using Unmaintained Software: Risks discussed include security vulnerabilities, compatibility issues, and the burden of finding or creating alternatives. Read to understand the importance of verifying the current status of dependencies to ensure the security of your projects.

Syntax Error #11 - Debugging Python: This article focuses on non-technical aspects, tool usage, avoiding panic, adopting a step-by-step process, and utilizing specific Python tools and debuggers like snoop and birdseye. Read to enhance your debugging efficiency.

Improving Django testing with seed database: Seed databases store initial data sets, enhancing testing and deployment processes by reducing migration time. Read to learn how to implement seed databases in Django projects to expedite CI/CD pipelines, improve testing workflows, and maintain efficient development practices.

21 Django Cheat Sheets: This compilation of cheat sheets covers various aspects of Django framework development, including models, class-based views, basic project setup, and specific features like Djaneiro. Read to enhance your efficiency and understanding of the Django framework.

Modern Good Practices for Python Development: This article outlines modern best practices for Python development, emphasizing the use of the latest Python version, structured project setup with pyproject.toml, the src layout, virtual environments, and secure package management. Read to learn how to ensure code quality and maintainability by leveraging the latest Python features.

Take the Survey, Make a resource request!

🧠 Expert insight 📚

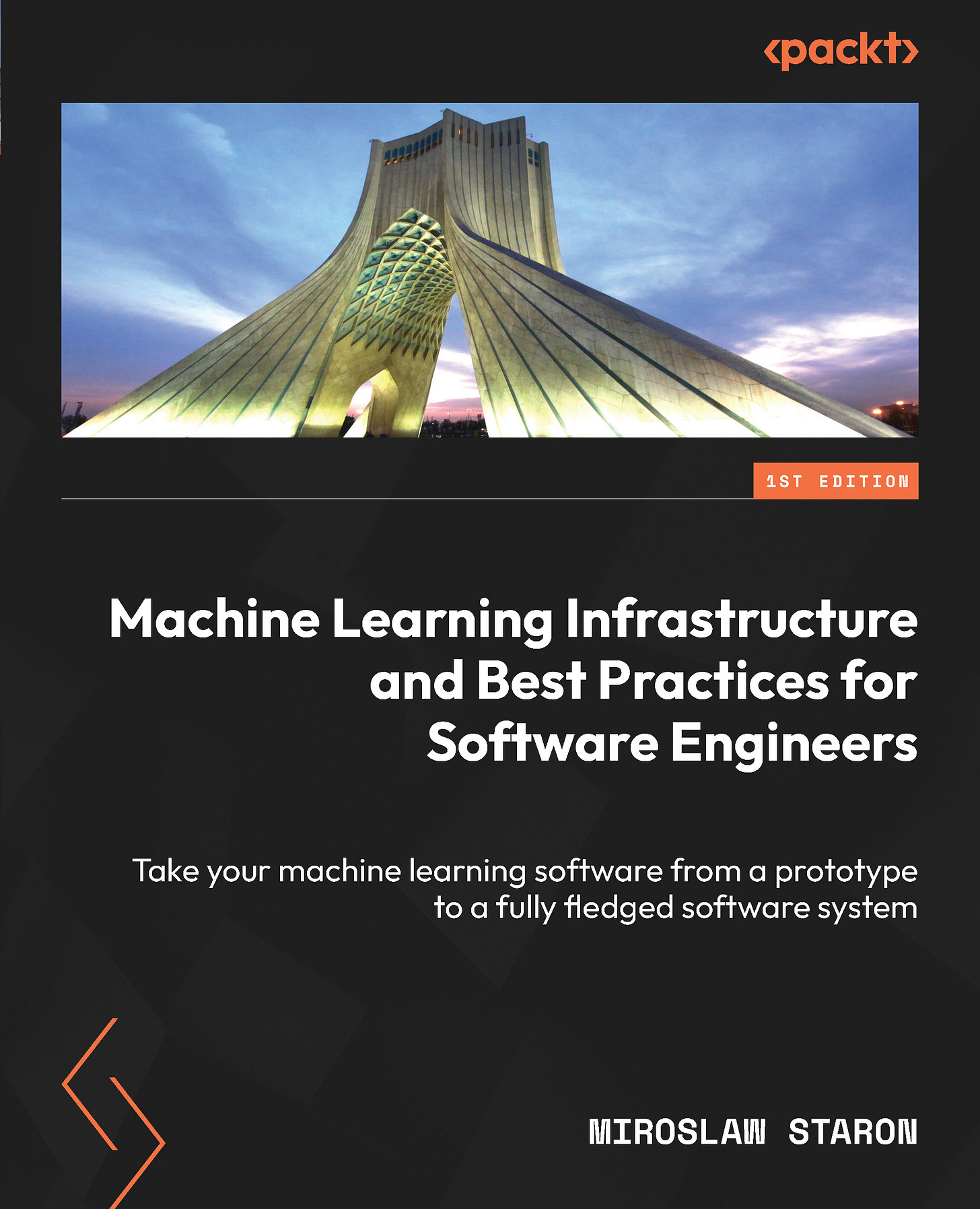

Here’s an exclusive excerpt from “Chapter 13, Training and Evaluating Classical Machine Learning Systems and Neural Networks” in the book, Machine Learning Infrastructure and Best Practices for Software Engineers, by Miroslaw Staron, published in January 2024.

Training classical machine learning models

We’ll start by training a model that lets us look inside it. We’ll use the CART decision tree classifier, where we can visualize the actual decision tree that is trained. We’ll use the same numerical data we used in the previous chapter. First, let’s read the data and create the train/test split:

# read the file with data using openpyxl
import pandas as pd

# we read the data from the excel file,
# which is the defect data from the ant 1.3 system
dfDataAnt13 = pd.read_excel('./chapter_6_dataset_numerical.xlsx',
                            sheet_name='ant_1_3',
                            index_col=0)

# prepare the dataset
import sklearn.model_selection

X = dfDataAnt13.drop(['Defect'], axis=1)
y = dfDataAnt13.Defect

X_train, X_test, y_train, y_test = \
    sklearn.model_selection.train_test_split(X, y, random_state=42, train_size=0.9)

The preceding code reads an Excel file named 'chapter_6_dataset_numerical.xlsx' using the pd.read_excel() function from pandas. The file is read into a DataFrame called dfDataAnt13. The sheet_name parameter specifies the sheet within the Excel file to read, while the index_col parameter sets the first column as the index of the DataFrame.

The code prepares the dataset for training a machine learning model. It assigns the independent variables (features) to the X variable by dropping the 'Defect' column from the dfDataAnt13 DataFrame using the drop() method. The dependent variable (target) is assigned to the y variable by selecting the 'Defect' column from the dfDataAnt13 DataFrame.

The sklearn.model_selection.train_test_split() function is used to split the dataset into training and testing sets. The X and y variables are split into X_train, X_test, y_train, and y_test variables. The train_size parameter is set to 0.9, indicating that 90% of the data will be used for training and the remaining 10% will be used for testing. The random_state parameter is set to 42 to ensure reproducibility of the split.
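To make the role of train_size and random_state more concrete, here is a minimal standard-library sketch of a seeded 90/10 split. It is only an illustration of the idea, not how scikit-learn implements train_test_split(), and the helper name split_train_test and the stand-in rows are assumptions made for this example.

```python
import random


def split_train_test(rows, train_size=0.9, seed=42):
    """Shuffle row indices with a fixed seed and cut them into train/test parts."""
    indices = list(range(len(rows)))
    random.Random(seed).shuffle(indices)   # same seed -> same shuffle on every run
    cut = round(len(rows) * train_size)    # number of rows kept for training
    train = [rows[i] for i in indices[:cut]]
    test = [rows[i] for i in indices[cut:]]
    return train, test


rows = list(range(100))  # stand-in for 100 rows of a dataset
train_a, test_a = split_train_test(rows)
train_b, test_b = split_train_test(rows)

print(len(train_a), len(test_a))  # 90 10
print(train_a == train_b)         # True: the split is reproducible
```

Changing the seed gives a different, but again repeatable, split, which is what makes results comparable between runs.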

Once the data has been prepared, we can import the decision tree library and train the model:

# now that we have the data prepared
# we import the decision tree classifier and train it
from sklearn.tree import DecisionTreeClassifier

# first we create an empty classifier
decisionTreeModel = DecisionTreeClassifier()

# then we train the classifier
decisionTreeModel.fit(X_train, y_train)

# and we test it for the test set
y_pred_cart = decisionTreeModel.predict(X_test)

The preceding code fragment imports the DecisionTreeClassifier class from the sklearn.tree module. An empty decision tree classifier object is created and assigned to the decisionTreeModel variable. This object will be trained on the dataset that was prepared in the previous fragment. The fit() method is called on the decisionTreeModel object to train the classifier. The fit() method takes the training data (X_train) and the corresponding target values (y_train) as input. The classifier will learn patterns and relationships in the training data to make predictions.
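To give a feel for what "learning patterns" means for a tree, here is a rough sketch of how a single split can be chosen: try candidate thresholds on one feature and keep the one with the lowest weighted Gini impurity (Gini is the default criterion of DecisionTreeClassifier). This is a simplified, one-feature illustration and not scikit-learn's implementation; the function names and the WMC values and labels are made up for the example.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_threshold(values, labels):
    """Return (threshold, impurity) for the split with the lowest weighted Gini."""
    best = (None, float("inf"))
    distinct = sorted(set(values))
    # candidate thresholds lie halfway between neighbouring distinct values
    for low, high in zip(distinct, distinct[1:]):
        threshold = (low + high) / 2
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (threshold, score)
    return best


# made-up WMC values and defect labels, for illustration only
wmc = [3, 5, 8, 12, 40, 45, 50, 70]
defect = [0, 0, 0, 0, 1, 1, 1, 1]

threshold, impurity = best_threshold(wmc, defect)
print(threshold, impurity)  # 26.0 0.0
```

A full tree repeats this search over every feature and then recursively on each side of the chosen split, which is how deep, nested rules emerge.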

The trained decision tree classifier is used to predict the target values for the test dataset (X_test). The predict() method is called on the decisionTreeModel object, passing X_test as the input. The predicted target values are stored in the y_pred_cart variable. The trained model now needs to be evaluated, so let’s compute its accuracy, precision, and recall:

# now, let's evaluate the model
from sklearn.metrics import accuracy_score
from sklearn.metrics import recall_score
from sklearn.metrics import precision_score

print(f'Accuracy: {accuracy_score(y_test, y_pred_cart):.2f}')
print(f'Precision: {precision_score(y_test, y_pred_cart, average="weighted"):.2f}, Recall: {recall_score(y_test, y_pred_cart, average="weighted"):.2f}')

This code fragment results in the following output:

Accuracy: 0.83

Precision: 0.94, Recall: 0.83
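To see where numbers like these come from, here is a minimal sketch that computes accuracy and the support-weighted precision and recall by hand. It uses made-up labels rather than the ant data, and weighted_scores is a hypothetical helper written for this illustration, not a scikit-learn function.

```python
from collections import Counter


def weighted_scores(y_true, y_pred):
    """Accuracy plus support-weighted precision and recall over all classes."""
    total = len(y_true)
    support = Counter(y_true)  # how many true examples each class has
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / total
    precision = recall = 0.0
    for label, count in support.items():
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        predicted = sum(p == label for p in y_pred)
        class_precision = tp / predicted if predicted else 0.0
        class_recall = tp / count
        weight = count / total  # each class counts in proportion to its support
        precision += weight * class_precision
        recall += weight * class_recall
    return accuracy, precision, recall


# made-up labels for illustration: 1 = defect-prone, 0 = not defect-prone
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 1, 1, 0, 0]

accuracy, precision, recall = weighted_scores(y_true, y_pred)
print(f"Accuracy: {accuracy:.2f}")
print(f"Precision: {precision:.2f}, Recall: {recall:.2f}")
```

One thing the sketch makes visible: with weighted averaging, recall always works out to the same value as accuracy, which is why both are 0.83 in the output above.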

The metrics show that the model is not that bad. It classified 83% of the data in the test set correctly. Its precision (0.94) is higher than its recall (0.83). Since recall is lowered by false negatives, this suggests that the model tends to miss some of the defect-prone modules in its predictions. However, the decision tree model lets us take a look inside the model and explore the pattern that it learned from the data. The following code fragment does this:

from sklearn.tree import export_text

tree_rules = export_text(decisionTreeModel, feature_names=list(X_train.columns))
print(tree_rules)

The preceding code fragment exports the decision tree in the form of text that we print. The export_text() function takes two arguments – the first one is the decision tree to visualize and the next one is the list of features. In our case, the list of features is the list of columns in the dataset.

The entire decision tree is quite complex in this case, but the first decision path looks like this:

|--- WMC <= 36.00
|   |--- ExportCoupling <= 1.50
|   |   |--- NOM <= 2.50
|   |   |   |--- NOM <= 1.50
|   |   |   |   |--- class: 0
|   |   |   |--- NOM > 1.50
|   |   |   |   |--- WMC <= 5.50
|   |   |   |   |   |--- class: 0
|   |   |   |   |--- WMC > 5.50
|   |   |   |   |   |--- CBO <= 4.50
|   |   |   |   |   |   |--- class: 1
|   |   |   |   |   |--- CBO > 4.50
|   |   |   |   |   |   |--- class: 0
|   |   |--- NOM > 2.50
|   |   |   |--- class: 0

This decision path looks very similar to a large if-then statement, which we could write ourselves if we knew the patterns in the data. This pattern is not simple, which means that the data is quite complex. It can be non-linear and require complex models to capture the dependencies. It can also require a lot of effort to find the right balance between the performance of the model and its ability to generalize from the data.
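To illustrate the if-then reading, the decision path printed above can be transcribed by hand into a plain Python function. This is only a sketch of the excerpted branch: the function name classify_module is made up for the example, and every branch that the excerpt does not show returns None instead of a guess.

```python
def classify_module(wmc, export_coupling, nom, cbo):
    """Follow the printed decision path; return 1 (defect-prone), 0, or None."""
    if wmc <= 36.0:
        if export_coupling <= 1.5:
            if nom <= 2.5:
                if nom <= 1.5:
                    return 0
                # here 1.5 < nom <= 2.5
                if wmc <= 5.5:
                    return 0
                if cbo <= 4.5:
                    return 1
                return 0
            return 0  # nom > 2.5
    return None  # branch not shown in the excerpt


print(classify_module(wmc=10, export_coupling=1, nom=2, cbo=3))  # 1
print(classify_module(wmc=10, export_coupling=1, nom=2, cbo=6))  # 0
print(classify_module(wmc=50, export_coupling=1, nom=2, cbo=3))  # None
```

Written this way, it becomes clear how many nested conditions even one branch of the tree contains, and why writing such rules by hand would be difficult without the model.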

So, here is my best practice for working with this kind of model.

Best practice #54: If you want to understand your numerical data, use models that provide explainability.

In the previous chapters, I advocated for using AutoML models as they are robust and save us a lot of trouble finding the right model. However, if we want to understand our data a bit better and understand the patterns, we can start with models such as decision trees. Their insight into the data provides us with a good overview of what we can get out of the data.

As a counter-example, let’s look at the data from another system in the same dataset. Let’s read it and perform the split:

# read the file with data using openpyxl
import pandas as pd

# we read the data from the excel file,
# which is the defect data from the camel 1.2 system
dfDataCamel12 = pd.read_excel('./chapter_6_dataset_numerical.xlsx',
                              sheet_name='camel_1_2',
                              index_col=0)

# prepare the dataset
import sklearn.model_selection

X = dfDataCamel12.drop(['Defect'], axis=1)
y = dfDataCamel12.Defect

X_train, X_test, y_train, y_test = \
    sklearn.model_selection.train_test_split(X, y, random_state=42, train_size=0.9)

Now, let’s train a new model for that data:

# now that we have the data prepared
# we import the decision tree classifier and train it
from sklearn.tree import DecisionTreeClassifier

# first we create an empty classifier
decisionTreeModelCamel = DecisionTreeClassifier()

# then we train the classifier
decisionTreeModelCamel.fit(X_train, y_train)

# and we test it for the test set,
# using the model trained on the camel data
y_pred_cart_camel = decisionTreeModelCamel.predict(X_test)

So far, so good – no errors, no problems. Let’s check the performance of the model:

# now, let's evaluate the model
from sklearn.metrics import accuracy_score
from sklearn.metrics import recall_score
from sklearn.metrics import precision_score

print(f'Accuracy: {accuracy_score(y_test, y_pred_cart_camel):.2f}')
print(f'Precision: {precision_score(y_test, y_pred_cart_camel, average="weighted"):.2f}, Recall: {recall_score(y_test, y_pred_cart_camel, average="weighted"):.2f}')

The performance, however, is not as high as it was previously:

Accuracy: 0.65

Precision: 0.71, Recall: 0.65

Now, let’s print the tree:

from sklearn.tree import export_text

# export the rules of the model trained on the camel data
tree_rules = export_text(decisionTreeModelCamel, feature_names=list(X_train.columns))
print(tree_rules)

As we can see, the results are also quite complex:

|--- WMC > 36.00
|   |--- DCC <= 3.50
|   |   |--- WMC <= 64.50
|   |   |   |--- NOM <= 17.50
|   |   |   |   |--- ImportCoupling <= 7.00
|   |   |   |   |   |--- NOM <= 6.50
|   |   |   |   |   |   |--- class: 0
|   |   |   |   |   |--- NOM > 6.50
|   |   |   |   |   |   |--- CBO <= 4.50
|   |   |   |   |   |   |   |--- class: 0
|   |   |   |   |   |   |--- CBO > 4.50
|   |   |   |   |   |   |   |--- ExportCoupling <= 13.00
|   |   |   |   |   |   |   |   |--- NOM <= 16.50
|   |   |   |   |   |   |   |   |   |--- class: 1
|   |   |   |   |   |   |   |   |--- NOM > 16.50
|   |   |   |   |   |   |   |   |   |--- class: 0
|   |   |   |   |   |   |   |--- ExportCoupling > 13.00
|   |   |   |   |   |   |   |   |--- class: 0
|   |   |   |   |--- ImportCoupling > 7.00
|   |   |   |   |   |--- class: 0
|   |   |   |--- NOM > 17.50
|   |   |   |   |--- class: 1
|   |   |--- WMC > 64.50
|   |   |   |--- class: 0

If we look at the very first decision in this tree and the previous one, it is based on the WMC feature. WMC means weighted methods per class and is one of the classical software metrics, introduced in the 1990s by Chidamber and Kemerer. The metric captures both the complexity and the size of the class (in a way), and it is quite logical that large classes are more defect-prone, simply because there is more chance to make a mistake if there is more source code. In the case of this model, this is a bit more complicated: the model recognizes that classes with a WMC over 36 are more prone to errors than others, apart from classes with a WMC over 64.5, which are less prone to errors. The latter is also a known phenomenon: large classes are more difficult to test and can therefore contain undiscovered defects.
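As a small illustration of the metric itself, WMC is the sum of the complexities of a class's methods; if every method is given a complexity of 1, it reduces to the number of methods. The sketch below computes it for one class and places the result relative to the two thresholds from the tree (36 and 64.5). The per-method complexity values and the band names are made up for the example.

```python
def wmc(method_complexities):
    """Weighted methods per class: the sum of the complexities of a class's methods."""
    return sum(method_complexities)


def wmc_band(value, lower=36.0, upper=64.5):
    """Place a WMC value in one of the three bands split by the tree's thresholds."""
    if value <= lower:
        return "low"
    if value <= upper:
        return "middle"
    return "high"


# hypothetical per-method complexity values for one class
complexities = [1, 4, 7, 2, 12, 9, 5]
value = wmc(complexities)

print(value, wmc_band(value))  # 40 middle
```

In the excerpt's reading of the tree, it is the middle band (over 36 but not over 64.5) that the model treats as more error-prone.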

Here is my next best practice, which is about the explainability of models.

Best practice #55: The best models are those that capture the empirical phenomena in the data.

Although machine learning models can capture any kind of dependencies, the best models are the ones that can capture logical, empirical observations. In the previous examples, the model could capture the software engineering empirical observations related to the size of the classes and their defect-proneness. Having a model that captures empirical relations leads to better products and explainable AI.

Packt subscribers can continue reading the chapter for free here. You can buy Machine Learning Infrastructure and Best Practices for Software Engineers, by Miroslaw Staron, here.

And that’s a wrap.

We have an entire range of newsletters with focused content for tech pros. Subscribe to the ones you find the most useful here. The complete PythonPro archives can be found here.

If you have any suggestions or feedback, or would like us to find you a Python learning resource on a particular subject, take the survey or just respond to this email!