PythonPro #31: Optimize ML Model Training with PyTorch 2.X, Essential Data Manipulation with Pandas, and Django ASGI Insights

Bite-sized actionable content, practical tutorials, and resources for Python programmers and data scientists

Welcome to a brand new issue of PythonPro!

In today’s Expert Insight, we bring you an excerpt from the recently published book, Accelerate Model Training with PyTorch 2.X, which discusses the various elements that contribute to the computational cost of training machine learning models and how to optimize them for more efficient training.

News Highlights: The Transparent Tribe hacking group targets India's government, defense, and aerospace sectors, and Tensorlake's Indexify is revolutionizing LLM applications by allowing real-time data extraction from various sources.

Here are my top 5 picks from our learning resources today:

Dive in, and let me know what you think about this issue in this month’s survey!

Stay awesome!

Divya Anne Selvaraj

Editor-in-Chief

P.S.: Today is your last chance to participate in this month’s survey. If you haven’t yet, we invite you to take the opportunity to tell us what you think about PythonPro so far, request a learning resource for future issues, share your thoughts on a recent development in the Python world, and earn a Packt Credit to buy a book of your choice. Take the survey!

🐍 Python in the Tech 💻 Jungle 🌳

🗞️News

Hackers Deploy Python, Golang, and Rust Malware on Indian Targets: The Transparent Tribe hacking group has been targeting the Indian government, defense, and aerospace sectors through a sophisticated malware campaign. Read to learn more.

Indexify by Tensorlake - An open-source, real-time data framework for LLM applications: The framework allows data from diverse sources such as video, audio, and PDFs to be extracted and queried in near real time. Read to learn how Indexify enables the development of responsive, up-to-date LLM applications.

💼Case Studies and Experiments🔬

Real-Time Brainwave Visualization - Integrating Muse EEG with Python: This article outlines the development of a Python application that streams EEG data from a Muse headband. Read for a detailed account of how Python-OSC is used to read OSC streams and how PyQt6 enables rapid GUI development.

CONTEXTS.py (CS.py) - A supervised contextual post-classification method to access multiple dimensions of complex geospatial objects: This paper introduces a new Python plugin for QGIS designed to identify and extract complex spatial objects from Earth imagery. Read to learn about the capabilities of CS.py.

📊Analysis

Why enterprises rely on JavaScript, Python, and Java: These languages remain the top programming languages for enterprise applications due to their proven stability, widespread use, and ongoing enhancements. Read to learn why Python continues to maintain its relevance.

How Python Compares Floats and Ints - When Equals Isn’t Really Equal: This article reveals discrepancies that arise from the precision limits of floating-point representation when it meets Python's arbitrary-precision integers. Read to gain insight into why seemingly equivalent numbers can produce different comparison results.
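A minimal illustration of the effect, with values chosen here for illustration rather than taken from the article: IEEE 754 doubles have a 53-bit significand, so not every integer above 2**53 can be stored exactly, and Python compares an int with a float by their exact mathematical values.

```python
# Doubles have a 53-bit significand, so not every integer above 2**53 fits exactly.
big = 2**53 + 1              # 9007199254740993
as_float = float(big)        # rounds to the nearest double, which is 2**53

print(as_float == 2**53)     # True: the conversion dropped the +1
print(big == as_float)       # False: int/float comparison uses exact values
print(int(as_float) == big)  # False: the lost bit cannot be recovered
```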

🎓 Tutorials and Guides 🤓

The Python calendar Module - Create Calendars With Python: This tutorial covers displaying calendars in the terminal, creating text and HTML calendars, formatting for specific locales, and using advanced calendar functions. Read to enhance your ability to handle date and time data in Python applications.
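A short sketch of a few standard-library calendar functions of the kind the tutorial covers; the year and month are arbitrary examples.

```python
import calendar

# Plain-text month calendar, as printed in a terminal (weeks start on Monday by default)
print(calendar.month(2024, 5))

# The same month as an HTML table, with weeks starting on Sunday
html = calendar.HTMLCalendar(firstweekday=calendar.SUNDAY).formatmonth(2024, 5)

# Lower-level helpers for date logic
first_weekday, days_in_month = calendar.monthrange(2024, 2)
print(calendar.day_name[first_weekday], days_in_month)  # Thursday 29 (English locale)
print(calendar.isleap(2024))                            # True
```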

How to Create Pivot Tables With pandas: This tutorial discusses the use of DataFrame.pivot_table() for data summarization and analysis. Read to learn how to build and manipulate pivot tables for sophisticated data analysis and visualization.

How to Iterate Over Multiple Lists Sequentially in Python: Key techniques discussed in the article include using itertools.chain() for sequential access across lists, nested for loops for a list of lists, the unpacking star (*) operator in Python 3+, and itertools.izip() (Python 2 only; in Python 3 the built-in zip() plays this role) for parallel iteration across multiple lists. Read to learn more.
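The techniques listed above can be sketched in Python 3 as follows; the list contents are arbitrary, and zip() stands in for the Python 2 izip().

```python
from itertools import chain

names = ["Ada", "Grace"]
more_names = ["Guido"]
scores = [95, 88]

# Sequential: chain() yields the items of each list in turn
combined = list(chain(names, more_names))

# List of lists: nested loops, star-unpacking into chain, or chain.from_iterable
nested = [[1, 2], [3], [4, 5]]
flat_loops = [item for sublist in nested for item in sublist]
flat_star = list(chain(*nested))
flat_iter = list(chain.from_iterable(nested))

# Parallel: zip pairs items by position and stops at the shortest list
pairs = list(zip(names, scores))

print(combined)   # ['Ada', 'Grace', 'Guido']
print(flat_star)  # [1, 2, 3, 4, 5]
print(pairs)      # [('Ada', 95), ('Grace', 88)]
```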

How To Create Daily Forecasts with A Python Weather API: This article teaches you how to use the Tomorrow.io API in Python. Read to enhance your ability to integrate real-time weather data into applications.

GraphQL-like features in Django Rest Framework (DRF): This article discusses enhancing DRF with GraphQL-like features using drf-flex-fields. Read to learn how to implement flexible, efficient data queries in DRF.

How to spend less time writing Django tests: This article shares insights on reducing the time spent writing Django tests by using Kolo, a tool for autogenerating integration tests. Read to learn how to enable faster development cycles and more reliable software deployments.

A Guide to Generating and Understanding SBOMs with Docker and Django-CMS: This guide highlights the importance of including all components, especially hard dependencies, in the SBOM to ensure comprehensive coverage. Read to learn about effective strategies for generating and managing SBOMs.

🔑Best Practices, Advice, and Code Optimization🔏

dict() is More Versatile Than You May Think: This article discusses creating empty dictionaries, copying dictionaries, and constructing them from mappings, iterables of pairs, and keyword arguments. Read to discover why {} is more efficient than dict() for creating empty dictionaries, and more.
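A quick tour of the dict() forms mentioned above, in plain standard-library Python; the keys and values are arbitrary.

```python
# Empty dictionaries: the literal avoids looking up the name "dict" and calling it
empty_literal = {}
empty_call = dict()

# Building the same dictionary from different kinds of input
from_mapping = dict({"a": 1, "b": 2})    # another mapping
from_pairs = dict([("a", 1), ("b", 2)])  # an iterable of key/value pairs
from_kwargs = dict(a=1, b=2)             # keyword arguments
mixed = dict([("a", 1)], b=2)            # pairs, then keyword arguments

# Copying: dict(original) creates a new, shallow copy
original = {"a": 1, "b": [2, 3]}
shallow = dict(original)
shallow["a"] = 100       # rebinding a key does not affect original
shallow["b"].append(4)   # the list object is shared, so original sees this change
```

Note the last line: because the copy is shallow, mutable values are shared between the two dictionaries.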

Testing with Python (part 5) - the different types of tests: This article categorizes and explains various types of tests in Python, focusing on unit, integration, end-to-end, and property tests. Read to gain an understanding of the different testing methodologies and how to apply them correctly.

Statically Typed Functional Programming with Python 3.12: This article promotes declarative operations, pattern matching in functions, and more to avoid complex class hierarchies and excessive exception use. Read to learn how to implement statically typed functional programming.

Python - Mock an inner import: Mocking imports that happen inside a function is essential for testing, but it requires working around the limitations of a traditional mock.patch. Read to learn how to effectively mock inner imports in Python to ensure accurate and comprehensive testing of functions with dynamic import statements.
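The article's exact technique may differ; one common approach, sketched here, is to patch sys.modules with unittest.mock.patch.dict, because an import statement checks sys.modules before searching for the module. The names heavy_dependency, load_report, and run_example are hypothetical.

```python
import sys
from unittest import mock


def load_report(path):
    # The import runs only when the function is called, so there is
    # no module-level name for a traditional mock.patch target.
    import heavy_dependency  # hypothetical third-party module

    return heavy_dependency.parse(path)


def run_example():
    fake_module = mock.MagicMock()
    fake_module.parse.return_value = {"rows": 3}

    # patch.dict temporarily inserts the fake module and restores
    # sys.modules when the with-block exits.
    with mock.patch.dict(sys.modules, {"heavy_dependency": fake_module}):
        result = load_report("report.csv")

    fake_module.parse.assert_called_once_with("report.csv")
    return result


print(run_example())  # {'rows': 3}
```

This works even when the real module is not installed, which is often exactly why the import was moved inside the function.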

ASGI deployment options for Django: Key servers discussed in the article include Daphne, Hypercorn, Uvicorn, and Granian. Read to learn about the need for ASGI servers to serve asynchronous applications effectively.

Take the Survey, Get a Packt credit!

🧠 Expert insight 📚

Here’s an excerpt from “Chapter 1: Deconstructing the Training Process” in the book, Accelerate Model Training with PyTorch 2.X by Maicon Melo Alves, published in May 2024.

Understanding the computational burden of the model training phase

…By using the terms computational cost or burden, we mean the computing power needed to execute the training process.

The higher the computational cost, the longer it takes to train the model. In the same way, the higher the computational burden, the more computing resources are required to train the model.

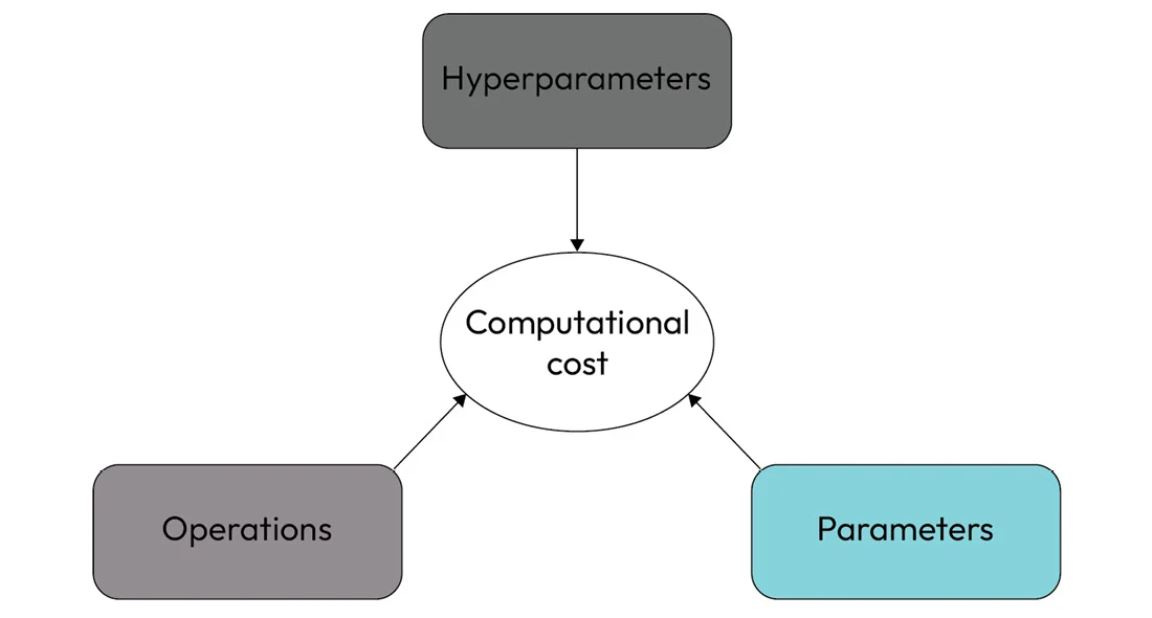

Essentially, we can say the computational burden of training a model is determined by three factors, as illustrated in Figure 1.6:

Figure 1.6 – Factors that influence the training computational burden

Each one of these factors contributes (to some degree) to the computational complexity imposed by the training process. Let’s talk about each one of them.

Hyperparameters

Hyperparameters define two aspects of neural networks: the neural network configuration and how the training algorithm works.

Concerning neural network configuration, the hyperparameters determine the number and type of layers and the number of neurons in each layer. Simple networks have a few layers and neurons, whereas complex networks have thousands of neurons spread across hundreds of layers. The number of layers and neurons determines the number of parameters of the network, which directly impacts the computational burden. Because the number of parameters has such a significant influence on the computational cost of the training step, we will discuss this topic later in this chapter as a separate performance factor.

Regarding how the training algorithm executes the training process, hyperparameters control the number of epochs and steps and determine the optimizer and loss function used during the training phase, among other things. Some of these hyperparameters have only a tiny influence on the computational cost of the training process. For example, if we change the optimizer from SGD to Adam, we will not see any significant impact on the computational cost of the training process.

Other hyperparameters, though, can considerably increase the training time. One of the most emblematic examples is the batch size. The larger the batch size, the fewer training steps are needed per epoch, so we can speed up the building process because the training phase executes fewer steps. On the other hand, each individual training step can take longer with big batch sizes, because the forward phase executed on each training step must process a larger input tensor. In other words, we have a trade-off here.

For example, consider a batch size of 32 for the Fashion-MNIST dataset. In this case, the input data dimension is 32 x 1 x 28 x 28, where 32, 1, and 28 represent the batch size, the number of channels (Fashion-MNIST images are grayscale, so there is a single channel), and the image height and width, respectively. Therefore, the input data comprises 25,088 values, which is the amount of data the forward phase must process. However, if we increase the batch size to 128, the input data grows to 100,352 values, which can result in a longer time to execute a single forward phase iteration.
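The batch-size arithmetic can be checked with a few lines of plain Python. The sketch assumes the standard 60,000-image Fashion-MNIST training split and that a final partial batch is kept (as with PyTorch's DataLoader default, drop_last=False); batch_stats is a hypothetical helper.

```python
from math import ceil, prod

TRAIN_IMAGES = 60_000      # size of the Fashion-MNIST training split
IMAGE_SHAPE = (1, 28, 28)  # channels, height, width


def batch_stats(batch_size):
    """Return (training steps per epoch, number of values in one input batch)."""
    steps = ceil(TRAIN_IMAGES / batch_size)    # a final partial batch still counts as a step
    values = prod((batch_size, *IMAGE_SHAPE))  # N x C x H x W
    return steps, values


for bs in (32, 128):
    steps, values = batch_stats(bs)
    print(f"batch size {bs:>3}: {steps:>4} steps/epoch, {values:,} values per batch")
```

Quadrupling the batch size cuts the steps per epoch by roughly four times while each step handles four times as much input, which is the trade-off described in this section.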

In addition, a bigger input sample requires more memory to execute each training step. Depending on the hardware configuration, the amount of memory required to execute the training step can drastically reduce the performance of the entire training process or even make it impossible to run on that hardware. Conversely, we can accelerate the training process by using hardware endowed with large memory resources. This is why we need to know the details of the hardware resources we use and the factors that influence the computational complexity of the training process.

We will dive into all of these issues throughout the book.

Operations

We already know each training step executes four training phases: forward, loss computation, optimization, and backward. In the forward phase, the neural network receives the input data and processes it according to the neural network’s architecture. Among other things, the architecture defines the network layers, where each layer has one or more operations that the network executes during the forward phase.

For example, a fully connected neural network (FCNN) usually executes general matrix-to-matrix multiplication operations, whereas convolutional neural networks (CNNs) execute specialized computer vision operations such as convolution, padding, and pooling. It turns out that computational complexity differs from one operation to another. So, depending on the network architecture and its operations, we can observe distinct performance behavior.

Nothing is better than an example, right? Let’s define a class to instantiate a traditional CNN model that is able to deal with the Fashion-MNIST dataset.

Important note

The complete code shown in this section is available here.

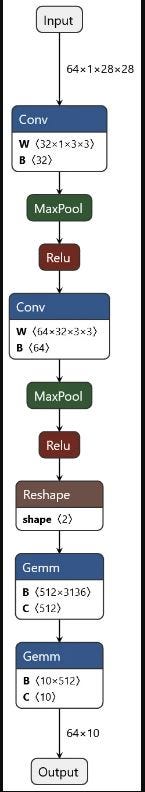

This model receives an input batch of size 64 x 1 x 28 x 28. This means the model receives 64 grayscale images (one channel), each with a height and width of 28 pixels. As a result, the model outputs a tensor of dimension 64 x 10, which represents, for each of the 64 images, the probability of belonging to each of the 10 categories of the Fashion-MNIST dataset.

The model has two convolutional and two fully connected layers. Each convolutional layer comprises one bidimensional convolution, the rectified linear unit (ReLU) activation function, and pooling. The first fully connected layer has 3,136 neurons connected to the 512 neurons of the second fully connected layer. The second layer is then connected to the 10 neurons of the output layer.
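Where does the figure of 3,136 come from? The excerpt shows the model code only as an image, so the sketch below assumes 3x3 convolutions with padding 1 (which keep the spatial size), 2x2 max pooling, and 64 output channels in the second convolution. Under those assumptions, two conv/pool blocks shrink each 28 x 28 image to 7 x 7, and 64 x 7 x 7 = 3,136. The helper names are hypothetical.

```python
def conv_out(size, kernel=3, padding=1, stride=1):
    # Standard output-size formula for a sliding window (convolution or pooling)
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window=2):
    # Non-overlapping max pooling: stride equals the window, no padding
    return conv_out(size, kernel=window, padding=0, stride=window)


size = 28
size = pool_out(conv_out(size))  # block 1: 28 -> 28 -> 14
size = pool_out(conv_out(size))  # block 2: 14 -> 14 -> 7

OUT_CHANNELS = 64                # assumed channel count of the second convolution
flattened = OUT_CHANNELS * size * size
print(size, flattened)           # 7 3136
```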

Important note

If you are unfamiliar with CNN models, it would be useful to watch the video What is a convolutional neural network (CNN)? from the Packt YouTube channel here.

By exporting this model to the ONNX format, we get the diagram illustrated in Figure 1.7:

Figure 1.7 – Operations of a CNN model

Important note

The Open Neural Network Exchange (ONNX) is an open standard for machine learning interoperability. Among other things, ONNX provides a standard format for exporting neural network models from many distinct frameworks and tools. We can use the ONNX file to inspect model details, import it into another framework, or execute the inference process.

By evaluating Figure 1.7, we can see five distinct operations:

Conv: Bidimensional convolution

MaxPool: Max pooling

Relu: Activation function (ReLU)

Reshape: Tensor dimensional transformation

Gemm: General matrix multiplication

So, under the hood, the neural network executes these operations in the forward phase. From a computing perspective, this is the set of real operations that the machine runs during each training step. Therefore, we can rethink the training process of this model in terms of its operations and write it as a simpler algorithm:

```
for each epoch:
    for each training step:
        result = conv(input)
        result = maxpool(result)
        result = relu(result)
        result = conv(result)
        result = maxpool(result)
        result = relu(result)
        result = reshape(result)
        result = gemm(result)
        result = gemm(result)
        loss = calculate_loss(result)
        gradient = optimization(loss)
        backwards(gradient)
```

As you can see, the training process is just a set of operations executed one after another. Regardless of the functions or classes used to define the model, this set of operations is what the machine actually runs.

It turns out that each operation has a particular computational complexity, thus requiring distinct levels of computing power and resources to be executed satisfactorily. As a result, each of these operations can present its own performance gains and bottlenecks. Similarly, some operations can be better suited to a given hardware architecture, as we will see throughout the book.

To see what this means in practice, we can check the percentage of time spent on each of these operations during the training step. So, let’s use PyTorch Profiler to get the percentage of CPU usage for each operation. The following list summarizes the CPU usage when running the forward phase of our CNN model with one input sample of the Fashion-MNIST dataset:

aten::mkldnn_convolution: 44.01%

aten::max_pool2d_with_indices: 30.01%

aten::addmm: 13.68%

aten::clamp_min: 6.96%

aten::convolution: 1.18%

aten::copy_: 0.70%

aten::relu: 0.59%

aten::_convolution: 0.49%

aten::empty: 0.35%

aten::_reshape_alias: 0.31%

aten::t: 0.31%

aten::conv2d: 0.24%

aten::as_strided_: 0.24%

aten::reshape: 0.21%

aten::linear: 0.21%

aten::max_pool2d: 0.17%

aten::expand: 0.14%

aten::transpose: 0.10%

aten::as_strided: 0.07%

aten::resolve_conj: 0.00%

Important note

ATen is a C++ library used by PyTorch to execute basic operations. You can find more information about this library here.

The results show the Conv operation (labeled here as aten::mkldnn_convolution) had the highest CPU usage (44%), followed by the MaxPool operation (aten::max_pool2d_with_indices), with 30% CPU usage. On the other hand, the ReLU (aten::relu) and Reshape (aten::reshape) operations each consumed less than 1% of the total CPU usage. Finally, the Gemm operation (aten::addmm) used around 14% of the CPU time.

From this simple profiling test, we can assert that the operations involved in the forward phase, and hence in the training process, have distinct levels of computational complexity. We can see the training process consumed many more CPU cycles when executing the Conv operation than the Gemm operation. Notice that our CNN model has two layers of each kind, so, in this example, both operations are executed the same number of times.
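To get a rough feel for why Conv can dominate, we can count multiply-accumulate operations (MACs) per image, assuming 3x3 kernels with padding 1, 32 and 64 filters, and pooling after each convolution (an assumed architecture consistent with the 3,136 flattened inputs, not confirmed by the excerpt). This back-of-the-envelope sketch ignores biases, ReLU, and pooling, and MAC counts do not translate directly into CPU time, since memory access patterns and kernel implementations (such as the MKL-DNN kernel seen in the profile) matter too.

```python
def conv_macs(out_size, in_ch, out_ch, kernel=3):
    # Every output value (out_size x out_size per output channel) needs
    # in_ch * kernel * kernel multiply-accumulates
    return out_size * out_size * out_ch * in_ch * kernel * kernel


def gemm_macs(in_features, out_features):
    # A fully connected layer is one matrix-vector product per image
    return in_features * out_features


conv_total = conv_macs(28, 1, 32) + conv_macs(14, 32, 64)
gemm_total = gemm_macs(3136, 512) + gemm_macs(512, 10)

print(f"Conv: {conv_total:,} MACs per image")
print(f"Gemm: {gemm_total:,} MACs per image")
print(f"Conv/Gemm ratio: {conv_total / gemm_total:.2f}")
```

Under these assumptions the convolutions need roughly 2.4 times as many MACs as the Gemm layers, which points in the same direction as the profile, even though the measured ratio also reflects implementation details.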

Based on this knowledge about the distinct computational burden of neural network operations, we can choose the best hardware architecture or software stack to reduce the execution time of the predominant operation of a given neural network. For example, suppose we need to train a CNN composed of dozens of convolutional layers. In that case, we will look for hardware resources endowed with special capabilities to execute Conv operations more efficiently. Even though the model has some fully connected layers, we already know that the Gemm operation can be less computationally intensive than Conv. This justifies prioritizing a hardware resource that is able to accelerate convolutional operations to train that model.

Parameters

Besides hyperparameters and operations, the neural network parameters are another factor that has a relevant influence on the computational cost of the training process. As we discussed earlier, the number and type of layers in the neural network configuration define the total number of parameters in the network.

Obviously, the higher the number of parameters, the higher the computational burden of the training process. These parameters comprise kernel values employed on convolutional operations, biases, and the weights of connections between neurons.

Our CNN model, with just 4 layers, has 1,630,090 parameters. We can easily count the total number of parameters in PyTorch by using this function:

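```python
import numpy as np

def count_parameters(model):
    # Collect all parameter tensors registered in the model
    parameters = list(model.parameters())
    # Sum the number of elements of every trainable tensor
    total_parms = sum(
        [np.prod(p.size()) for p in parameters if p.requires_grad])
    return total_parms
```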

If we add an extra fully connected layer with 256 neurons to our CNN model and rerun this function, we will get 1,758,858 parameters in total, representing an increase of nearly 8%.
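Both totals can be reproduced by hand under an assumed layer layout: 3x3 kernels with 32 and 64 filters for the two convolutions, and the extra 256-neuron layer placed between the 512-neuron layer and the output. With those choices the arithmetic matches the figures quoted above, which suggests (but does not prove) that it reflects the book's model. The helper functions are hypothetical.

```python
def conv_params(in_ch, out_ch, kernel=3):
    # One kernel x kernel filter per (input, output) channel pair, plus one bias per output channel
    return in_ch * out_ch * kernel * kernel + out_ch


def linear_params(in_features, out_features):
    # Full weight matrix plus one bias per output neuron
    return in_features * out_features + out_features


# Assumed layer sizes (hypothetical reconstruction)
convs = conv_params(1, 32) + conv_params(32, 64)
baseline = convs + linear_params(3136, 512) + linear_params(512, 10)
extended = (convs + linear_params(3136, 512)
            + linear_params(512, 256) + linear_params(256, 10))

growth = (extended - baseline) / baseline
print(f"{baseline:,} -> {extended:,} parameters (+{growth:.1%})")
```

Note how the 3,136 x 512 weight matrix of the first fully connected layer accounts for most of the parameters, far more than both convolutions combined.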

After training and testing this new CNN model, we got the same accuracy as before. Therefore, paying attention to the trade-off between network complexity and model accuracy is essential. On many occasions, increasing the number of layers and neurons will not necessarily result in better accuracy but will likely increase the training time.

Another aspect of parameters is the numeric precision used to represent these numbers in the model. …keep in mind that the number of bytes used to represent parameters makes a significant contribution to the time needed to train a model. So, not only the number of parameters but also the numeric precision chosen to represent them has an impact on training time.
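As a rough illustration of how precision scales storage, the following sketch computes the memory needed just to hold the 1,630,090 parameters of our CNN in several common floating-point formats. It counts only the parameters themselves; during training, gradients, optimizer state, and activations add considerably more, and whether fewer bytes translate into faster training depends on hardware support for each format.

```python
PARAMS = 1_630_090  # parameter count of the CNN model in this section

# Bytes used to store one value in common floating-point formats
BYTES_PER_VALUE = {"float64": 8, "float32": 4, "float16": 2, "bfloat16": 2}

sizes = {dtype: PARAMS * nbytes for dtype, nbytes in BYTES_PER_VALUE.items()}

for dtype, total in sizes.items():
    print(f"{dtype:>8}: {total:>10,} bytes ({total / 2**20:.1f} MiB)")
```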

You can buy Accelerate Model Training with PyTorch 2.X by Maicon Melo Alves, here. Packt library subscribers can continue reading the entire book for free here.

And that’s a wrap.

We have an entire range of newsletters with focused content for tech pros. Subscribe to the ones you find the most useful here. The complete PythonPro archives can be found here.

If you have any suggestions or feedback, or would like us to find you a Python learning resource on a particular subject, take the survey or leave a comment below!