PythonPro #32: Building LLM Powered Applications, MicroPython 1.23 Enhancements, and HarvardX AI Course

Bite-sized actionable content, practical tutorials, and resources for Python programmers and data scientists

Welcome to a brand new issue of PythonPro!

In today’s Expert Insight we bring you an excerpt from the recently published book, Building LLM Powered Applications, which briefly discusses the accessibility and flexibility of pre-trained LLMs and the methods available for customizing them to meet specific domain needs.

News Highlights: MicroPython 1.23 introduces custom USB device support for HID and MIDI devices; DuckDB enhances Python analytics with fast processing and integration for medium data sizes; and dlt-init-openapi automates data pipeline creation from OpenAPI specs, streamlining dataset setup.

Here are my top 5 picks from our learning resources today:

Free Course | HarvardX - CS50's Introduction to Artificial Intelligence with Python🤖

Comparing Node.js and Python Performance with the Official OpenAI Client🏁

Using Queues and Stacks in Python with Its collections Library📚

bytes - The Lesser-Known Python Built-In Sequence • And Understanding UTF-8 Encoding💾

Dive in, and let me know what you think about this issue in this month’s survey!

Stay awesome!

Divya Anne Selvaraj

Editor-in-Chief

P.S.: Thank you to everyone who participated in last month's survey, particularly those who shared their views on using low-cost LLMs for Python code generation. We've included a section in today's newsletter that briefly discusses the topic. This month's survey asks whether, based on the findings of a recent paper, deep learning models will entirely supplant the need for manual feature engineering. We invite you to share your opinions, provide feedback on PythonPro, and earn your one Packt credit for this month. Take the survey!

🐍 Python in the Tech 💻 Jungle 🌳

🗞️News

MicroPython 1.23 brings custom USB devices, OpenAMP, much more: The new version introduces exciting features like custom USB device support, allowing you to create HID devices, MIDI devices, and more using user-friendly libraries. Read to learn more about the latest capabilities.

DuckDB - In-Process Python Analytics for Not-Quite-Big Data: Highlighted at PyCon last week, DuckDB's latest enhancements include exceptional processing speeds and direct integration into Python applications, making it ideal for data sizes usually reserved for systems like Apache Spark. Read to learn more.

dlt OpenAPI Source Creator - dlt-init-openapi: The authors of the data load tool (dlt) have introduced dlt-init-openapi, a Python CLI tool that automates the creation of dlt data pipelines from OpenAPI specifications, reducing dataset creation time to mere minutes. Try their Colab demo to learn more.

💼Case Studies and Experiments🔬

Predictive Modeling for Agriculture - A DataCamp Project: This project demonstrates how to use machine learning to assist a farmer in selecting the best crop based on soil conditions. Read to learn how to apply feature selection and predictive modeling techniques.

Empowering Operations - Grafana Proxy Integration with Django: This article details the integration of Grafana dashboards with a Django application to manage differentiated access for internal and external users. Read to learn how to ensure seamless and secure operations.

📊Analysis

Comparing Node.js and Python Performance with the Official OpenAI Client: In a performance comparison using the OpenAI client for high-volume API requests, Node.js significantly outperformed Python. Read for insights into the advantages of Node.js over Python.

Do I not like Ruby anymore?: Initially skeptical of Python, the author of this article, a long-time Ruby enthusiast, has grown to appreciate Python's features such as type hints, match statements, and its function-based syntax. Read to gain insight into how the evolution of programming languages influences developer preferences.

🎓 Tutorials and Guides 🤓

👩🏫Free Course | HarvardX - CS50's Introduction to Artificial Intelligence with Python: This 7-week, self-paced introductory course explores the foundational concepts and algorithms used in technologies such as game engines and machine translation. Read to learn more.

What Are CRUD Operations?: This guide elaborates on CRUD operations in SQL, demonstrates their execution using Python's SQLAlchemy and FastAPI, and correlates them with HTTP request methods in REST APIs. Read to learn how to implement CRUD applications.
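As a rough illustration of how the four CRUD operations map onto SQL statements (and, by common REST convention, onto HTTP methods), here is a minimal sketch. It swaps in Python's built-in sqlite3 module for the SQLAlchemy and FastAPI stack the guide uses, and the `books` table and helper function names are hypothetical.

```python
import sqlite3
from typing import Optional


def connect() -> sqlite3.Connection:
    """Open an in-memory database with a hypothetical `books` table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE books (id INTEGER PRIMARY KEY, title TEXT NOT NULL)")
    return conn


def create_book(conn: sqlite3.Connection, title: str) -> int:
    """Create -> INSERT (conventionally exposed as HTTP POST)."""
    cur = conn.execute("INSERT INTO books (title) VALUES (?)", (title,))
    conn.commit()
    return cur.lastrowid


def read_book(conn: sqlite3.Connection, book_id: int) -> Optional[str]:
    """Read -> SELECT (conventionally HTTP GET). Returns None if the row is absent."""
    row = conn.execute("SELECT title FROM books WHERE id = ?", (book_id,)).fetchone()
    return row[0] if row else None


def update_book(conn: sqlite3.Connection, book_id: int, title: str) -> None:
    """Update -> UPDATE (conventionally HTTP PUT or PATCH)."""
    conn.execute("UPDATE books SET title = ? WHERE id = ?", (title, book_id))
    conn.commit()


def delete_book(conn: sqlite3.Connection, book_id: int) -> None:
    """Delete -> DELETE (conventionally HTTP DELETE)."""
    conn.execute("DELETE FROM books WHERE id = ?", (book_id,))
    conn.commit()


if __name__ == "__main__":
    conn = connect()
    book_id = create_book(conn, "Think Python")
    update_book(conn, book_id, "Think Python, 3rd edition")
    print(read_book(conn, book_id))  # Think Python, 3rd edition
    delete_book(conn, book_id)
    print(read_book(conn, book_id))  # None
    conn.close()
```

The `?` placeholders pass values separately from the SQL text, which keeps user input out of the query string.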

📖Open Access Book | Think Python, 3rd edition: This book is an introductory guide to Python programming, presented in an interactive format using Jupyter notebooks to help beginners and those who have struggled with programming learn effectively. Read to dive in.

Mapping World UNHCR Refugee Data With Python Streamlit And GPT-4: This tutorial explains how to create dynamic web applications to visualize refugee movements using Plotly for mapping and Streamlit for web integration. Read to learn how to build interactive, web-based data visualizations.

Python Notebooks for Fundamentals of Music Processing (FMP): This collection of notebooks provides educational material on FMP, integrating theory with Python code examples for teaching and researching Music Information Retrieval (MIR). Read to learn FMP through interactive Jupyter notebooks.

Equality versus identity in Python: Equality checks whether two objects have the same value, while identity checks whether two names refer to the exact same object. Read to learn the conceptual differences between checking for equality and identity in Python.
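A short sketch of the distinction, using lists because they make the difference easy to observe: `==` compares values, while `is` compares object identity. The variable names are arbitrary.

```python
a = [1, 2, 3]
b = [1, 2, 3]  # a separate list that happens to hold equal contents
c = a          # a second name bound to the very same list object

print(a == b)  # True: equal values
print(a is b)  # False: two distinct objects
print(a is c)  # True: c and a refer to one object

c.append(4)
print(a)       # [1, 2, 3, 4]: mutating through c is visible through a

# The idiomatic use of `is` is comparing against singletons such as None.
x = None
print(x is None)  # True
```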

Signal Noise Removal Autoencoder with Keras: This article introduces a Keras-based autoencoder for noise removal in signal processing. Read to learn how to implement an autoencoder in Keras to enhance data quality in various signal processing applications.

🔑Best Practices, Advice, and Code Optimization🔏

bytes - The Lesser-Known Python Built-In Sequence • And Understanding UTF-8 Encoding: This article explains bytes as immutable sequences of integers (0-255) used for encoding text data in Python, and how strings can be converted to bytes using ASCII and UTF-8 encoding methods. Read to be able to handle modern and international texts.
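A minimal sketch of the points above using only the built-in `str` and `bytes` types. The example word is arbitrary; it is written with an escape so that `é` is unambiguously the single code point U+00E9.

```python
text = "caf\u00e9"  # "café", with é stored as the single code point U+00E9

ascii_bytes = "cafe".encode("ascii")
utf8_bytes = text.encode("utf-8")

print(ascii_bytes, list(ascii_bytes))  # b'cafe' [99, 97, 102, 101]
print(len(text), len(utf8_bytes))      # 4 5: é takes two bytes in UTF-8
print(utf8_bytes)                      # b'caf\xc3\xa9'

# bytes are immutable, just like str
try:
    utf8_bytes[0] = 67
except TypeError:
    print("bytes objects cannot be modified in place")

# ASCII only covers code points 0-127, so strict encoding of é fails
try:
    text.encode("ascii")
except UnicodeEncodeError:
    print("not representable in ASCII")

print(utf8_bytes.decode("utf-8") == text)  # True: decoding reverses encoding
```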

Python: Libraries you should use — Part-1: This article introduces underutilized Python libraries enhancing coding efficiency and error handling, like 'result' for parallel path programming and clearer error management, and 'loguru' for simplified logging. Read to learn more.

Using Queues and Stacks in Python with Its collections Library: This article discusses the benefits of using Python's built-in collections library, particularly the deque object, for implementing efficient queues and stacks. Read to learn about the practical benefits of using deque for queue and stack operations in Python.
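A minimal sketch of both structures with `collections.deque`. Appending and popping at either end of a deque is O(1), whereas `list.pop(0)` has to shift every remaining element, which is the usual argument for preferring a deque for queues.

```python
from collections import deque

# Queue (FIFO): add on the right, remove from the left.
queue = deque()
for job in ("first", "second", "third"):
    queue.append(job)
print(queue.popleft())  # first

# Stack (LIFO): add and remove on the same (right) end.
stack = deque()
for n in (1, 2, 3):
    stack.append(n)
print(stack.pop())  # 3
```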

Can I still be a data engineer if I don’t know Python?: The author, a self-taught data engineer, reflects on her career predominantly using SQL over Python, emphasizing the feasibility of excelling in data engineering with a focus on SQL. Read to gain insight into the flexible nature of data engineering roles.

Object(Instance) and Class Attributes in Python: This article explains the differences between class attributes and instance attributes in Python, including how they are created, managed, and their respective advantages and disadvantages. Read to enhance your ability to write effective and efficient object-oriented code.
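A minimal sketch of the difference, with hypothetical `Counter` and `SharedBag` classes: a class attribute lives once on the class and is shared, while instance attributes are stored per object. The second class shows the common pitfall of a mutable class attribute.

```python
class Counter:
    created = 0  # class attribute: a single value shared by every instance

    def __init__(self, name: str) -> None:
        self.name = name  # instance attributes: stored separately on each object
        self.count = 0
        Counter.created += 1  # update the shared value through the class


a = Counter("a")
b = Counter("b")
a.count += 1
print(a.count, b.count)            # 1 0
print(Counter.created, a.created)  # 2 2 (a.created is found on the class)

# Assigning through an instance creates an instance attribute that shadows the class one.
a.created = 99
print(a.created, b.created, Counter.created)  # 99 2 2


class SharedBag:
    items = []  # pitfall: one mutable list shared by all instances

    def add(self, item: str) -> None:
        self.items.append(item)  # mutates the shared class-level list


x, y = SharedBag(), SharedBag()
x.add("apple")
print(y.items)  # ['apple'], because x and y see the same list
```

Creating the list in `__init__` (`self.items = []`) gives each instance its own copy instead.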

🤖Low-Cost Language Models: Making Python Coding Easier?💻

In last month’s survey, we invited you to read the paper titled “Low-Cost Language Models: Survey and Performance Evaluation on Python Code Generation” by Espejel et al. and share your opinion on the impact of using such models in your workflow. Let’s take a quick look at the main points of the paper and what you had to say.

According to Espejel et al., you no longer need high-end hardware to generate Python code effectively with AI. The study investigated the potential of simpler models that perform well on standard computers, bypassing the need for specialized hardware like GPUs.

Key Findings

CPU-friendly models are now as effective as advanced chatbot models like ChatGPT-3.5, ChatGPT-4, and Gemini, which require extensive GPU resources.

Models like dolphin-2.6-mistral-7b excel at generating Python code but often fail to match the specified output formats, leading to penalties despite correct code.

In contrast, models like mistral show consistent performance in understanding prompts and producing accurate code, achieving better results on the authors' own dataset but performing less well on the HumanEval and EvalPlus datasets.

The llama.cpp project has successfully demonstrated that sophisticated language models can run on standard computers, marking significant progress from just a few months ago.

What This Means

This advancement means that more individuals and small businesses can access sophisticated AI tools without significant financial outlays. It democratizes the use of these technologies, enabling broader experimentation and integration in various projects.

Examining the Details

The study used 60 programming problems of varying difficulty, alongside two established benchmark datasets, HumanEval and EvalPlus. The paper noted that while models run locally through llama.cpp approach the effectiveness of their more costly counterparts, they still struggle with intricate tasks. The researchers also applied Chain-of-Thought (CoT) prompting to better align the models' outputs with user needs.

What you said

Here is what some of you had to say on the subject of integrating LLMs into your workflow:

Ali Saleh: “I think it helps with boilerplate code and writing unit tests but I don't trust it enough to use it for anything more serious. The hallucinations are just too annoying and it (the LLM) cannot reason about its output or debug it properly. I'd use it cautiously.

I've been using copilot for some time. It's good for simple boilerplate automation but I don't trust it with anything more serious since it had suggested wrong or buggy code way too many times and I need to make sure the code does what I think it does.”

Gordon Margulieux: “LLMs may be helpful in screening for particular issues, but unless (you want) to ask the AI to check for something specific, it probably will miss the issue. However, LLMs will force the programmer to better define their requirements up front.”

Camilo Munoz: “I see great potential in code generation. … (I feel it) can speed up my performance.”

Anonymous: “I use multiple LLMs daily for both development and personal use. They're great tools, but it's also bringing to light how lax some people are with their secops, which is super scary.”

It is clear that while you appreciate low-cost LLMs for generating boilerplate code and writing unit tests in a professional setting, you remain cautious about using them for more complex tasks because of inaccuracies and their inability to debug their own output. You also noted the potential for significant productivity gains and cautioned against security risks, underscoring the need to apply low-cost LLMs carefully and with awareness of their limitations in critical coding scenarios.

Thank you all. We have sent one additional Packt credit to each of these four respondents for sharing their valuable thoughts with us. Do join the conversation in this month’s survey.

🧠 Expert insight 📚

Here’s an excerpt from “Chapter 1: Introduction to Large Language Models” in the book, Building LLM Powered Applications by Valentina Alto, published in May 2024.

Base models versus customized models

The nice thing about LLMs is that they come already trained and ready to use. As we saw in the previous section, training an LLM requires a great investment in hardware (GPUs or TPUs) and might last for months, and these two factors might mean it is not feasible for individuals and small businesses.

Luckily, pre-trained LLMs are generalized enough to be applicable to various tasks, so they can be consumed without further tuning directly via their REST API (we will dive deeper into model consumption in the next chapters).

Nevertheless, there might be scenarios where a general-purpose LLM is not enough, since it lacks domain-specific knowledge or doesn’t conform to a particular style and taxonomy of communication. If this is the case, you might want to customize your model.

How to customize your model

There are three main ways to customize your model:

Extending non-parametric knowledge: This allows the model to access external sources of information to integrate its parametric knowledge while responding to the user’s query.

Definition: LLMs exhibit two types of knowledge: parametric and non-parametric. The parametric knowledge is the one embedded in the LLM’s parameters, deriving from the unlabeled text corpora during the training phase. On the other hand, non-parametric knowledge is the one we can “attach” to the model via embedded documentation. Non-parametric knowledge doesn’t change the structure of the model, but rather, allows it to navigate through external documentation to be used as relevant context to answer the user’s query.

This might involve connecting the model to web sources (like Wikipedia) or internal documentation with domain-specific knowledge. The connection of the LLM to external sources is called a plug-in, and we will be discussing it more deeply in the hands-on section of this book.

Few-shot learning: In this type of model customization, the LLM is given a metaprompt with a small number of examples (typically between 3 and 5) of each new task it is asked to perform. The model must use its prior knowledge to generalize from these examples to perform the task.

Definition: A metaprompt is a message or instruction that can be used to improve the performance of LLMs on new tasks with a few examples.

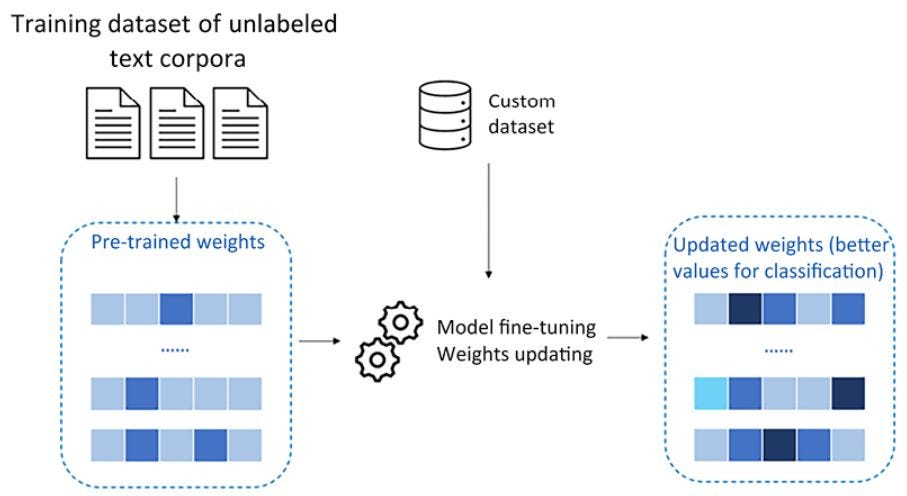

Fine tuning: The fine-tuning process involves using smaller, task-specific datasets to customize the foundation models for particular applications.

This approach differs from the first two because, with fine-tuning, the parameters of the pre-trained model are altered and optimized toward the specific task. This is done by training the model on a smaller labeled dataset that is specific to the new task. The key idea behind fine-tuning is to leverage the knowledge learned from the pre-trained model and fine-tune it to the new task, rather than training a model from scratch.

Figure 1.12: Illustration of the process of fine-tuning

In the preceding figure, you can see a schematic of how fine-tuning works on OpenAI’s pre-built models. The idea is that you start from a pre-trained model with general-purpose weights or parameters. Then, you feed your model with custom data, typically in the form of “key-value” prompts and completions:

{"prompt": "<prompt text>", "completion": "<ideal generated text>"}
{"prompt": "<prompt text>", "completion": "<ideal generated text>"}
{"prompt": "<prompt text>", "completion": "<ideal generated text>"}
...

Once the training is done, you will have a customized model that is particularly performant for a given task, for example, the classification of your company’s documentation.

The nice thing about fine-tuning is that you can tailor pre-built models to your use cases without retraining them from scratch, using smaller training datasets and hence less training time and compute. At the same time, the model keeps the generative power and accuracy it learned during its original training on the massive dataset.
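To make the prompt/completion layout above concrete, here is a minimal sketch that serializes training examples to JSON Lines with the standard `json` module. The example records and helper names are hypothetical, and the sketch stops at preparing the data: uploading the file and launching the training job depend on the provider's API. Note that this two-field layout is the one the excerpt describes; newer chat-model fine-tuning endpoints, including OpenAI's, generally expect a message-based format instead, so check the current documentation before reusing it.

```python
import json

# Hypothetical examples for a document-classification task, one dict per training pair.
examples = [
    {"prompt": "Invoice 1042 from ACME for 12 laptops", "completion": "finance"},
    {"prompt": "Updated onboarding checklist for new hires", "completion": "hr"},
    {"prompt": "Incident report: VPN outage in the Berlin office", "completion": "it"},
]


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines: one JSON object per line, no blank lines."""
    return "".join(json.dumps(record, ensure_ascii=False) + "\n" for record in records)


def parse_jsonl(text: str) -> list[dict]:
    """Parse JSON Lines back into dicts, skipping any empty lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def write_jsonl(path: str, records: list[dict]) -> None:
    """Write the training file that would later be uploaded for fine-tuning."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(to_jsonl(records))


print(to_jsonl(examples), end="")
```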

In Chapter 11, Fine-Tuning Large Language Models, we will focus on fine-tuning your model in Python so that you can test it for your own task.
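The few-shot option from the list of customization approaches in this excerpt can also be sketched in a few lines. The helper and the sentiment examples below are hypothetical: the function only assembles the metaprompt text (an instruction, a handful of labelled examples, then the new input), and the resulting string would be sent to whichever LLM you use. No model parameters change, which is the key contrast with fine-tuning.

```python
# Hypothetical labelled examples; the excerpt suggests roughly 3 to 5 per task.
EXAMPLES = [
    ("The delivery arrived two days late.", "negative"),
    ("Setup took five minutes and everything worked.", "positive"),
    ("The manual is fine, nothing special.", "neutral"),
]


def build_few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble a metaprompt: instruction, worked examples, then the new input."""
    parts = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Text: {text}\nLabel: {label}\n")
    # Leave the final label empty so the model completes it.
    parts.append(f"Text: {query}\nLabel:")
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each text as positive, negative, or neutral.",
    EXAMPLES,
    "The battery lasts all day.",
)
print(prompt)
```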

You can read the entire first chapter for free and buy Building LLM Powered Applications by Valentina Alto, here. Packt library subscribers can continue reading the entire book for free here.

And that’s a wrap.

We have an entire range of newsletters with focused content for tech pros. Subscribe to the ones you find the most useful here. The complete PythonPro archives can be found here.

If you have any suggestions or feedback, or would like us to find you a Python learning resource on a particular subject, take the survey or leave a comment below!