PythonPro #51: Python 3.13 REPL Enhancements, Python 3.12 vs. 3.13, and Visualizing Named Entities in Text

Welcome to a brand new issue of PythonPro!

In today’s Expert Insight, we bring you an excerpt from the recently published book, Python Natural Language Processing Cookbook - Second Edition, which explains how to use displaCy, which is part of the spaCy package, to visualize named entities in text.

News Highlights: PEP 762 in Python 3.13 adds multi-line editing, syntax highlighting, and custom commands to the REPL, and Pyinstrument 5 introduces a flamegraph timeline view for better code execution visualization.

Here are my top 5 picks from our learning resources today:

Exploring Infrastructure as Code (IaC) with Python: AWS CDK, Terraform CDK, and Pulumi🏗️

lintsampler: a new way to quickly get random samples from any distribution🎲

And today’s Featured Study presents a method that uses LLMs to generate precise, transparent code transformations, improving accuracy and efficiency for compiler optimizations and legacy refactoring.

Stay awesome!

Divya Anne Selvaraj

Editor-in-Chief

P.S.: This month's survey is still live. Do take the opportunity to leave us your feedback, request a learning resource, and earn your one Packt credit for this month.

🐍 Python in the Tech 💻 Jungle 🌳

🗞️News

PEP 762 – REPL-acing the default REPL: As of Python 3.13, the default REPL has been replaced with a Python-based version (PEP 762), offering modern features like multi-line editing, syntax highlighting, and custom commands.

Pyinstrument 5 - Flamegraphs for Python: The latest version of this statistical Python profiler adds a flamegraph-style timeline view for visualizing code execution, improves on the previous timeline modes, and more.

💼Case Studies and Experiments🔬

Moving all our Python code to a monorepo: pytendi: Describes the migration of Attendi’s Python codebase into a monorepo using the Polylith architecture to improve code discoverability, reusability, and developer experience.

How Maintainable is Proficient Code? A Case Study of Three PyPI Libraries: Aims to help you recognize when proficient coding might hinder future maintenance efforts.

📊Analysis

In the Making of Python Fitter and Faster: Provides insights into how Python's evolving interpreter architecture enhances execution speed, memory efficiency, and overall performance for modern applications.

Python 3.12 vs Python 3.13 – performance testing: Tests on AMD Ryzen 7000 and Intel 13th-gen processors show that Python 3.13 is generally faster, especially in asynchronous tasks, though it is slower in certain areas.
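The article's own benchmark suite isn't reproduced here. If you want a quick local comparison, one option is to run the same script under both interpreters (for example, python3.12 bench.py and python3.13 bench.py) and compare the printed timings. The sketch below is hypothetical: the workloads and function names are illustrative, and micro-benchmarks like these only hint at real-world performance.

```python
import asyncio
import sys
import timeit


def cpu_bound(n: int = 10_000) -> int:
    # Pure-Python arithmetic loop: exercises the interpreter's bytecode dispatch.
    total = 0
    for i in range(n):
        total += i * i
    return total


async def _tiny_task(i: int) -> int:
    # Yield to the event loop once, then return.
    await asyncio.sleep(0)
    return i


async def _gather_tasks(n: int) -> int:
    results = await asyncio.gather(*(_tiny_task(i) for i in range(n)))
    return len(results)


def async_bound(n: int = 1_000) -> int:
    # Create and schedule many small coroutines on a fresh event loop.
    return asyncio.run(_gather_tasks(n))


def run_benchmarks(repeat: int = 5, number: int = 10) -> dict[str, float]:
    # Best-of-`repeat` wall-clock seconds for `number` calls of each workload.
    workloads = {"cpu_bound": cpu_bound, "async_bound": async_bound}
    return {
        name: min(timeit.repeat(fn, repeat=repeat, number=number))
        for name, fn in workloads.items()
    }


if __name__ == "__main__":
    print(sys.version)
    for name, seconds in run_benchmarks().items():
        print(f"{name}: {seconds:.4f} s")
```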

🎓 Tutorials and Guides 🤓

Build a Contact Book App With Python, Textual, and SQLite: Covers creating the app’s text-based interface (TUI), setting up a SQLite database for contact storage, and integrating both elements.
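The tutorial builds its storage layer around its own schema and classes, which aren't reproduced here. As a minimal sketch of just the database part, assuming a hypothetical contacts table with name, phone, and email columns, the standard-library sqlite3 module covers creating the table, inserting rows with parameterized queries, and reading them back:

```python
import sqlite3


def create_database(path: str = ":memory:") -> sqlite3.Connection:
    # Open (or create) the database file and make sure the table exists.
    connection = sqlite3.connect(path)
    connection.execute(
        """
        CREATE TABLE IF NOT EXISTS contacts (
            id INTEGER PRIMARY KEY,
            name TEXT NOT NULL,
            phone TEXT,
            email TEXT
        )
        """
    )
    return connection


def add_contact(connection: sqlite3.Connection, name: str, phone: str, email: str) -> int:
    # The ? placeholders let sqlite3 substitute values safely (no string formatting).
    with connection:  # commits on success, rolls back if an exception escapes
        cursor = connection.execute(
            "INSERT INTO contacts (name, phone, email) VALUES (?, ?, ?)",
            (name, phone, email),
        )
    return cursor.lastrowid


def all_contacts(connection: sqlite3.Connection) -> list[tuple[str, str, str]]:
    return connection.execute(
        "SELECT name, phone, email FROM contacts ORDER BY name"
    ).fetchall()
```

A Textual front end would call functions like these from its event handlers; keeping the SQL in one small module makes the TUI code easier to test.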

Syntactic Sugar: Why Python Is Sweet and Pythonic: Covers various Pythonic constructs like operators, assignment expressions, loops, comprehensions, and decorators, and shows how they simplify code.
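As a small illustration of this kind of rewrite (the example is not taken from the article), here is the same filtering task written as an explicit loop and as a list comprehension with an assignment expression:

```python
import re

LINES = ["id=7", "noise", "id=42", "id=x"]
PATTERN = re.compile(r"id=(\d+)")


def ids_with_loop(lines: list[str]) -> list[int]:
    # Explicit loop: search, test, then append.
    ids = []
    for line in lines:
        match = PATTERN.fullmatch(line)
        if match:
            ids.append(int(match.group(1)))
    return ids


def ids_with_sugar(lines: list[str]) -> list[int]:
    # Comprehension plus an assignment expression (:=, Python 3.8+):
    # the match is computed once, tested, and reused in the same expression.
    return [int(m.group(1)) for line in lines if (m := PATTERN.fullmatch(line))]
```

Both functions return [7, 42] for LINES; the second simply states the same logic in one expression.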

The Ultimate Guide to Error Handling in Python: Provides a comprehensive guide to Python error handling, exploring common patterns like "Look Before You Leap" (LBYL) and "Easier to Ask Forgiveness than Permission" (EAFP).
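A minimal sketch of the two styles, using a hypothetical settings dictionary rather than an example from the guide:

```python
def get_port_lbyl(settings: dict[str, str], default: int = 8080) -> int:
    # LBYL: check every precondition before acting.
    value = settings.get("port")
    if value is not None and value.isdigit():
        return int(value)
    return default


def get_port_eafp(settings: dict[str, str], default: int = 8080) -> int:
    # EAFP: just try the operation and handle only the failures you expect.
    try:
        return int(settings["port"])
    except (KeyError, ValueError):
        return default
```

The two agree on ordinary input, but not everywhere: for {"port": " 80"}, the LBYL version falls back to 8080 because str.isdigit() rejects the space, while int() accepts surrounding whitespace and the EAFP version returns 80. Hand-written precondition checks can drift from what the operation actually accepts, which is one common argument for EAFP.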

Exploring Infrastructure as Code (IaC) with Python: AWS CDK, Terraform CDK, and Pulumi: Explains how Python integrates with IaC tools to automate cloud infrastructure management.

Web scraping of a dynamic website using Python with HTTP Client: Walks you through analyzing sites with JavaScript-rendered content and using the Crawlee framework to extract data in JSON format.

lintsampler: a new way to quickly get random samples from any distribution: Introduces a Python package designed to easily and efficiently generate random samples from any probability distribution.

Mastering Probability with Python: A Step-by-Step Guide with Simulations: Through examples like coin tosses, dice rolls, and event probabilities, this tutorial shows you how to simulate and analyze real-world scenarios.
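The tutorial's own code isn't reproduced here. As a minimal sketch of the same idea (the function names are hypothetical), a Monte Carlo simulation estimates a probability as the fraction of trials in which the event occurs:

```python
import random
from collections import Counter


def simulate_coin(n: int, rng: random.Random) -> float:
    # Fraction of heads in n fair coin tosses.
    heads = sum(rng.random() < 0.5 for _ in range(n))
    return heads / n


def simulate_two_dice(n: int, rng: random.Random) -> dict[int, float]:
    # Empirical distribution of the sum of two fair six-sided dice.
    counts = Counter(rng.randint(1, 6) + rng.randint(1, 6) for _ in range(n))
    return {total: counts[total] / n for total in range(2, 13)}


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so runs are reproducible
    print(simulate_coin(100_000, rng))         # close to 0.5
    print(simulate_two_dice(100_000, rng)[7])  # close to 6/36, about 0.167
```

Passing an explicit random.Random instance, rather than using the module-level functions, keeps each simulation reproducible and independent of other code that draws random numbers.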

🔑Best Practices and Advice🔏

What's In A List—Yes, But What's *Really* In A List: Explains common pitfalls when multiplying lists and why it matters when working with mutable versus immutable data types.
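A short demonstration of the pitfall (the snippet is illustrative, not taken from the article):

```python
# Multiplying a list copies references, not objects.
grid = [[0] * 3] * 3   # three references to ONE inner list
grid[0][0] = 1
print(grid)            # [[1, 0, 0], [1, 0, 0], [1, 0, 0]]

# Build a new inner list per row instead.
safe_grid = [[0] * 3 for _ in range(3)]
safe_grid[0][0] = 1
print(safe_grid)       # [[1, 0, 0], [0, 0, 0], [0, 0, 0]]

# With immutable elements the sharing is harmless: assigning to an index
# replaces that slot's reference instead of mutating a shared object.
row = [0] * 3
row[0] = 1
print(row)             # [1, 0, 0]
```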

Yes, you need to duplicate your frontend business logic on the server: Explains why backend validation is essential to protect data integrity, regardless of frontend sophistication.

Python and SysV shared memory: Explains how to wrap C functions like shmget, shmat, and shmctl for shared memory management, handle void pointers, and perform basic operations like writing to shared memory.

Gradient-Boosting anything (alert: high performance): Explores using Gradient Boosting with various machine learning models, adapting LSBoost in the Python package mlsauce for both regression and classification tasks.
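For the SysV shared memory item above, here is a minimal sketch of the same idea. It assumes Linux or macOS, uses Python's ctypes module (the article may take a different approach), and hard-codes the IPC_* constant values from sys/ipc.h, which you should confirm for your platform. The roundtrip helper is hypothetical:

```python
import ctypes
import os

# Assumed values from <sys/ipc.h>; these match Linux and macOS, but check your platform.
IPC_PRIVATE = 0
IPC_CREAT = 0o1000
IPC_RMID = 0

# CDLL(None) exposes symbols already loaded into the process, which include libc's.
libc = ctypes.CDLL(None, use_errno=True)

# Declare the C signatures so ctypes converts arguments and results correctly.
libc.shmget.argtypes = [ctypes.c_int, ctypes.c_size_t, ctypes.c_int]
libc.shmget.restype = ctypes.c_int
libc.shmat.argtypes = [ctypes.c_int, ctypes.c_void_p, ctypes.c_int]
libc.shmat.restype = ctypes.c_void_p  # void * arrives as a Python int (None for NULL)
libc.shmdt.argtypes = [ctypes.c_void_p]
libc.shmdt.restype = ctypes.c_int
libc.shmctl.argtypes = [ctypes.c_int, ctypes.c_int, ctypes.c_void_p]
libc.shmctl.restype = ctypes.c_int

# shmat signals failure with (void *) -1, i.e. all bits set when read back as unsigned.
SHMAT_FAILED = (1 << (8 * ctypes.sizeof(ctypes.c_void_p))) - 1


def _raise_errno() -> None:
    err = ctypes.get_errno()
    raise OSError(err, os.strerror(err))


def roundtrip(data: bytes) -> bytes:
    """Create a private segment, write `data` into it, read it back, then clean up."""
    shmid = libc.shmget(IPC_PRIVATE, len(data), IPC_CREAT | 0o600)
    if shmid == -1:
        _raise_errno()
    try:
        addr = libc.shmat(shmid, None, 0)
        if addr is None or addr == SHMAT_FAILED:
            _raise_errno()
        try:
            ctypes.memmove(addr, data, len(data))     # write through the void pointer
            return ctypes.string_at(addr, len(data))  # copy the bytes back out
        finally:
            libc.shmdt(addr)  # detach the segment from this process
    finally:
        libc.shmctl(shmid, IPC_RMID, None)  # mark the segment for removal
```

Declaring argtypes and restype up front is the key step: without restype = c_void_p, ctypes would assume shmat returns a C int and could truncate a 64-bit address.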

Code Generation with ChatGPT o1-preview as a Story of Human-AI Collaboration: Through experiments in Python and C++, the author demonstrates that human-AI collaboration improves code generation, specifically in building sentiment analysis tools.

🔍Featured Study: Don't Transform the Code, Code the Transforms💥

In "Don't Transform the Code, Code the Transforms: Towards Precise Code Rewriting using LLMs," Cummins et al., researchers from Meta, introduce a novel method called Code the Transforms (CTT), which uses LLMs to generate precise code transformations rather than rewrite code directly.

Context

Code transformation refers to rewriting or optimising existing code, a task essential for compiler optimisations, legacy code refactoring, or performance improvements. Traditional rule-based approaches to code transformations are difficult to implement and maintain. LLMs offer the potential to automate this process, but direct code rewriting by LLMs lacks precision and is challenging to debug. This study introduces the CTT method, where LLMs generate the transformation logic, making the process more transparent and adaptable.

Key Features of the CTT Method

Chain-of-thought process: Rather than rewriting code directly, the method synthesises code transformations by iterating through input/output examples to build precise transformation logic.

Improved transparency and adaptability: The generated transformations are explicit, making them easier to inspect, debug, and modify when necessary.

Higher precision: The method achieved perfect precision in 7 out of 16 Python code transformations, significantly outperforming traditional direct rewriting approaches.

Reduced computational costs: By generating transformation logic instead of rewriting code, the method requires less compute and review effort compared to direct LLM rewriting.

Iterative feedback loop: The method incorporates execution and feedback to ensure the generated transformations work as expected, leading to more reliable outcomes.

What This Means for You

This study is particularly beneficial for software engineers, developers, and those working on compiler optimisations or legacy code refactoring. By using this method, teams can reduce the time spent on manual code review and debugging, while improving the precision of code transformations.

Examining the Details

The study tested 16 different Python code transformations, ranging from simple operations like constant folding to more complex ones such as converting dot products to PyTorch API calls. The CTT method achieved an overall F1 score of 0.97, compared with 0.75 for the direct rewriting method. Its precision ranged from 93% to 100%, with tasks like dead code elimination and redundant function elimination reaching near-perfect performance. In contrast, direct LLM rewriting averaged 60% precision and made more frequent errors that required manual correction.
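To make "code the transforms" concrete: instead of emitting rewritten code, the model's output is itself a transformation program that can be read, tested, and rerun. The sketch below is hand-written, not LLM-generated, and is not the paper's code; it shows what a constant-folding transform might look like using Python's standard ast module, plus a check against input/output examples in the spirit of the paper's feedback loop. The names ConstantFolder, apply_transform, and check_against_examples are hypothetical.

```python
import ast
import operator

# The transform folds only these operators on int/float literals: a small, explicit rule set.
_FOLDABLE = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
}


class ConstantFolder(ast.NodeTransformer):
    """Replace `<number> <op> <number>` expressions with their computed value."""

    def visit_BinOp(self, node: ast.BinOp) -> ast.AST:
        # Fold children first so nested expressions collapse bottom-up.
        self.generic_visit(node)
        fold = _FOLDABLE.get(type(node.op))
        if (
            fold is not None
            and isinstance(node.left, ast.Constant)
            and isinstance(node.right, ast.Constant)
            and type(node.left.value) in (int, float)
            and type(node.right.value) in (int, float)
        ):
            folded = ast.Constant(value=fold(node.left.value, node.right.value))
            return ast.copy_location(folded, node)
        return node


def apply_transform(source: str) -> str:
    """Parse, transform, and unparse a snippet (ast.unparse needs Python 3.9+)."""
    tree = ConstantFolder().visit(ast.parse(source))
    return ast.unparse(ast.fix_missing_locations(tree))


def check_against_examples(examples: list[tuple[str, str]]) -> list[str]:
    """Feedback step: return every input whose output differs from the expected rewrite."""
    return [src for src, expected in examples if apply_transform(src) != expected]


if __name__ == "__main__":
    examples = [
        ("x = 2 * 3 + y", "x = 6 + y"),
        ("z = (1 + 2) * 4", "z = 12"),
        ("w = 'a' + 'b'", "w = 'a' + 'b'"),  # strings are deliberately left alone
    ]
    print(check_against_examples(examples))  # an empty list means every example passed
```

Because the rule set is explicit, a reviewer can see exactly what the transform will and will not touch, which is the transparency argument the study makes for CTT over direct rewriting.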

You can learn more by reading the entire paper.

🧠 Expert insight💥

Here’s an excerpt from “Chapter 7: Visualizing Text Data” in the book, Python Natural Language Processing Cookbook - Second Edition by Zhenya Antić and Saurabh Chakravarty, published in September 2024.

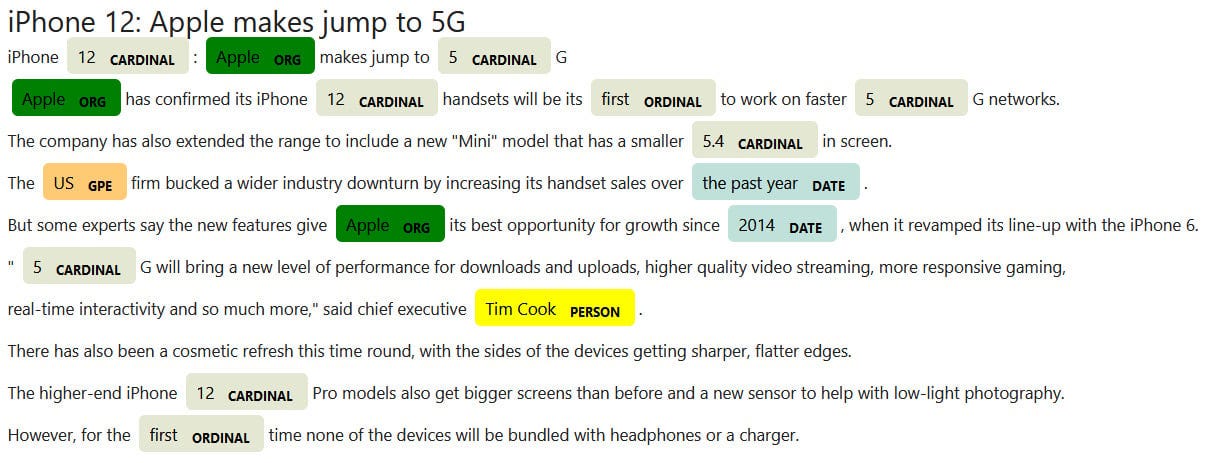

Visualizing NER

Named entity recognition, or NER, is a very useful tool for quickly finding people, organizations, locations, and other entities in texts. To visualize them better, we can use the displaCy package to create compelling, easy-to-read images.

After working through this recipe, you will be able to create visualizations of named entities in a text using different formatting options and save the results in a file.

Getting ready

The displaCy library is part of the spacy package. You need at least version 2.0.12 of the spacy package for displaCy to work. The version in the poetry environment and requirements.txt file is 3.6.1.

The notebook is located at https://github.com/PacktPublishing/Python-Natural-Language-Processing-Cookbook-Second-Edition/blob/main/Chapter07/7.3_ner.ipynb.

How to do it...

We will use spacy to parse the sentence and then the displacy engine to visualize the named entities:

Import both spacy and displacy:

import spacy

from spacy import displacy

Run the language utilities file:

%run -i "../util/lang_utils.ipynb"

Define the text to process:

text = """iPhone 12: Apple makes jump to 5G

Apple has confirmed its iPhone 12 handsets will be its first to work on faster 5G networks.

The company has also extended the range to include a new "Mini" model that has a smaller 5.4in screen.

The US firm bucked a wider industry downturn by increasing its handset sales over the past year.

But some experts say the new features give Apple its best opportunity for growth since 2014, when it revamped its line-up with the iPhone 6.

"5G will bring a new level of performance for downloads and uploads, higher quality video streaming, more responsive gaming, real-time interactivity and so much more," said chief executive Tim Cook.

There has also been a cosmetic refresh this time round, with the sides of the devices getting sharper, flatter edges.

The higher-end iPhone 12 Pro models also get bigger screens than before and a new sensor to help with low-light photography.

However, for the first time none of the devices will be bundled with headphones or a charger."""

In this step, we process the text using the small model (small_model, made available by running the language utilities file). This gives us a Doc object. We then modify the object to contain a title. This title will be part of the NER visualization:

doc = small_model(text)

doc.user_data["title"] = "iPhone 12: Apple makes jump to 5G"

Here, we set up color options for the visualization display. We set green for the ORG-labeled text and yellow for the PERSON-labeled text. We then set the options variable, which contains the colors. Finally, we use the render command to display the visualization. As arguments, we provide the Doc object and the options we previously defined. We also set the style argument to "ent", as we would like to display just entities. We set the jupyter argument to True in order to display directly in the notebook:

colors = {"ORG": "green", "PERSON": "yellow"}

options = {"colors": colors}

displacy.render(doc, style="ent", options=options, jupyter=True)

The output should look like that in Figure 7.4.

Figure 7.4 – Named entities visualization
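displacy.render produces this HTML for you. Purely to show the idea behind the ent style, and not displaCy's actual markup or code, here is a minimal standard-library sketch that wraps character-offset spans in colored mark elements. The COLORS map and render_entities function are hypothetical; with spaCy, the offsets would come from each entity's start_char, end_char, and label_ attributes.

```python
from html import escape

# Hypothetical color map mirroring the recipe's options; unlisted labels get a grey fallback.
COLORS = {"ORG": "green", "PERSON": "yellow"}


def render_entities(
    text: str, ents: list[tuple[int, int, str]], colors: dict[str, str] = COLORS
) -> str:
    """Wrap each (start, end, label) character span in a colored <mark> element."""
    parts = []
    cursor = 0
    for start, end, label in sorted(ents):
        parts.append(escape(text[cursor:start]))  # plain text before the entity
        color = colors.get(label, "#ddd")
        parts.append(
            f'<mark style="background: {color}">{escape(text[start:end])} '
            f"<span>{escape(label)}</span></mark>"
        )
        cursor = end
    parts.append(escape(text[cursor:]))  # trailing text after the last entity
    return f'<div class="entities">{"".join(parts)}</div>'
```

For example, render_entities("Tim Cook leads Apple.", [(0, 8, "PERSON"), (15, 20, "ORG")]) highlights "Tim Cook" in yellow and "Apple" in green, which is the same effect the colors option achieves in the recipe.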

Now we save the visualization to an HTML file. We first define the path variable. Then, we use the same render command, but this time we set the jupyter argument to False and assign the output of the command to the html variable. Finally, we open the file in a with block, which closes it automatically, and write the HTML:

path = "../data/ner_vis.html"  # the ../data directory must already exist

html = displacy.render(doc, style="ent", options=options, jupyter=False)

with open(path, "w", encoding="utf-8") as html_file:
    html_file.write(html)

This will create an HTML file with the entities visualization.

Packt library subscribers can continue reading the entire book for free. You can buy Python Natural Language Processing Cookbook - Second Edition here.

Get the eBook for $35.99 $17.99!

Get the Print Book for $44.99 $30.99!

And that’s a wrap.

We have an entire range of newsletters with focused content for tech pros. Subscribe to the ones you find the most useful here. The complete PythonPro archives can be found here.

If you have any suggestions or feedback, or would like us to find you a Python learning resource on a particular subject, take the survey or leave a comment below!
