PythonPro #54: Global Forecasting Models, Python Overtakes JavaScript, and Hidden Python Libraries

Welcome to a brand new issue of PythonPro!

In today’s Expert Insight, we bring you an excerpt from the recently published book, Modern Time Series Forecasting with Python - Second Edition, which explains the shift from traditional, isolated time series models to global forecasting models. These models leverage related datasets to improve scalability and accuracy and to reduce overfitting in large-scale applications.

News Highlights: Python has overtaken JavaScript on GitHub, driven by its role in AI and data science, per GitHub's Octoverse 2024 report; and IBM’s Deep Search team has released Docling v2, a Python library for document extraction with models on Hugging Face.

And today’s Featured Study introduces SafePyScript, a machine-learning-based tool developed by researchers at the University of Passau, Germany, for detecting vulnerabilities in Python code.

Stay awesome!

Divya Anne Selvaraj

Editor-in-Chief

🐍 Python in the Tech 💻 Jungle 🌳

🗞️News

Python has overtaken JavaScript on GitHub: GitHub’s Octoverse 2024 report reveals Python as the most popular language on GitHub, driven by its role in AI, data science, and machine learning. Jupyter Notebooks usage has also surged.

Docling: Document extraction Python library from the Deep Search team at IBM: IBM’s Deep Search team released Docling v2, an MIT-licensed Python library for document extraction with custom models available on Hugging Face.

💼Case Studies and Experiments🔬

Programmed differently? Testing for gender differences in Python programming style and quality on GitHub: The study confirms that programming style can predict gender but these differences do not impact code quality.

Tune your guitar with python: Demonstrates using Python’s sounddevice and matplotlib modules to create a real-time guitar tuner, where a live spectrogram identifies key bass guitar note frequencies for tuning, with a custom interface.

📊Analysis

Package compatibility tracker: Python 3.13 free-threading and subinterpreters: This compatibility tracker shows that 83% of the 500 most downloaded Python packages are compatible with Python 3.13’s new free-threading feature, while 73% support importing without GIL in Docker tests.

Hidden Python Libraries That Will Blow Your Mind: Introduces six powerful yet lesser-known Python libraries including Streamlit for quick app-building, PyWhatKit for task automation, and Typer for simplified CLIs.

🎓 Tutorials and Guides 🤓

Python threading and subprocesses explained: Details Python’s threading and multiprocessing methods to handle parallel tasks, using thread/process pools for I/O-bound and CPU-bound tasks.

Tiny GraphRAG in 1000 lines of Python: Introduces a simplified, local implementation of the GraphRAG algorithm, using a graph-based structure to enhance RAG for more contextually aware information retrieval.

Building AI chatbots with marimo: Covers how to create adaptable chatbots capable of sophisticated tasks, including visualizing data and processing diverse inputs.

Explanation of Vision Transformer with implementation: Provides an in-depth explanation and step-by-step implementation of Vision Transformer, covering key concepts such as embedding patches with code examples.

PyD-Net: Advancing Real-Time Depth Estimation for Mobile and Embedded Devices: Showcases PyD-Net's practical applications across autonomous navigation, augmented reality, assistive technology, and design.

Explore Solvable and Unsolvable Equations with Python: Delves into solving equations in Python, discussing when closed-form solutions are feasible and when numerical methods become necessary.

Books are Datasets: Mapping 12 Sacred Texts with Python and D3.js: Explores using Python and D3.js to analyze and visualize 12 major sacred texts as datasets, showcasing text-processing techniques to reveal connections and patterns within and between these religious texts.

🔑Best Practices and Advice🔏

Variables in Python: Usage and Best Practices: Covers variable creation, dynamic typing, expressions, and best practices for naming and using variables in various scopes, along with parallel assignment and iterable unpacking.

The Python Square Root Function: Details Python’s sqrt() function from the math module, explaining its use for calculating square roots of positive numbers and zero, while raising errors for negative inputs.

Python Closures: Common Use Cases and Examples: Explains Python closures, inner functions that capture variables from their surrounding scope, enabling state retention, function-based decorators, and encapsulation.

Python ellipses considered harmful: Argues that using ellipses (...) to declare unimplemented methods in Python’s abstract classes can lead to hidden errors, and advocates for raise NotImplementedError instead.

ChatGPT-4o cannot run proper Generalized Additive Models currently - but it can correctly interpret results from R: Highlights limitations of ChatGPT-4o in advanced statistical modeling, informing Python users about workarounds and considerations when working with similar tools.

🔍Featured Study: SafePyScript💥

In "SafePyScript: A Web-Based Solution for Machine Learning-Driven Vulnerability Detection in Python," Farasat et al., researchers from the University of Passau, Germany, introduce SafePyScript, a machine-learning-based web tool designed to detect vulnerabilities in Python code.

Context

In software development, identifying vulnerabilities is a major concern due to the security risks posed by cyberattacks. Vulnerabilities, or flaws in code that can be exploited by attackers, require constant detection and correction. Traditionally, vulnerability detection relies on:

>Static Analysis: This rule-based approach scans code for known vulnerability patterns but often results in high false positives.

>Dynamic Analysis (Penetration Testing): This approach tests code in a runtime environment, relying on security experts to simulate potential attacks, making it resource-heavy and dependent on professional expertise.
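To make the rule-based idea concrete, here is a minimal sketch of a static check that uses Python's standard ast module. It is an illustration only, not how SafePyScript works: the rule set (flagging direct calls to eval and exec) and the helper name find_risky_calls are assumptions chosen for the example.

```python
import ast

# Assumed, deliberately tiny rule set: built-ins that execute arbitrary code.
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source):
    """Return sorted (line_number, function_name) pairs for flagged calls."""
    findings = []
    # Parse the source into a syntax tree without executing it.
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)


sample = '''
user_input = input()
result = eval(user_input)
print(result)
'''

print(find_risky_calls(sample))  # [(3, 'eval')]
```

Because the rule matches on the call name alone, it also flags a harmless call such as eval("1 + 1"), which is the kind of false positive that pattern-based scanning tends to produce.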

Machine learning offers a data-driven alternative, enabling automated vulnerability detection with improved accuracy. Despite its popularity, Python lacks dedicated machine-learning-based tools for this purpose, which SafePyScript aims to provide. SafePyScript leverages a specific machine learning model, BiLSTM (Bidirectional Long Short-Term Memory), and the ChatGPT API to not only detect but also propose secure code, addressing this gap for Python developers.

Key Features of SafePyScript

BiLSTM Model for Vulnerability Detection: Trained on word2vec embeddings, this model has achieved an accuracy of 98.6% and ROC of 99.3% for Python code vulnerabilities.

Integration with ChatGPT API: SafePyScript uses ChatGPT (Turbo 3.5) to analyse and generate secure alternatives for vulnerable code.

Common Vulnerabilities Addressed: These include SQL Injection, Cross-Site Scripting (XSS), Remote Code Execution, Cross-Site Request Forgery (XSRF), and Open Redirect.

User-Friendly Interface: Built using Django (backend) and HTML, CSS, and JavaScript with Ajax (frontend) for a responsive, accessible user experience.

Report Generation: Users can download detailed reports on vulnerabilities detected in their code, making it easier to track and resolve issues systematically.

Feedback Mechanism: Users can provide feedback, allowing for tool improvement and adaptation to new security threats.

What This Means for You

SafePyScript is most useful for Python developers and software engineers who need an efficient way to detect vulnerabilities in their code without relying on traditional, labour-intensive methods. Its machine-learning foundation and integration with ChatGPT make it highly practical for real-world application, providing not only insights into code vulnerabilities but also generating secure code alternatives.

Examining the Details

SafePyScript’s effectiveness rests on its BiLSTM model. Using word2vec embeddings, the model achieved 98.6% accuracy, 96.2% precision, and 99.3% ROC in vulnerability detection. The researchers tuned the BiLSTM’s hyperparameters, such as a learning rate of 0.001 and a batch size of 128, through repeated testing to arrive at these benchmark results.

Additionally, SafePyScript leverages ChatGPT’s language model to generate secure code alternatives. The research team implemented precise prompt engineering to maximise ChatGPT’s effectiveness in analysing Python code vulnerabilities, further supporting the tool’s usability.

SafePyScript’s frontend, built with HTML, CSS, and JavaScript (with Ajax), sits on a Django backend to provide a smooth user experience. This structure allows developers to log in, upload or import code, select detection models, review reports, and access secure code, all within an intuitive, accessible platform.

You can learn more by reading the entire paper or accessing SafePyScript.

🧠 Expert insight💥

Here’s an excerpt from “Chapter 6: Time Series Forecasting as Regression” in the book, Modern Time Series Forecasting with Python - Second Edition by Manu Joseph and Jeffrey Tackes, published in October 2024.

Global forecasting models – a paradigm shift

Traditionally, each time series was treated in isolation. Because of that, traditional forecasting has always looked at the history of a single time series alone in fitting a forecasting function. But recently, because of the ease of collecting data in today's digital-first world, many companies have started collecting large amounts of time series from similar sources, or related time series.

For example, retailers such as Walmart collect data on sales of millions of products across thousands of stores. Companies such as Uber or Lyft collect the demand for rides from all the zones in a city. In the energy sector, energy consumption data is collected across all consumers. All these sets of time series have shared behavior and are hence called related time series.

We can consider that all the time series in a related time series come from separate data generating processes (DGPs), and thereby model them all separately. We call these the local models of forecasting. An alternative to this approach is to assume that all the time series are coming from a single DGP. Instead of fitting a separate forecast function for each time series individually, we fit a single forecast function to all the related time series. This approach has been called global or cross-learning in literature.

The term global was introduced by David Salinas et al. in the DeepAR paper, and the term cross-learning by Slawek Smyl.
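The contrast between local and global models can be sketched in a few lines of plain Python. This is an illustrative sketch rather than code from the book: the three series are hypothetical toy data, and a one-lag linear regression (fit_ar1) stands in for whatever forecast function would be used in practice.

```python
from statistics import mean


def lag_pairs(values):
    """Turn a series into (previous value, next value) training pairs."""
    return list(zip(values[:-1], values[1:]))


def fit_ar1(pairs):
    """Least-squares fit of next = a * previous + b; returns (a, b)."""
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    x_bar, y_bar = mean(xs), mean(ys)
    var_x = sum((x - x_bar) ** 2 for x in xs)
    cov_xy = sum((x - x_bar) * (y - y_bar) for x, y in pairs)
    a = cov_xy / var_x
    return a, y_bar - a * x_bar


def forecast(model, last_value):
    """One-step-ahead forecast from a fitted (a, b) pair."""
    a, b = model
    return a * last_value + b


# Hypothetical related series, e.g. weekly sales of one product in three stores.
series = {
    "store_a": [10.0, 12.0, 11.0, 13.0, 12.0, 14.0],
    "store_b": [20.0, 23.0, 21.0, 24.0, 22.0, 25.0],
    "store_c": [5.0, 6.0, 5.5, 6.5, 6.0, 7.0],
}

# Local approach: one forecast function per series, each fit on 5 pairs.
local_models = {name: fit_ar1(lag_pairs(vals)) for name, vals in series.items()}

# Global approach: a single forecast function fit on all 15 pooled pairs.
pooled = [pair for vals in series.values() for pair in lag_pairs(vals)]
global_model = fit_ar1(pooled)

for name, vals in series.items():
    print(name, forecast(local_models[name], vals[-1]), forecast(global_model, vals[-1]))
```

The local approach leaves three models to maintain, while the global approach leaves one. In practice, series on different scales would usually be normalized or given identifying features before pooling; that step is left out here to keep the sketch short.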

...having more data will lead to lower chances of overfitting and, therefore, lower generalization error (the difference between training and testing errors). This is exactly one of the shortcomings of the local approach. Traditionally, time series are not very long, and in many cases, it is difficult and time-consuming to collect more data as well. Fitting a machine learning model (with all its expressiveness) on small data is prone to overfitting. This is why time series models that enforce strong priors were used to forecast such time series, traditionally. But these strong priors, which restrict the fitting of traditional time series models, can also lead to a form of underfitting and limit accuracy.

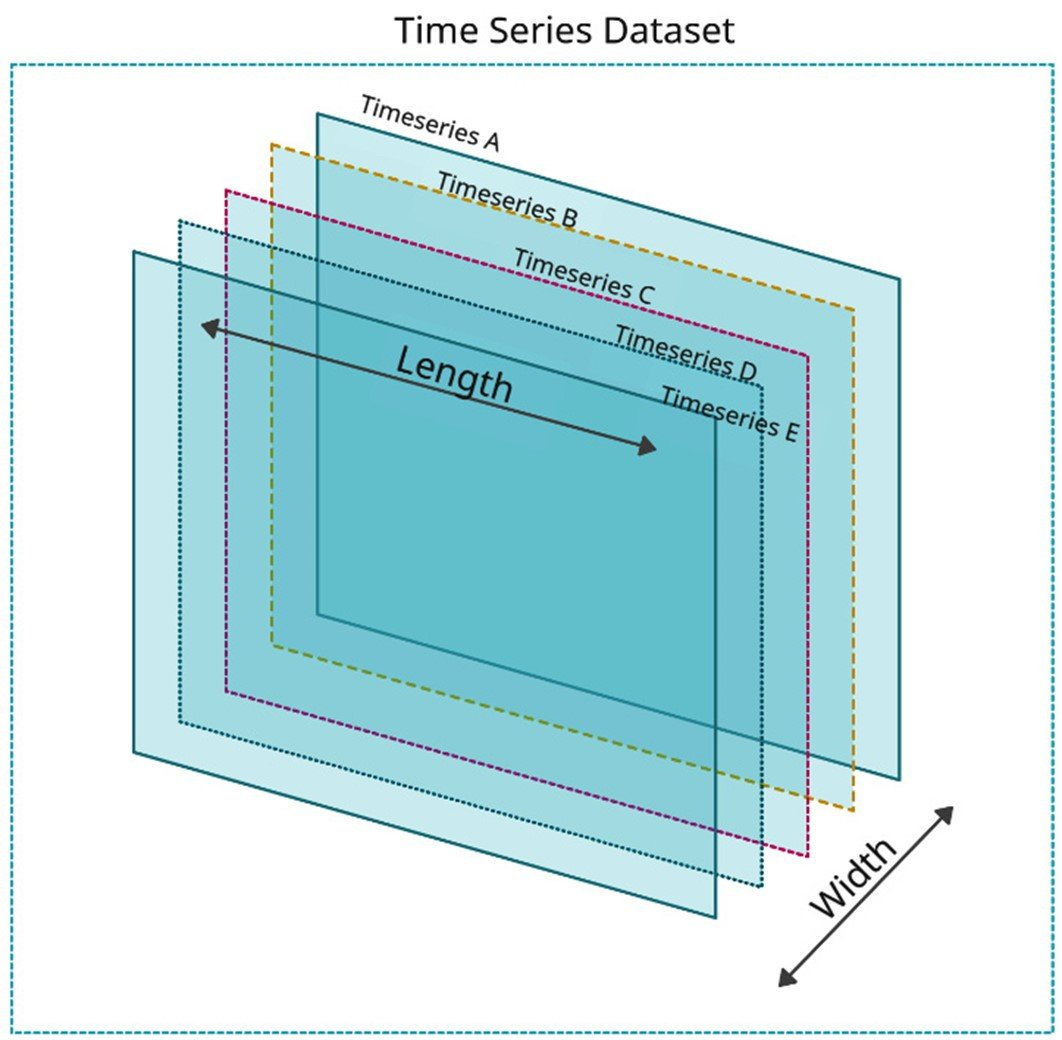

Strong and expressive data-driven models, as in machine learning, require a larger amount of data to have a model that generalizes to new and unseen data. A time series, by definition, is tied to time, and sometimes, collecting more data means waiting for months or years and that is not desirable. So, if we cannot increase the length of the time series dataset, we can increase the width of the time series dataset. If we add multiple time series to the dataset, we increase the width of the dataset, and thereby increase the amount of data the model is getting trained with. Figure 5.7 shows the concept of increasing the width of a time series dataset visually:

Figure 5.7 – The length and width of a time series dataset

This works in favor of machine learning models because with higher flexibility in fitting a forecast function and the addition of more data to work with, the machine learning model can learn a more complex forecast function than traditional time series models, which are typically shared between the related time series, in a completely data-driven way.
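As a rough illustration of what widening the dataset means for training data, the sketch below turns each series into lag-feature rows and compares one series against several. The zone names, values, and the make_rows helper are hypothetical and chosen only for this example.

```python
def make_rows(series_id, values, n_lags):
    """Build one training row per time step that has n_lags of history."""
    rows = []
    for t in range(n_lags, len(values)):
        rows.append(
            {
                "series_id": series_id,
                "lags": tuple(values[t - n_lags:t]),  # the n_lags previous values
                "target": values[t],                  # the value to predict
            }
        )
    return rows


# Hypothetical ride-demand counts for three zones, six time steps each.
related_series = {
    "zone_1": [3, 4, 6, 5, 7, 8],
    "zone_2": [1, 2, 2, 3, 4, 4],
    "zone_3": [9, 8, 10, 11, 10, 12],
}
N_LAGS = 2

# Length only: a single series yields 4 rows.
single = make_rows("zone_1", related_series["zone_1"], N_LAGS)

# Added width: stacking all related series yields 12 rows for the same time span.
wide = [
    row
    for series_id, values in related_series.items()
    for row in make_rows(series_id, values, N_LAGS)
]

print(len(single), len(wide))  # 4 12
```

No extra waiting was needed to triple the number of training rows; the additional rows come entirely from the other series observed over the same period.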

Another shortcoming of the local approach revolves around scalability. In the case of Walmart we mentioned earlier, there are millions of time series that need to be forecasted and it is not possible to have human oversight on all these models. If we think about this from an engineering perspective, training and maintaining millions of models in a production system would give any engineer a nightmare. But under the global approach, we only train a single model for all these time series, which drastically reduces the number of models we need to maintain and yet can generate all the required forecasts.

This new paradigm of forecasting has gained traction and has consistently been shown to improve on local approaches in multiple time series competitions, mostly in datasets of related time series. In Kaggle competitions, such as Rossmann Store Sales (2015), Wikipedia Web Traffic Time Series Forecasting (2017), Corporación Favorita Grocery Sales Forecasting (2018), and M5 Competition (2020), the winning entries were all global models, either machine learning or deep learning or a combination of both. The Intermarché Forecasting Competition (2021) also had global models as the winning submissions. Links to these competitions are provided in the Further reading section.

Although we have many empirical findings where global models have outperformed local models for related time series, global models are still a relatively new area of research. Montero-Manso and Hyndman (2020) reported several interesting results: they showed that any local method can be approximated by a global model with the required complexity, and their most interesting finding is that the global model will perform better, even with unrelated time series. We will talk more about global models and strategies for global models in Chapter 10, Global Forecasting Models.

Modern Time Series Forecasting with Python - Second Edition was published in October 2024.

Get the eBook for $46.99 $31.99!

Get the Print Book for $57.99!

And that’s a wrap.

We have an entire range of newsletters with focused content for tech pros. Subscribe to the ones you find the most useful here. The complete PythonPro archives can be found here.

If you have any suggestions or feedback, or would like us to find you a Python learning resource on a particular subject, just respond to this email!