PythonPro #55: Python Project Packaging Guide, AWS Credentials Theft Alert, and PyTorch 2 Speeds Up ML

Welcome to a brand new issue of PythonPro!

In today’s Expert Insight we bring you an excerpt from the recently published book, LLM Engineer's Handbook, which discusses comprehensive RAG evaluation through the Ragas and ARES frameworks.

News Highlights: Malicious Python package "Fabrice" on PyPI has been stealing AWS credentials by mimicking Fabric; and PyTorch 2 boosts ML speeds with dynamic bytecode transformation, achieving 2.27x inference and 1.41x training speedups on NVIDIA A100 GPUs.

My top 5 picks from today’s learning resources:

And today’s Featured Study introduces Magentic-One, a generalist multi-agent AI system developed by Microsoft Research, designed to coordinate specialised agents in tackling complex, multi-step tasks across diverse applications.

Stay awesome!

Divya Anne Selvaraj

Editor-in-Chief

P.S.: This month's survey is now live. Do take the opportunity to leave us your feedback, request a learning resource, and earn your one Packt credit for this month.

Python in the Tech Jungle

News

Malicious Python package collects AWS credentials via 37,000 downloads: The malicious Python package "Fabrice" has been typosquatting on PyPI since 2021, gathering AWS credentials by imitating the popular Fabric SSH library.

PyTorch 2 Speeds Up ML with Dynamic Python Bytecode Transformation and Graph Compilation: Fully backward-compatible, the version achieves a 2.27x inference speedup and 1.41x training speedup on NVIDIA A100 GPUs, surpassing six other compilers across 180+ models.

Case Studies and Experiments

LangGraph for Healthcare: A Comprehensive Technical Guide: Delves into the technical aspects of integrating LangGraph into healthcare workflows, emphasizing its potential to improve patient care, streamline administrative tasks, and facilitate medical research.

Timing-sensitive analysis in python: Explores timing sensitivity in Python through a hands-on approach, testing timing stability under different conditions (like input size and intentional delay).

State of Python 3.13 Performance: Free-Threading: Examines how free-threading affects Python's ability to handle parallel tasks, particularly through a practical example with a PageRank algorithm implementation.

Modular, Faster DateOnly Operations in Python: Delves into the reasoning behind memory-efficient, modular date handling and performance optimization, examining different approaches and technologies like C structures.

Tutorials and Guides

How to Reset a pandas DataFrame Index: Covers methods like .reset_index(), .index, and .set_axis() while exploring index alignment, duplicate removal, multi-index handling, and using columns as indexes.

A Comprehensive Guide to Python Project Management and Packaging: Covers structuring projects, managing dependencies, and creating distributable packages, along with practical examples.

Effortless Image Cropping with Python: Automate Your Workflow in Minutes: Shows you how to automate image cropping in Python using OpenCV to identify the focal area of an image and crop it to a 16:9 aspect ratio.

Adding keyboard shortcuts to the Python REPL: Explains how to add custom keyboard shortcuts to the Python 3.13 REPL using a PYTHONSTARTUP file and the unsupported _pyrepl module.

Simplifying News Scraping with Python’s Newspaper4k Library: Demonstrates how to use Python’s Newspaper4k library to automate the scraping and parsing of news articles, extracting key components.

Principal Component Analysis with Python (A Deep Dive) - Part 1: Provides a step-by-step guide for reducing data dimensionality through mathematical and coding examples.

Using the OpenAI Realtime API in Python: Covers key challenges such as managing 24kHz, 16-bit audio format, avoiding jerky audio by handling recording and playback concurrently, and preventing echo by using a headset.

Best Practices and Advice

How to Fix the Most Common Python Coding Errors: Covers IndentationError, SyntaxError, TypeError (NoneType not subscriptable), IndexError (list index out of range), and KeyError (missing dictionary key).

Do Constructors Return Values in OOP?: Clarifies that while constructors don’t explicitly return values, they implicitly return the newly created instance of the class, fulfilling their primary purpose of object initialization.

20 Python scripts to automate common daily tasks: Lists scripts for tasks such as sending emails, scraping news, downloading stock prices, backing up files, posting to social media, fetching weather updates, and resizing images.

What time is it? A simple approach to AI-agents: Explains how AI agents solve real-time queries by selecting and executing pre-defined functions, using tasks like fetching the current time and weather as examples.

How I Got Started Making Maps with Python and SQL: Recounts the author’s journey into spatial data visualization using tools like DuckDB, H3, and GeoPandas to create interactive maps, from building density to 3D dashboards.

Featured Study: Magentic-One

In "Magentic-One: A Generalist Multi-Agent System for Solving Complex Tasks," Fourney et al. from AI Frontiers - Microsoft Research aim to develop a versatile, multi-agent AI system capable of autonomously completing complex tasks. The study presents Magentic-One as a generalist solution that orchestrates specialised agents to tackle tasks that require planning, adaptability, and error recovery.

Context

To address the need for AI systems capable of handling a wide range of tasks, Magentic-One leverages a multi-agent architecture. In this setup, agents are AI-driven components, each with a distinct skill, such as web browsing or code execution, all working under the direction of an Orchestrator agent. The Orchestrator not only delegates tasks but monitors and revises strategies to keep progress on track, ensuring effective task completion. This system responds to the growing demand for agentic systems in AI—those able to handle tasks involving multiple steps, real-time problem-solving, and error correction.

The importance of such systems has increased as AI technology advances in areas like software development, data analysis, and web-based research, where single-agent models often struggle with multi-step, unpredictable tasks. By developing Magentic-One as a generalist system, the researchers offer a foundation that balances adaptability and reliability across diverse applications, helping establish future standards for agentic AI systems.

Key Features of Magentic-One

Multi-Agent Architecture: Magentic-One uses multiple agents, each specialising in a specific task, coordinated by an Orchestrator agent.

Orchestrator-Led Dynamic Planning: The Orchestrator creates and adapts task plans, tracks progress, and initiates corrective actions as needed, improving resilience.

Specialised Agents:

Coder: Writes, analyses, and revises code.

ComputerTerminal: Executes code, manages shell commands.

WebSurfer: Browses the web, interacts with web pages.

FileSurfer: Reads and navigates files of various types.

Performance on Benchmarks: Magentic-One achieved high performance on challenging benchmarks like GAIA (38% completion rate) and AssistantBench (27.7% accuracy), positioning it competitively among state-of-the-art systems.

AutoGenBench Tool for Evaluation: AutoGenBench offers a controlled testing environment, allowing for repeatable, consistent evaluation of agentic systems like Magentic-One.
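The orchestrator-plus-specialists pattern listed above can be sketched in a few lines of Python. This is a toy illustration only, not Magentic-One's code or API: the Orchestrator class, its run method, the retry limit, the log, and the stand-in agent functions are all hypothetical names introduced here.

```python
from typing import Callable, Dict, List, Tuple

# An agent takes an instruction and returns (success, output).
Agent = Callable[[str], Tuple[bool, str]]


# Hypothetical stand-ins for specialised agents. Real agents would call
# an LLM, a browser, or a shell; these just echo the instruction.
def coder(instruction: str) -> Tuple[bool, str]:
    return True, f"code for: {instruction}"


def web_surfer(instruction: str) -> Tuple[bool, str]:
    return True, f"page content for: {instruction}"


class Orchestrator:
    """Toy orchestrator: walks a plan, delegates each step, retries on failure."""

    def __init__(self, agents: Dict[str, Agent], max_retries: int = 2):
        self.agents = agents
        self.max_retries = max_retries
        self.log: List[str] = []  # running record of what happened

    def run(self, plan: List[Tuple[str, str]]) -> bool:
        for agent_name, instruction in plan:
            agent = self.agents[agent_name]
            # One initial attempt plus up to max_retries retries.
            for attempt in range(1, self.max_retries + 2):
                ok, _output = agent(instruction)
                status = "ok" if ok else "failed"
                self.log.append(f"{agent_name} attempt {attempt}: {status}")
                if ok:
                    break
            else:
                # Every attempt failed, so the plan cannot be completed.
                return False
        return True


orchestrator = Orchestrator({"Coder": coder, "WebSurfer": web_surfer})
done = orchestrator.run([
    ("WebSurfer", "find the library documentation"),
    ("Coder", "write a parser for the documented format"),
])
```

A real orchestrator would also revise the plan when a step keeps failing; the sketch only retries, which keeps the delegation and progress-tracking loop easy to see.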

What This Means for You

The study’s findings are particularly relevant for developers, researchers, and AI practitioners focused on real-world applications of AI for complex, multi-step tasks. For instance, fields such as autonomous software engineering, data management, and digital research can leverage Magentic-One's multi-agent system to automate complex workflows. Its modular, open-source design enables further adaptation, making it useful for those interested in customising AI tools to meet specific requirements or studying multi-agent coordination for diverse scenarios.

Examining the Details

The researchers applied a rigorous methodology to assess Magentic-One's reliability and practical value. Key benchmarks included GAIA, AssistantBench, and WebArena, each with unique tasks requiring multi-step reasoning, data handling, and planning. To verify the system’s efficacy, Magentic-One’s performance was compared against established state-of-the-art systems. The study reports a 38% task completion rate on GAIA, positioning Magentic-One competitively among leading systems without modifying core agent capabilities.

To analyse the system’s interactions and address limitations, the team examined errors in detail, identifying recurring issues such as repetitive actions and insufficient data validation. By tracking these errors and using AutoGenBench, an evaluation tool ensuring isolated test conditions, the researchers provided a clear, replicable performance baseline. Their approach underscores the importance of modularity in AI design, as Magentic-One's agents operated effectively without interfering with each other, demonstrating both reliability and extensibility.

You can learn more by reading the entire paper or accessing the system here.

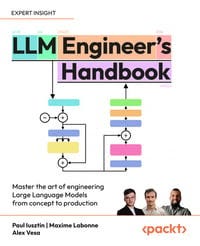

Expert insight

Here’s an excerpt from “Chapter 7: Evaluating LLMs” in the book, LLM Engineer's Handbook by Paul Iusztin and Maxime Labonne, published in October 2024.

RAG evaluation

While traditional LLM evaluation focuses on the model’s inherent capabilities, RAG evaluation requires a more comprehensive approach that considers both the model’s generative abilities and its interaction with external information sources.

RAG systems combine the strengths of LLMs with information retrieval mechanisms, allowing them to generate responses that are not only coherent and contextually appropriate but also grounded in up-to-date, externally sourced information. This makes RAG particularly valuable in fields where current and accurate information is crucial, such as news reporting, research, and customer support.

The evaluation of RAG systems goes beyond assessing a standalone LLM. It requires examining the entire system’s performance, including:

Retrieval accuracy: How well does the system fetch relevant information?

Integration quality: How effectively is the retrieved information incorporated into the generated response?

Factuality and relevance: Does the final output address the query appropriately while seamlessly blending retrieved and generated content?

Key metrics for RAG evaluation include retrieval precision and recall, which measure the accuracy and comprehensiveness of the retrieved information. Additionally, the quality of integration between retrieved data and generated text is crucial, as is the overall factuality and coherence of the output.

To illustrate how these metrics are applied in practice, consider a RAG system designed for a customer support chatbot in an e-commerce setting. In this scenario, the user asks “What’s your return policy for laptops purchased during the holiday sale?” The RAG pipeline finds relevant documents on the electronics return policy and documents on holiday sale terms. This additional context is appended at the end of the question, and the model uses it to respond:

For laptops purchased during our holiday sale, you have an extended return period of 60 days from the date of purchase. This is longer than our standard 30-day return policy for electronics. Please ensure the laptop is in its original packaging with all accessories to be eligible for a full refund.

Table 7.3: Example of output from a RAG pipeline designed for customer support

In this pipeline, we can evaluate if the retrieved documents correspond to what was expected (retrieval accuracy). We can also measure the difference between responses with and without additional context (integration quality). Finally, we can assess whether the output is relevant and grounded in the information provided by the documents (factuality and relevance).
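As a rough illustration of the retrieval accuracy check, the snippet below computes set-based precision and recall over document identifiers. It is a minimal sketch, not code from the book: the function name and the document IDs are made up for the customer support example, and it assumes you already know which documents should have been retrieved.

```python
from typing import List, Set, Tuple


def retrieval_precision_recall(retrieved: List[str], relevant: Set[str]) -> Tuple[float, float]:
    """Return (precision, recall) of retrieved document IDs against the expected ones."""
    retrieved_set = set(retrieved)
    hits = len(retrieved_set & relevant)
    # Precision: share of retrieved documents that were expected.
    precision = hits / len(retrieved_set) if retrieved_set else 0.0
    # Recall: share of expected documents that were retrieved.
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical document IDs for the laptop return policy question.
retrieved = ["electronics_returns", "holiday_sale_terms", "shipping_rates"]
relevant = {"electronics_returns", "holiday_sale_terms", "warranty_policy"}

precision, recall = retrieval_precision_recall(retrieved, relevant)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Here two of the three retrieved documents were expected, and two of the three expected documents were retrieved, so both scores come out at two thirds.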

In this section, we will cover two methods to evaluate how well RAG models incorporate external information into their responses.

Ragas

Retrieval-Augmented Generation Assessment (Ragas) is an open-source toolkit designed to provide developers with a comprehensive set of tools for RAG evaluation and optimization. It’s designed around the idea of metrics-driven development (MDD), a product development approach that relies on data to make well-informed decisions, involving the ongoing monitoring of essential metrics over time to gain valuable insights into an application’s performance. By embracing this methodology, Ragas enables developers to objectively assess their RAG systems, identify areas for improvement, and track the impact of changes over time.

One of the key capabilities of Ragas is its ability to synthetically generate diverse and complex test datasets. This feature addresses a significant pain point in RAG development, as manually creating hundreds of questions, answers, and contexts is both time-consuming and labor-intensive. Instead, it uses an evolutionary approach inspired by works like Evol-Instruct to craft questions with varying characteristics such as reasoning complexity, conditional elements, and multi-context requirements. This approach ensures a comprehensive evaluation of different components within the RAG pipeline.

Additionally, Ragas can generate conversational samples that simulate chat-based question-and-follow-up interactions, allowing developers to evaluate their systems in more realistic scenarios.

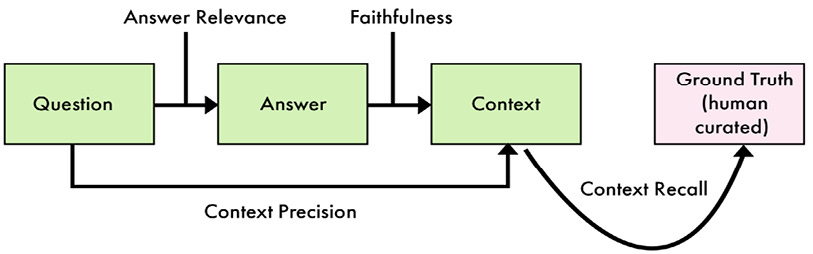

Figure 7.1: Overview of the Ragas evaluation framework

As illustrated in Figure 7.1, Ragas provides a suite of LLM-assisted evaluation metrics designed to objectively measure different aspects of RAG system performance. These metrics include:

Faithfulness: This metric measures the factual consistency of the generated answer against the given context. It works by breaking down the answer into individual claims and verifying if each claim can be inferred from the provided context. The faithfulness score is calculated as the ratio of verifiable claims to the total number of claims in the answer.

Answer relevancy: This metric evaluates how pertinent the generated answer is to the given prompt. It uses an innovative approach where an LLM is prompted to generate multiple questions based on the answer and then calculates the mean cosine similarity between these generated questions and the original question. This method helps identify answers that may be factually correct but off-topic or incomplete.

Context precision: This metric evaluates whether all the ground-truth relevant items present in the contexts are ranked appropriately. It considers the position of relevant information within the retrieved context, rewarding systems that place the most pertinent information at the top.

Context recall: This metric measures the extent to which the retrieved context aligns with the annotated answer (ground truth). It analyzes each claim in the ground truth answer to determine whether it can be attributed to the retrieved context, providing insights into the completeness of the retrieved information.
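The arithmetic behind these four metrics can be sketched in plain Python. This is not the Ragas API: in Ragas an LLM extracts and verifies the claims and generates the questions, whereas here those judgments and the embedding vectors are passed in as ready-made inputs. All function names are hypothetical, and the rank-aware formula used for context precision (an average of precision@k over the ranks that hold relevant chunks) is an assumption, since the text only says that higher-ranked relevant chunks are rewarded.

```python
import math
from typing import Sequence


def faithfulness(claim_supported: Sequence[bool]) -> float:
    """Ratio of answer claims that can be inferred from the context."""
    return sum(claim_supported) / len(claim_supported) if claim_supported else 0.0


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def answer_relevancy(question_vec: Sequence[float],
                     generated_question_vecs: Sequence[Sequence[float]]) -> float:
    """Mean cosine similarity between the original and the generated questions."""
    sims = [cosine_similarity(question_vec, g) for g in generated_question_vecs]
    return sum(sims) / len(sims) if sims else 0.0


def context_precision(relevance_by_rank: Sequence[bool]) -> float:
    """Assumed rank-aware formula: mean precision@k over ranks with a relevant chunk."""
    hits, total = 0, 0.0
    for k, is_relevant in enumerate(relevance_by_rank, start=1):
        if is_relevant:
            hits += 1
            total += hits / k
    return total / hits if hits else 0.0


def context_recall(ground_truth_claim_attributed: Sequence[bool]) -> float:
    """Ratio of ground-truth claims that can be attributed to the retrieved context."""
    flags = ground_truth_claim_attributed
    return sum(flags) / len(flags) if flags else 0.0


# Toy inputs: the booleans stand in for LLM judgments, the vectors for embeddings.
faith = faithfulness([True, True, False])
relevancy = answer_relevancy([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
top_ranked = context_precision([True, False, True])
low_ranked = context_precision([False, True, True])
recall = context_recall([True, False])
```

With these toy inputs, the same two relevant chunks score higher on context precision when one of them sits at rank 1 (top_ranked) than when both sit below an irrelevant chunk (low_ranked).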

Finally, Ragas also provides building blocks for monitoring RAG quality in production environments. This facilitates continuous improvement of RAG systems. By leveraging the evaluation results from test datasets and insights gathered from production monitoring, developers can iteratively enhance their applications. This might involve fine-tuning retrieval algorithms, adjusting prompt engineering strategies, or optimizing the balance between retrieved context and LLM generation.

Ragas can be complemented with another approach, based on custom classifiers.

ARES

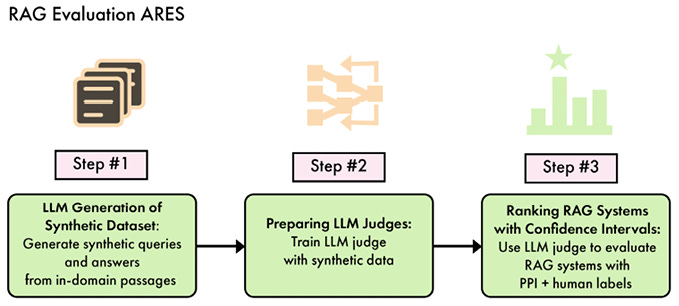

ARES (an automated evaluation framework for RAG systems) offers an automated process that combines synthetic data generation with fine-tuned classifiers to assess various aspects of RAG performance, including context relevance, answer faithfulness, and answer relevance.

The ARES framework operates in three main stages: synthetic data generation, classifier training, and RAG evaluation. Each stage is configurable, allowing users to tailor the evaluation process to their specific needs and datasets.

In the synthetic data generation stage, ARES creates datasets that closely mimic real-world scenarios for robust RAG testing. Users can configure this process by specifying document file paths, few-shot prompt files, and output locations for the synthetic queries. The framework supports various pre-trained language models for this task, with the default being google/flan-t5-xxl. Users can control the number of documents sampled and other parameters to balance between comprehensive coverage and computational efficiency.

Figure 7.2: Overview of the ARES evaluation framework

The classifier training stage involves creating high-precision classifiers to determine the relevance and faithfulness of RAG outputs. Users can specify the classification dataset (typically generated from the previous stage), test set for evaluation, label columns, and model choice. ARES uses microsoft/deberta-v3-large as the default model but supports other Hugging Face models. Training parameters such as the number of epochs, patience value for early stopping, and learning rate can be fine-tuned to optimize classifier performance.
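To make the patience parameter concrete, here is a minimal sketch of early stopping. It is not ARES's training loop: the function name is hypothetical and the validation losses are simulated numbers rather than the output of a real classifier. Training stops once the validation loss has failed to improve for `patience` consecutive epochs.

```python
from typing import Iterable, Tuple


def run_with_early_stopping(val_losses: Iterable[float], patience: int) -> Tuple[int, float]:
    """Return (epochs run, best validation loss) under early stopping."""
    best = float("inf")
    stale_epochs = 0  # consecutive epochs without improvement
    epochs_run = 0
    for loss in val_losses:
        epochs_run += 1
        if loss < best:
            best = loss
            stale_epochs = 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:
                break  # no improvement for `patience` epochs in a row
    return epochs_run, best


# Simulated validation losses, one per epoch.
simulated_losses = [0.90, 0.70, 0.65, 0.66, 0.68, 0.64]
epochs_run, best_loss = run_with_early_stopping(simulated_losses, patience=2)
print(epochs_run, best_loss)
```

With a patience of 2, the run stops after epoch 5 and never sees the better loss at epoch 6, which is the trade-off the patience value controls: a larger value costs more compute but is less likely to stop on a temporary plateau.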

The final stage, RAG evaluation, leverages the trained classifiers and synthetic data to assess the RAG model’s performance. Users provide evaluation datasets, few-shot examples for guiding the evaluation, classifier checkpoints, and gold label paths. ARES supports various evaluation metrics and can generate confidence intervals for its assessments.
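The excerpt does not say how ARES computes its confidence intervals, so the sketch below uses a plain percentile bootstrap as a stand-in to show what an interval around a classifier-based score looks like. The function name, the resample count, and the toy labels (1 meaning the classifier judged an answer faithful) are all assumptions made for illustration.

```python
import random
from typing import List, Tuple


def bootstrap_ci(labels: List[int], n_resamples: int = 2000,
                 alpha: float = 0.05, seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap interval for the mean of binary judgments."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    n = len(labels)
    # Resample with replacement and record the mean of each resample.
    means = sorted(sum(rng.choices(labels, k=n)) / n for _ in range(n_resamples))
    lower = means[int((alpha / 2) * n_resamples)]
    upper = means[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


# Toy judgments: 80 of 100 answers judged faithful.
judgments = [1] * 80 + [0] * 20
point_estimate = sum(judgments) / len(judgments)
lower, upper = bootstrap_ci(judgments)
print(f"faithfulness = {point_estimate:.2f}, 95% interval = [{lower:.2f}, {upper:.2f}]")
```

Reporting the interval alongside the point estimate makes it easier to tell whether a difference between two RAG configurations is larger than the noise in the evaluation set.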

ARES offers flexible model execution options, supporting both cloud-based and local runs through vLLM integration. The framework also supports various artifact types (code snippets, documents, HTML, images, and so on), enabling comprehensive evaluation across different RAG system outputs.

In summary, Ragas and ARES complement each other through their distinct approaches to evaluation and dataset generation. Ragas’s strength in production monitoring and LLM-assisted metrics can be combined with ARES’s highly configurable evaluation process and classifier-based assessments. While Ragas may offer more nuanced evaluations based on LLM capabilities, ARES provides consistent and potentially faster evaluations once its classifiers are trained. Combining them offers a comprehensive evaluation framework, benefiting from quick iterations with Ragas and in-depth, customized evaluations with ARES at key stages.

LLM Engineer's Handbook was published in October 2024.

And that’s a wrap.

We have an entire range of newsletters with focused content for tech pros. Subscribe to the ones you find the most useful here. The complete PythonPro archives can be found here.

If you have any suggestions or feedback, or would like us to find you a Python learning resource on a particular subject, take the survey or leave a comment below!