PythonPro #57: NASA Image Pipeline in Airflow, PyPI Crypto Scare, and Numba vs. Cython

Welcome to a brand new issue of PythonPro!

In today’s Expert Insight, we bring you an excerpt from the recently published book, Apache Airflow Best Practices, which explains how to build and test a pipeline in Jupyter Notebook to extract daily images from NASA's APOD API, store them locally, and prepare the workflow for automation using Apache Airflow.

News Highlights: PyPI's aiocpa updated with code to steal private keys via Telegram; AWS Lambda SnapStart now supports Python 3.12+ and .NET 8+ for faster startups; Eel simplifies Python/JS HTML GUI apps with async support; and Marimo raises $5M for an open-source reactive Python notebook.

My top 5 picks from today’s learning resources:

And today’s Featured Study introduces CODECLEANER, an open-source toolkit that uses automated code refactoring to mitigate data contamination in Code Language Models, significantly improving evaluation reliability across Python and Java through systematic, scalable techniques.

Stay awesome!

Divya Anne Selvaraj

Editor-in-Chief

P.S.: Thank you to all who participated in this month's survey. With this issue, we have fulfilled all content requests made this month.

🐍 Python in the Tech 💻 Jungle 🌳

🗞️News

Python Crypto Library Updated to Steal Private Keys: The PyPI package aiocpa was updated with malicious code to exfiltrate private keys via Telegram, targeting crypto library users.

AWS Lambda now supports SnapStart for Python and .NET functions: This opt-in feature is ideal for latency-sensitive applications and is available for Python 3.12+ and .NET 8+ across several AWS regions.

Eel: For little HTML GUI applications, with easy Python/JS interop: The library simplifies development for utility scripts, supports asynchronous functions, and enables distribution through PyInstaller.

Marimo raises $5M to build an open-source reactive Python notebook: The Python notebook will be optimized for reproducibility, Git compatibility, script execution, and web app deployment.

💼Case Studies and Experiments🔬

Running Code from Strangers: Recounts the evolution of Livedocs' runtime architecture, from browser-based Pyodide to Kubernetes for scalability, security, and performance in running isolated, interactive Python-based documents.

Mach 1 with Python!: Details a fun DIY project using a Raspberry Pi, ultrasonic sensors, and Python to create a Mario pipe that plays a sound whenever someone passes through it.

📊Analysis

Numba vs. Cython: A Technical Comparison: Compares the two tools for optimizing Python performance, detailing their features, use cases, and benchmarking results to guide their practical application.

Is async django ready for prime time?: Details the setup required for fully async operations, such as using an ASGI server, async views, and an async ORM, while showcasing tools like django-ninja and aiohttp.

🎓 Tutorials and Guides 🤓

How to Iterate Through a Dictionary in Python: Explores various methods for iterating through Python dictionaries, including the .items(), .keys(), and .values() methods for accessing key-value pairs, keys, or values.

NumPy Practical Examples: Useful Techniques: Demonstrates advanced NumPy techniques, including creating multidimensional arrays from file data, handling duplicates, and reconciling datasets with structured arrays.
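For readers new to the dictionary methods named in the first tutorial above, here is a minimal sketch of what each one yields. The inventory dictionary is made-up sample data, not taken from the tutorial.

```python
# Hypothetical sample data for illustration
inventory = {"apples": 3, "pears": 5, "plums": 0}

# .items() yields (key, value) pairs
in_stock = [name for name, count in inventory.items() if count > 0]

# .keys() yields just the keys (iterating over the dict directly does the same)
names = list(inventory.keys())

# .values() yields just the values
total = sum(inventory.values())

print(in_stock)  # ['apples', 'pears']
print(names)     # ['apples', 'pears', 'plums']
print(total)     # 8
```

Dictionaries preserve insertion order (Python 3.7+), so all three views walk the entries in the order they were added.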

Build generative AI applications on Amazon Bedrock with the AWS SDK for Python (Boto3): Focuses on accessing and integrating foundation models into applications without managing infrastructure.

Python range(): Represent Numerical Ranges: Covers Python's range() function, explaining its use for generating numerical sequences for loops and defining intervals with start, stop, and step parameters.

A Complete Guide to Credit Risk Analysis With Python and Datalore AI: Utilizing Python and Datalore's AI-assisted coding capabilities, this guide demonstrates how to retrieve and process financial data, create visualizations, and perform statistical analyses to uncover patterns and insights.
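As a quick refresher on the range() tutorial above, this short sketch shows how the start, stop, and step parameters behave (the values are arbitrary examples):

```python
# range(stop): counts from 0 up to, but not including, stop
first_five = list(range(5))          # [0, 1, 2, 3, 4]

# range(start, stop, step): every second number from 2 to 10 (stop is excluded)
evens = list(range(2, 11, 2))        # [2, 4, 6, 8, 10]

# A negative step counts down
countdown = list(range(3, 0, -1))    # [3, 2, 1]

# range objects support len() and membership tests without building a list
r = range(0, 100, 5)
print(len(r), 50 in r, 52 in r)      # 20 True False
```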

Optimize Python with Nuitka: Introduces Nuitka, a Python-to-C++ compiler, detailing its features like performance optimization, compatibility, cross-platform support, and the creation of standalone executables.

NotebookLM-style podcasts with API in < 50 lines of Python code: A notebook-based tutorial leveraging the OpenAI API to generate a concise summary of the input text and then utilizes a text-to-speech (TTS) service to convert this summary into audio format.

Language Translation with Python: Introduces LibreTranslate, a lightweight, Python-based API server for language detection and translation, particularly useful for analyzing data in non-English languages.

🔑Best Practices and Advice🔏

The Code That Almost Led to Disaster • A Starbase Story: Narrates a fictional scenario where a Python import error nearly causes a catastrophic failure on a starbase, illustrating the critical importance of understanding Python's import system to prevent such issues.

Speed Up Your Python Program With Concurrency: Explains threading, asynchronous tasks, and multiprocessing and demonstrates how to optimize I/O-bound and CPU-bound tasks for improved performance.
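As a rough illustration of why threads help with I/O-bound work, the sketch below overlaps five simulated downloads using concurrent.futures.ThreadPoolExecutor. The fake_download function is a hypothetical stand-in that sleeps instead of doing real I/O. For CPU-bound work in standard CPython builds, ProcessPoolExecutor is the usual counterpart, since the GIL prevents pure-Python threads from running bytecode in parallel.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(n: int) -> int:
    """Hypothetical stand-in for an I/O-bound call such as an HTTP request."""
    time.sleep(0.2)  # the thread waits here, letting other threads run
    return n * 10


start = time.perf_counter()
with ThreadPoolExecutor(max_workers=5) as pool:
    # map() returns results in input order, even though calls run concurrently
    results = list(pool.map(fake_download, range(5)))
elapsed = time.perf_counter() - start

print(results)            # [0, 10, 20, 30, 40]
print(f"{elapsed:.2f}s")  # roughly 0.2s instead of about 1.0s, because the sleeps overlap
```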

Efficient String Concatenation in Python: Covers methods including the + and += operators, the .join() method for lists, and tools like StringIO for handling large datasets, with best practices for performance and flexibility.

Interacting With Python: Explores ways to interact with Python and provides guidance on each, including the interactive REPL mode, running scripts from files via the command line, working in IDEs, and leveraging tools like Jupyter Notebooks.
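The three concatenation approaches from the string tutorial above can be compared in a few lines. This is a minimal sketch with made-up data, not code from the tutorial:

```python
from io import StringIO

words = ["Python", "is", "fun"]

# + and += can create a new string at each step; fine for a handful of pieces
sentence = ""
for w in words:
    sentence += w + " "
sentence = sentence.rstrip()

# str.join() builds the result in one pass from an iterable of strings
joined = " ".join(words)

# StringIO behaves like an in-memory text file you can write to incrementally
buf = StringIO()
for w in words:
    buf.write(w)
    buf.write(" ")
streamed = buf.getvalue().rstrip()

print(sentence == joined == streamed)  # True
```

For a list you already have in memory, .join() is usually the simplest choice; StringIO fits cases where pieces arrive one at a time.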

Samuel Colvin on LogFire, mixing Python with Rust & being pydantic about types: This podcast episode features Samuel Colvin, who introduces LogFire, a new observability platform for Python, while exploring the increasing integration of Rust into Python’s ecosystem.

🔍Featured Study: CODECLEANER💥

In "CODECLEANER: Elevating Standards with a Robust Data Contamination Mitigation Toolkit," Cao et al. address the pervasive issue of data contamination in Code Language Models (CLMs). The study introduces CODECLEANER, an automated code refactoring toolkit designed to mitigate contamination, enabling more reliable performance evaluations for CLMs.

Context

Data contamination occurs when CLMs, trained on vast code repositories, inadvertently include test data, leading to inflated performance metrics. This undermines the credibility of CLMs in real-world applications, posing risks for software companies. Refactoring, a method of restructuring code without altering its functionality, offers a potential solution. However, the lack of automated tools and validated methods has hindered its adoption. CODECLEANER fills this gap by systematically evaluating refactoring operators for Python and Java code, ensuring they reduce contamination without semantic alterations.

Key Features of CODECLEANER

Automated Code Refactoring: CODECLEANER provides a fully automated solution for restructuring code, eliminating the need for manual intervention while preserving original code functionality.

Comprehensive Refactoring Operators: It includes 11 refactoring operators categorised into three distinct types catering to different aspects of code restructuring.

Syntactic Refactoring: Operators such as if-condition flipping, loop transformations, and iteration changes alter code structure without affecting its semantics, offering lightweight syntactic adjustments.

Semantic Refactoring: Advanced operators like identifier renaming and performance measurement decorators disrupt patterns that models memorise, significantly reducing overlap with training data.

Code Style Modifications: Adjustments such as naming style switches (e.g., camel case to snake case) and code normalisation (e.g., consistent formatting) ensure stylistic uniformity while mitigating contamination.

Cross-Language Functionality: While primarily designed for Python, CODECLEANER demonstrates adaptability by implementing selected operators in Java, addressing data contamination in a second language.

Scalable Application: The toolkit works on both small-scale (method-level) and large-scale (class-level) codebases, proving its utility across various levels of complexity.

Open Source and Accessible: CODECLEANER is available online, enabling widespread adoption and further research into mitigating data contamination in CLM evaluations.
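To make the operator categories above more concrete, here is a minimal sketch of two of them, if-condition flipping and identifier renaming, written with Python's standard ast module (ast.unparse needs Python 3.9+). This is not CODECLEANER's implementation: the class names FlipIf and RenameLocals and the v0, v1, … naming scheme are assumptions for illustration, and the sketch ignores edge cases such as name collisions and global/nonlocal declarations.

```python
import ast


class FlipIf(ast.NodeTransformer):
    """Syntactic operator sketch: `if c: A else: B` becomes `if not c: B else: A`."""

    def visit_If(self, node: ast.If) -> ast.If:
        self.generic_visit(node)  # transform nested ifs first
        if node.orelse:  # only flip when there is an else branch to swap in
            node.test = ast.UnaryOp(op=ast.Not(), operand=node.test)
            node.body, node.orelse = node.orelse, node.body
        return node


class RenameLocals(ast.NodeTransformer):
    """Semantic operator sketch: rename parameters and assigned locals to neutral names."""

    def visit_FunctionDef(self, node: ast.FunctionDef) -> ast.FunctionDef:
        local_names = [a.arg for a in node.args.args]
        for sub in ast.walk(node):
            if isinstance(sub, ast.Name) and isinstance(sub.ctx, ast.Store):
                if sub.id not in local_names:
                    local_names.append(sub.id)
        mapping = {old: f"v{i}" for i, old in enumerate(local_names)}
        for a in node.args.args:
            a.arg = mapping[a.arg]
        for sub in ast.walk(node):
            # Only names the function binds are renamed; globals like print stay intact
            if isinstance(sub, ast.Name) and sub.id in mapping:
                sub.id = mapping[sub.id]
        return node


source = """
def classify(score):
    if score >= 50:
        label = "pass"
    else:
        label = "fail"
    return label
"""

tree = ast.parse(source)
tree = FlipIf().visit(tree)
tree = RenameLocals().visit(tree)
refactored = ast.unparse(ast.fix_missing_locations(tree))
print(refactored)
```

The refactored function behaves identically but shares far fewer surface tokens with the original, which is the property the study relies on.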

What This Means for You

This study is particularly valuable for software developers and engineering teams seeking to integrate CLMs into production, researchers aiming to benchmark CLMs accurately, and organisations evaluating AI-based code tools. By addressing data contamination, CODECLEANER enhances the credibility and reliability of CLM-based solutions for real-world applications.

Examining the Details

The researchers evaluated CODECLEANER by applying 11 refactoring operators to Python and Java code at method-, class-, and cross-class levels. Effectiveness was measured using metrics such as N-gram overlap and perplexity across more than 7,000 code snippets sampled from The Stack dataset. Four CLMs, including StarCoder and CodeLlama, were used to assess changes in contamination severity.

Results showed that semantic operators, such as identifier renaming, reduced overlap by up to 39.3%, while applying all operators decreased overlap in Python code by 65%. On larger class-level Python codebases, contamination was reduced by 37%. Application to Java showed modest improvements, with the most effective operator achieving a 17% reduction.
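The paper's exact metric definitions are not reproduced here. As an assumption, the sketch below measures overlap as the share of a snippet's distinct token 4-grams that also appear in a reference snippet, which is enough to show why identifier renaming lowers the score. The function names and sample snippets are hypothetical.

```python
import io
import tokenize


def code_tokens(source: str) -> list[str]:
    """Split Python source into lexical tokens, dropping layout-only tokens."""
    skip = {tokenize.NEWLINE, tokenize.NL, tokenize.INDENT, tokenize.DEDENT,
            tokenize.COMMENT, tokenize.ENDMARKER}
    return [tok.string
            for tok in tokenize.generate_tokens(io.StringIO(source).readline)
            if tok.type not in skip]


def ngrams(tokens: list[str], n: int) -> set[tuple[str, ...]]:
    """Distinct contiguous n-token windows."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def ngram_overlap(candidate: str, reference: str, n: int = 4) -> float:
    """Share of the candidate's distinct n-grams that also occur in the reference."""
    cand = ngrams(code_tokens(candidate), n)
    ref = ngrams(code_tokens(reference), n)
    return len(cand & ref) / len(cand) if cand else 0.0


original = "def add(a, b):\n    total = a + b\n    return total\n"
renamed = "def add(v0, v1):\n    v2 = v0 + v1\n    return v2\n"

print(ngram_overlap(original, original))  # 1.0
print(ngram_overlap(renamed, original))   # 0.0
```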

You can learn more by reading the entire paper and accessing the toolkit here.

🧠 Expert insight💥

Here’s an excerpt from “Chapter 4: Basics of Airflow and DAG Authoring” in Apache Airflow Best Practices by Dylan Intorf, Dylan Storey, and Kendrick van Doorn, published in October 2024.

Extracting images from the NASA API

This pipeline is designed to extract an image every day, store it in a folder, and notify you when it completes. The entire process will be orchestrated by Apache Airflow, which uses its scheduler to re-run the pipeline automatically. As stated earlier, it is helpful to spend time practicing this in Jupyter Notebook or another tool to ensure the API calls and connections work as expected and to troubleshoot any issues.

The NASA API

For this data pipeline, we will be extracting data from NASA. My favorite API is the Astronomy Picture of the Day (APOD), which features a newly selected photo each day. You can easily switch to another API of interest, but for this example, I recommend you stick with APOD and explore others once you are done.

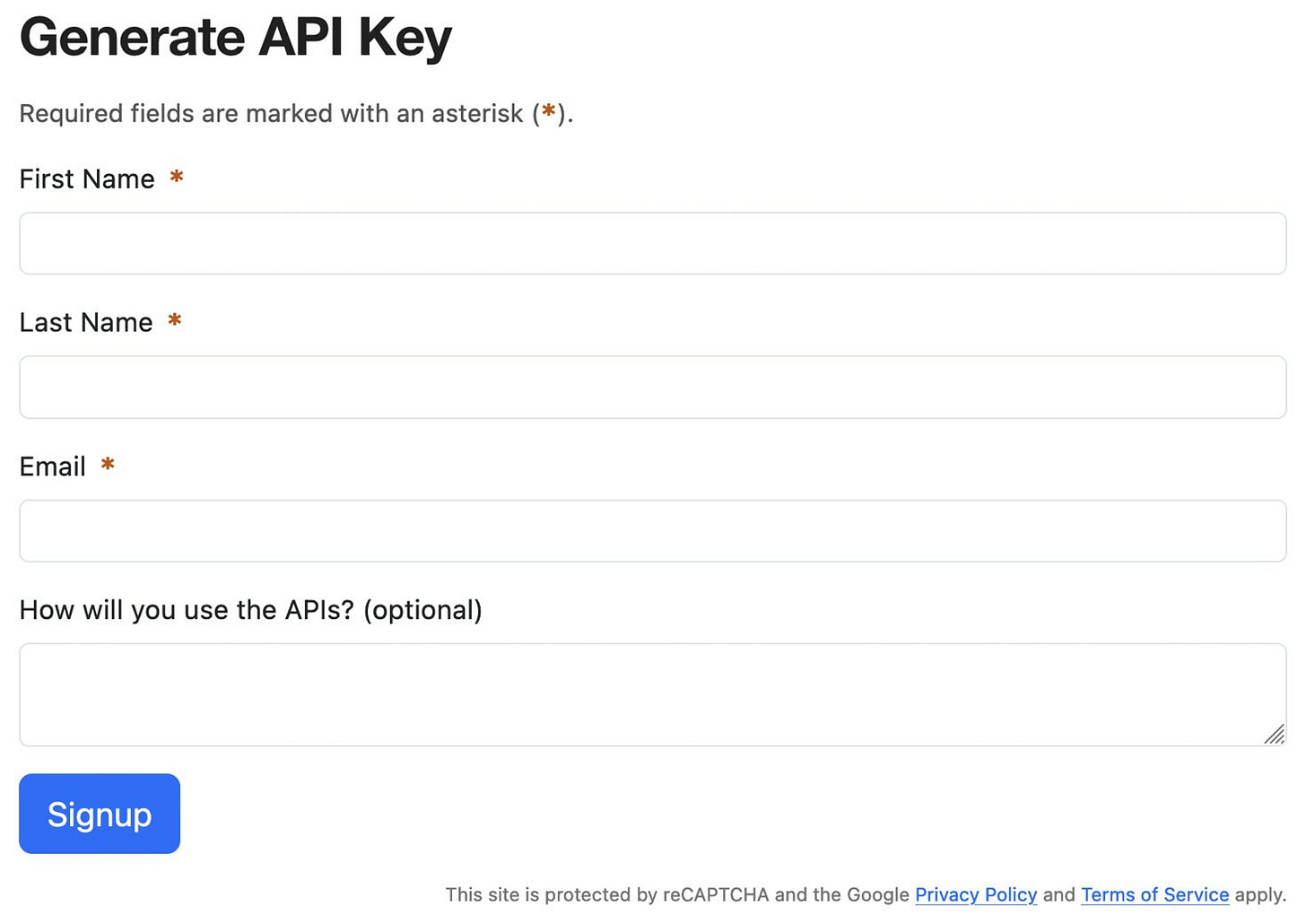

A NASA API key is required to start this next step:

Create a NASA API key (https://api.nasa.gov/).

Input your name, email, and planned functional use of the API.

Navigate to your email to locate the API key information.

Figure 4.3: NASA API Key input screenshot
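The notebook code imports api_key from a local NASA_Keys file, which the excerpt does not show. One possible layout is sketched below; reading from an environment variable (the name NASA_API_KEY is our assumption) keeps the key out of version control, and DEMO_KEY is NASA's shared, heavily rate-limited key that is handy for a first test.

```python
# NASA_Keys.py: a hypothetical layout for the local key file
import os

# Prefer a key supplied through the environment; fall back to NASA's rate-limited DEMO_KEY
api_key = os.environ.get("NASA_API_KEY", "DEMO_KEY")
```

If you instead paste the key directly into this file, consider adding NASA_Keys.py to your .gitignore.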

Building an API request in Jupyter Notebook

With the environment configured and the API key set up, we can begin authoring a DAG to automate this process. As a reminder, most Python code can be tested outside of Airflow first, for example in Jupyter Notebook or locally. If you run into problems, spend time analyzing what the code is doing and debug it before moving on.

In Jupyter Notebook, we are going to use the following code block to represent the function of calling the API, accessing the location of the image, and then storing the image locally. We will keep this example as simple as possible and walk through each step:

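```python
import requests
import json
from datetime import date

from NASA_Keys import api_key  # local file holding your NASA API key

# Build the APOD request URL with the key as a query parameter
url = f'https://api.nasa.gov/planetary/apod?api_key={api_key}'

# Call the API and parse the JSON body into a dictionary
response = requests.get(url).json()

# In a notebook, evaluating the dict on its own line displays its contents
response

# URL of today's high-definition image
today_image = response['hdurl']

# Download the image once and write its bytes to a date-stamped file
r = requests.get(today_image)
with open(f'todays_image_{date.today()}.png', 'wb') as f:
    f.write(r.content)
```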

The preceding code snippet is normally how we recommend starting any pipeline, ensuring that the API is functional, the API key works, and the current network requirements are in place to perform the procedures. It is best to ensure that the network connections are available and that no troubleshooting alongside the information security or networking teams is required.

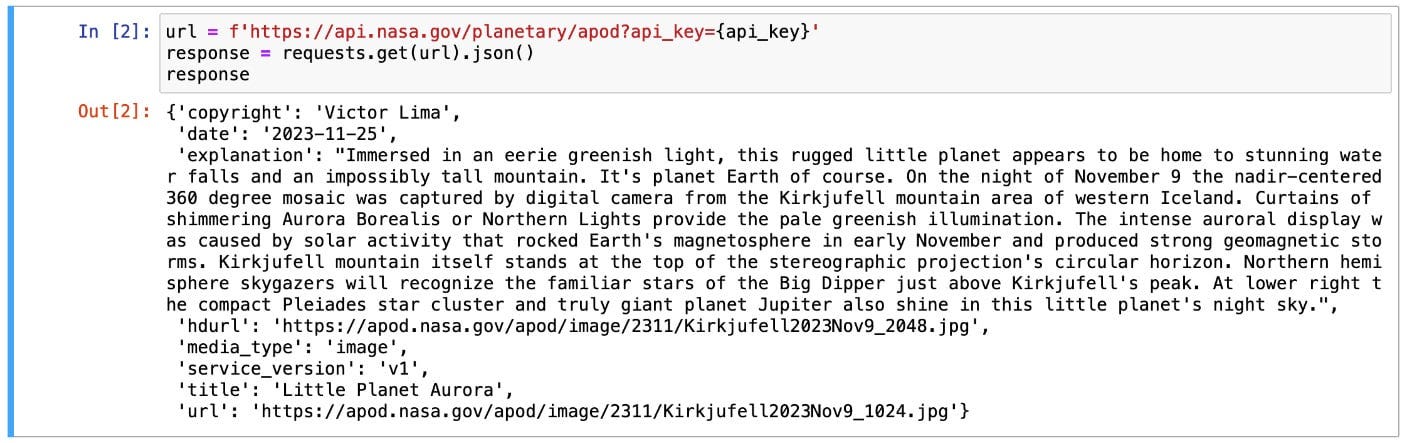

Here is how the code looks in our Jupyter Notebook environment:

We begin by importing the required libraries to support our code. These libraries include the following:

requests: A common Python library for making HTTP requests. It is easy to use, makes working with HTTP requests simple, and allows for straightforward use of the GET and POST methods.

json: This library allows you to parse JSON from strings or files into a dictionary or list.

datetime: This library provides date and time objects, including the current date. We will use it later on to title the image file.

NASA_Keys: This is a local file on our machine holding the api_key variable. It keeps this example as simple as possible and keeps the key itself out of the notebook.

Figure 4.4: What your current Jupyter cell should look like

After importing the required libraries and variables, we construct a variable called url to hold the HTTP request, including our api_key variable. This places the key in the URL without writing the key itself in the notebook; the value is imported as api_key from the NASA_Keys file:

```python
url = f'https://api.nasa.gov/planetary/apod?api_key={api_key}'
```
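An f-string works for a simple key, but letting the standard library encode query parameters avoids surprises if a value ever contains characters such as & or spaces. The sketch below is an alternative, not the book's code; the helper name build_apod_url is hypothetical.

```python
from urllib.parse import urlencode

APOD_ENDPOINT = "https://api.nasa.gov/planetary/apod"


def build_apod_url(api_key: str) -> str:
    """Return the APOD request URL with the key safely encoded as a query parameter."""
    return f"{APOD_ENDPOINT}?{urlencode({'api_key': api_key})}"


print(build_apod_url("DEMO_KEY"))
# https://api.nasa.gov/planetary/apod?api_key=DEMO_KEY
```

With requests specifically, you can get the same effect by passing the parameters separately: requests.get(APOD_ENDPOINT, params={"api_key": api_key}).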

Next, we use the requests library to perform an HTTP GET request on the URL that we have created. This asks the API to send information for our program to interpret. We then parse the JSON body of the response into a Python dictionary with .json(). To understand the information being sent back, we display the response and look at how the dictionary is structured. The dictionary appears to have a single level of key-value pairs, including copyright, date, explanation, hdurl, media_type, service_version, title, and url:

Figure 4.5: Response from the NASA API call

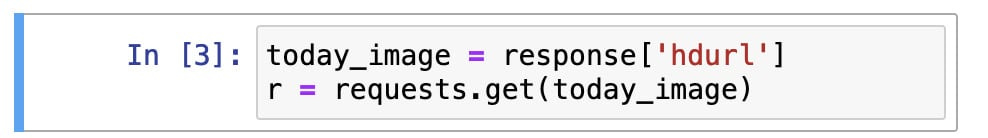

In the next step, we will use the hdurl key to access the URL of the high-definition astronomy image of the day. Since I am an enthusiast and want the highest quality image available, I have decided that the highest definition meets my user needs. This is a good moment to determine whether your users need the highest quality available or whether a product with a lower cost or smaller storage requirements would meet their needs.

We store response['hdurl'] in the today_image variable so that the next step can use this URL to download and store the image. Keeping hdurl in its own variable also makes the string easy to work with in that step:
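Indexing response['hdurl'] directly raises a KeyError when the key is absent. APOD occasionally features a video instead of an image (the media_type key reports which), and we assume such days provide no hdurl to download. A defensive variant might look like the sketch below; the helper name pick_image_url and the sample dictionaries are hypothetical.

```python
from typing import Optional


def pick_image_url(apod: dict) -> Optional[str]:
    """Return the best image URL from an APOD response dict, or None on non-image days."""
    if apod.get("media_type") != "image":
        return None  # e.g. video days, where there is no image to save
    # Prefer the high-definition version; fall back to the standard-resolution url
    return apod.get("hdurl") or apod.get("url")


# Hypothetical, trimmed-down responses for illustration
image_day = {"media_type": "image",
             "url": "https://example.com/small.jpg",
             "hdurl": "https://example.com/large.jpg"}
video_day = {"media_type": "video", "url": "https://example.com/embed"}

print(pick_image_url(image_day))  # https://example.com/large.jpg
print(pick_image_url(video_day))  # None
```

In an automated pipeline, a None result would be a natural signal to skip the download for that day rather than fail the run.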

Figure 4.6: Saving the hdurl response in a variable

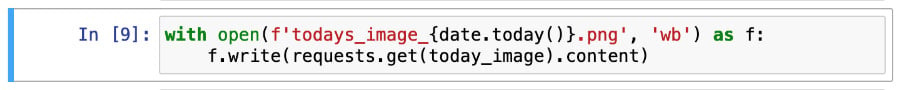

In the last step, we download the image at hdurl and append date.today() to the file name so that each day's image gets a new name. This way, an image from yesterday does not share a name with an image from today, which reduces the risk of overwrites. There are additional ways to reduce the risk of overwrites, especially when building an automated system, but this was chosen as the simplest option for our needs:
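Two small refinements are worth considering once this runs unattended: taking the file extension from the image URL instead of hardcoding .png, and refusing to overwrite an existing file. The sketch below shows both; the helper names, the .jpg fallback, and the example URL are our assumptions. Opening a file in "xb" mode raises FileExistsError instead of overwriting.

```python
import os
import tempfile
from datetime import date
from pathlib import PurePosixPath
from urllib.parse import urlparse


def image_filename(image_url: str, day: date, prefix: str = "todays_image") -> str:
    """Build a date-stamped file name, keeping the extension from the image URL."""
    suffix = PurePosixPath(urlparse(image_url).path).suffix or ".jpg"  # fallback is an assumption
    return f"{prefix}_{day.isoformat()}{suffix}"


def save_bytes(path: str, data: bytes) -> bool:
    """Write data only if the file does not exist yet; return False if it already did."""
    try:
        with open(path, "xb") as f:  # "x" mode fails rather than overwriting
            f.write(data)
        return True
    except FileExistsError:
        return False


name = image_filename("https://apod.nasa.gov/apod/image/2411/example.jpg", date(2024, 11, 20))
print(name)  # todays_image_2024-11-20.jpg

with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, name)
    first = save_bytes(target, b"image bytes")
    second = save_bytes(target, b"image bytes")
print(first, second)  # True False
```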

Figure 4.7: Writing the image content to a local file

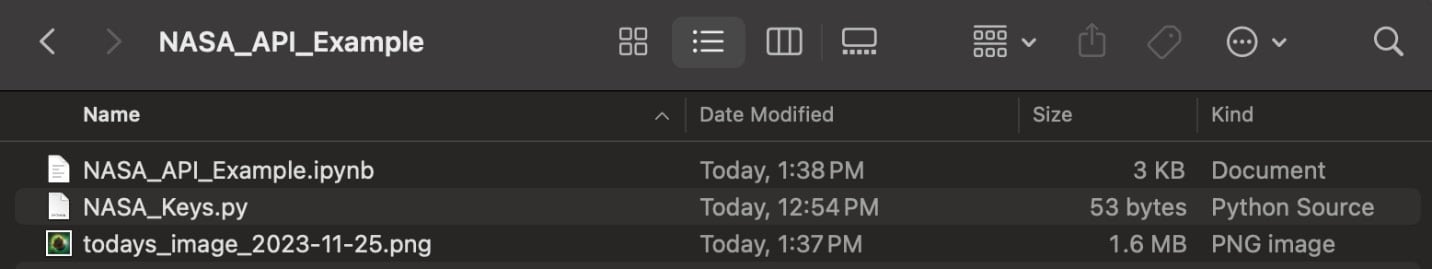

Finally, we can look in the local repository or folder and find that the image was stored there:

Figure 4.8: The image file we saved in the local repository or folder

This walk-through in Jupyter Notebook may seem excessive, but taking the time to ensure the API is working, and thinking through the logic of the steps that need to be automated or repeated, can be extremely beneficial when you move on to creating the Airflow DAG.
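Once the notebook version works, one way to prepare it for Airflow is to split it into small functions that a DAG can later call as separate tasks. The sketch below is our own restructuring, not the book's DAG code: the function names extract and load, the timeouts, and the injectable get_json/get_bytes parameters (which let you test the logic without network access) are assumptions. The params=, timeout=, and raise_for_status() features it uses are standard parts of requests.

```python
import tempfile
from datetime import date
from pathlib import Path
from typing import Callable

APOD_URL = "https://api.nasa.gov/planetary/apod"


def _requests_get_json(url: str, params: dict) -> dict:
    import requests  # imported lazily so the logic can be tested without requests installed
    resp = requests.get(url, params=params, timeout=30)
    resp.raise_for_status()  # surface HTTP errors instead of parsing an error page
    return resp.json()


def _requests_get_bytes(url: str) -> bytes:
    import requests
    resp = requests.get(url, timeout=60)
    resp.raise_for_status()
    return resp.content


def extract(api_key: str,
            get_json: Callable[[str, dict], dict] = _requests_get_json) -> dict:
    """Step 1: ask the APOD endpoint for today's metadata."""
    return get_json(APOD_URL, {"api_key": api_key})


def load(metadata: dict, out_dir: Path, day: date,
         get_bytes: Callable[[str], bytes] = _requests_get_bytes) -> Path:
    """Step 2: download the HD image once and store it under a date-stamped name."""
    target = out_dir / f"todays_image_{day.isoformat()}.png"
    target.write_bytes(get_bytes(metadata["hdurl"]))
    return target


# Offline check with fake fetchers standing in for the real HTTP calls
fake_meta = {"hdurl": "https://example.com/hd.jpg", "media_type": "image"}
with tempfile.TemporaryDirectory() as tmp:
    meta = extract("DEMO_KEY", get_json=lambda url, params: fake_meta)
    saved = load(meta, Path(tmp), date(2024, 11, 20), get_bytes=lambda url: b"fake image bytes")
    saved_name = saved.name
    saved_bytes = saved.read_bytes()

print(saved_name)  # todays_image_2024-11-20.png
```

Keeping each step as a plain function means the same code can be exercised in the notebook, in unit tests, and later inside Airflow tasks.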

Apache Airflow Best Practices was published in October 2024.

Get the eBook for $24.99 (regular price $35.99)

And that’s a wrap.

We have an entire range of newsletters with focused content for tech pros. Subscribe to the ones you find the most useful here. The complete PythonPro archives can be found here.

If you have any suggestions or feedback, or would like us to find you a Python learning resource on a particular subject, just leave a comment below!