PythonPro 🎄#59🥂: Training AI Models, YOLO Library Breach, and GPT for Scientific LaTeX

@{mv_date_MMM d, yyyy}@

Hi @{First Name}@,

Welcome to our very last issue for the year 2024! We will be taking our usual year-end break and will return on the 14th of January 2025. In the meantime, do keep an eye out: I’ve heard 🤫 some irresistible deals from Packt will be coming your way soon! Now let’s get to it.

In today’s Expert Insight we bring you an excerpt from the recently published book, AI Product Manager's Handbook, which discusses the process of training AI models for market readiness.

News Highlights: Ultralytics library, used for YOLO, hit by a GitHub Actions supply-chain attack; and python-build-standalone transitions to Astral for continued development.

My top 5 picks from today’s learning resources:

And today’s Featured Study delves into techniques and challenges in making AI models interpretable, emphasizing XAI's role in ethical and high-stakes applications like healthcare and finance.

Stay awesome!

And of course, as Charles Dickens wrote, may you feel "light as a feather🪶, ... happy as an angel😇, ... merry as a schoolboy🕺... A merry Christmas 🎄 to everybody! A happy New Year🥂 to all the world!"

Divya Anne Selvaraj

Editor-in-Chief

🐍 Python in the Tech 💻 Jungle 🌳

🗞️News

Popular Python AI library Ultralytics compromised with a crypto miner: The library, used for YOLO object detection, was compromised through a supply-chain attack exploiting GitHub Actions.

A new home for python-build-standalone: The project, initially developed by Gregory Szorc, which addresses challenges like dependency linking and slow source builds, will now be taken up by Astral.

💼Case Studies and Experiments🔬

From Code to Paper: Using GPT Models and Python to Generate Scientific LaTeX Documents: Explores the feasibility of using GPT models to automate the generation of structured LaTeX documents from Python algorithms, serving as a proof-of-concept.

The Black-Litterman Model: A smart integration of our market view: Explores the theoretical foundation, practical application, and benefits of the Black-Litterman model in portfolio optimization.

📊Analysis

Expression vs Statement in Python: What's the Difference?: Examines their differences through examples, including lambdas, assertions, and compound statements, while addressing practical relevance in day-to-day programming.

Typed Python in 2024: Well adopted, yet usability challenges persist: According to JetBrains, Meta, and Microsoft's survey on the state of Python typing, 88% of respondents frequently use types for benefits like enhanced IDE support, bug prevention, and better documentation.

🎓 Tutorials and Guides 🤓

When a Duck Calls Out • On Duck Typing and Callables in Python: Demonstrates how callables (objects with a `__call__()` method) can enable flexible and polymorphic code by focusing on behavior over inheritance.

Socket Programming in Python (Guide): Includes examples such as echo servers, multi-connection setups, and application-level protocols for real-world applications.
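The callables entry above is easy to try out. Here is a minimal sketch of our own (not code from the linked article); `apply_all`, `Multiplier`, and `add_one` are hypothetical names. The function accepts anything callable: a plain function, a lambda, a builtin, or an instance of a class that defines `__call__()`.

```python
from typing import Callable


class Multiplier:
    """Instances are callable because the class defines __call__()."""

    def __init__(self, factor: float) -> None:
        self.factor = factor

    def __call__(self, value: float) -> float:
        return value * self.factor


def apply_all(funcs: list[Callable[[float], float]], value: float) -> list[float]:
    # Duck typing: we never check types, we just call each item with one argument.
    return [f(value) for f in funcs]


def add_one(x: float) -> float:
    return x + 1


results = apply_all([add_one, Multiplier(3), lambda x: x ** 2, abs], -2)
print(results)  # [-1, -6, 4, 2]
```

No base class or interface is required: `apply_all` only cares that each item behaves like a one-argument function.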

Expanding HUMS: Integrating Multi-Sensor Monitoring: Demonstrates expanding a Health and Usage Monitoring System (HUMS) by integrating multiple sensors and using FPGA-based tools for scalable data logging, clock synchronization, and enhanced monitoring capabilities.

Asynchronous Tasks With Django and Celery: Covers setting up Celery with Redis as a message broker, configuring tasks, and executing them independently from the main app flow.

Customising Pattern Matching Behaviour: Provides practical examples, including handling the end of iteration and publishing the approach as the `pattern-utils` library for extended functionality.

How to Round Numbers in Python: Covers advanced rounding with the `decimal` module, NumPy, and pandas, enabling precise control over data manipulation for specific tasks.

PydanticAI: Pydantic AI Agent Framework for LLMs: Demonstrates how PydanticAI enables structured outputs, enforces type safety, and integrates seamlessly with LLMs for creating AI agents, using practical examples.
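Why does the rounding guide above reach for the `decimal` module at all? A quick standard-library sketch: built-in `round()` rounds halves to the nearest even number, and binary floats can't store a value like 2.675 exactly, while `Decimal.quantize()` with an explicit rounding mode gives the result many people expect. The value 2.675 is just an illustrative example.

```python
from decimal import Decimal, ROUND_HALF_UP

# Built-in round() uses round-half-to-even ("banker's rounding").
print(round(2.5))  # 2
print(round(3.5))  # 4

# 2.675 is stored as a binary float slightly below 2.675, so it rounds down.
print(round(2.675, 2))  # 2.67

# Building the Decimal from a string keeps the exact decimal value,
# and quantize() applies the rounding mode we ask for.
price = Decimal("2.675")
rounded = price.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
print(rounded)  # 2.68
```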

Linear Regression in Python: Demonstrates implementing simple, multiple, and polynomial regression in Python using libraries like scikit-learn and statsmodels, covering key concepts such as underfitting and overfitting.

🔑Best Practices and Advice🔏

Disposable environments for ad-hoc analyses: Introduces the `juv` package, which embeds Python dependencies directly within Jupyter notebooks, eliminating the need for external environment files and enhancing reproducibility.

Effective Python Testing With pytest: Introduces pytest, a Python testing framework, covering features like fixtures for managing dependencies, test parametrization to reduce redundancy, and detailed failure reports.

Django Signals: [Not] the Evil Incarnate You Think: Demystifies Django Signals, demonstrating how to transition from tightly coupled models to a signal-based architecture using custom signals and message data classes.

Negative Testing in Python Web Applications with pytest: Demonstrates testing invalid inputs, malformed requests, database failures, and rate limiting, alongside best practices like isolating scenarios, mocking dependencies, and ensuring comprehensive coverage.

Python for Security Engineers: Focuses on foundational skills like working with APIs (via the `requests` library), processing data formats (JSON, CSV, XML), file operations, writing detections, and building simple CLI tools and Flask apps.

🔍Featured Study: Explainable AI💥

In the paper, "A Comprehensive Guide to Explainable AI: From Classical Models to LLMs", Hsieh et al. explore the challenges and solutions in making machine learning (ML) models interpretable. The paper's goal is to provide a foundational understanding of explainable AI (XAI) techniques for researchers and practitioners.

Context

XAI aims to make AI systems transparent and understandable. Classical models, such as decision trees and linear regression, are naturally interpretable, whereas deep learning and LLMs are often considered "black-box" systems. This lack of transparency raises concerns in high-stakes applications, such as healthcare, finance, and policymaking, where accountability and fairness are critical. Tools like SHAP (Shapley Additive Explanations), LIME (Local Interpretable Model-agnostic Explanations), and Grad-CAM provide explanations for complex AI models. The study’s relevance lies in addressing the pressing need for trust and transparency in AI, particularly in ethical and regulatory contexts.
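SHAP builds on Shapley values from game theory: a feature's contribution is its weighted average marginal effect on the prediction across all subsets (coalitions) of the other features. For a model with only a few features you can compute this exactly by brute force, as in this minimal sketch. It is not the `shap` library's API or the paper's code; `shapley_values` and `toy_model` are hypothetical, and replacing "absent" features with a fixed baseline is one assumption among several used in practice. Real tools approximate this because the exact computation grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> list[float]:
    """Exact Shapley values for one prediction, by enumerating every coalition.

    Assumption: a feature absent from a coalition takes its baseline value.
    The number of model calls grows exponentially with the number of features.
    """
    n = len(x)

    def value(coalition: set[int]) -> float:
        # Features in the coalition keep their real value; the rest use the baseline.
        blended = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(blended)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(len(others) + 1):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


# A toy model with an interaction term between features 0 and 1.
def toy_model(v: Sequence[float]) -> float:
    return 2.0 * v[0] + 3.0 * v[1] + v[0] * v[1] - v[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phis = shapley_values(toy_model, x, baseline)
print(phis)
# Efficiency property: contributions sum to f(x) - f(baseline).
print(sum(phis), toy_model(x) - toy_model(baseline))
```

For this toy model the linear terms contribute 2, 6, and -3, and the interaction term's 2 is split evenly between features 0 and 1, so the values come out at roughly [3, 7, -3] and sum to f(x) - f(baseline) = 7.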

Key Recommendations

Enhance Explainability for Complex Models: Future research should focus on improving methods for understanding the internal mechanisms of Large Language Models (LLMs) using techniques like fine-grained attention visualisation and probing methods.

Combine Interpretability Approaches: Hybrid models that integrate intrinsic interpretability (e.g., Decision Trees) with post-hoc explanation tools like SHAP and LIME are recommended to balance accuracy and comprehensibility.

Promote User Interaction for Better Explanations: Develop interactive, human-in-the-loop systems to allow users to query and customise model explanations, fostering trust and usability.

Address Domain-Specific Needs: In fields like healthcare and finance, tailor explainability methods to meet high-stakes requirements. For instance, real-time explanations are crucial for clinicians using diagnostic models.

Integrate Legal Compliance: XAI methods must align with regulations like GDPR's "right to explanation" by offering clear and defensible explanations for automated decisions.

Develop Standards for Interpretability Evaluation: Address the lack of standardised metrics by creating frameworks that combine quantitative and qualitative evaluation methods to ensure reliable assessments of explainability.

Balance Transparency and Privacy: Techniques that enhance model transparency must also protect sensitive training data, particularly in models trained on personal datasets, to avoid privacy breaches.

What This Means for You

This study is useful for AI practitioners, data scientists, and decision-makers in fields like healthcare, finance, and policymaking. It provides practical tools and techniques, including SHAP and Grad-CAM, for improving model transparency and trust. The included Python code examples and resources enable direct application to real-world projects, making it a valuable guide for integrating explainability into high-stakes AI systems.

Examining the Details

The paper uses case studies in healthcare, finance, and policymaking to highlight practical uses of XAI.

You can learn more by reading the entire paper or accessing its code on GitHub.

🧠 Expert insight💥

Here’s an excerpt from “Chapter 2: Model Development and Maintenance for AI Products” in the book, AI Product Manager's Handbook by Irene Bratsis.

Training – when is a model ready for market?

In this section, we will explore the standard process for gathering data to train a model and tune hyperparameters optimally to achieve a certain level of performance and optimization. In the Implementation phase (step 4 of the NPD process), we’re looking for a level of performance that would be considered optimal based on the Define phase (step 2 of the NPD process) before we move to the next phase of Marketing and crafting our message for what success looks like when using our product. A lot must happen in the Implementation phase before we can do that. Some of the key considerations are as follows:

Data accessibility is the most important factor when it comes to AI/ML products. At first, you might have to start with third-party data, which you’ll have to purchase, or public data that’s freely available or easily scraped. This is why you’ll likely want or need to partner with a few potential customers. Partnering with customers you can trust to stick with you and help you build a product that can be successful with real-world data is crucial to ending up with a product that’s ready for market. The last thing you want is to create a product based on pristine third-party datasets or free ones, only for it to become overfitted to that data and perform poorly with real-world data coming from your customers that it’s never seen before.

Having a wide variety of data is important here, so in addition to making sure it’s real-world data, you also need to make sure that your data is representative of many types of users. Unless your product caters to very specific user demographics, you’re going to want to have a model trained on data that’s as varied as possible for good model performance as well as good usability ethics. There will be more on that in the final section.

The next key concept to keep in mind with regard to training ML models is minimizing the loss function. While training data is key, your loss function measures how far off the mark your model’s predictions are. The process of training is exactly that: using data and adjusting your model to optimize how correctly it predicts an output. The more incorrect it is, the higher your loss; the more correct it is, the more you’ve minimized your loss function. The more your machine learns (and practices), the better its chances of good performance.
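To make "minimizing the loss function" concrete, here’s a minimal sketch under simple assumptions: a one-feature linear model, mean squared error as the loss, and plain gradient descent as the optimizer. These are common choices rather than the book’s; the data (generated from y = 2x + 1) and the learning rate are illustrative.

```python
def mse(w: float, b: float, xs: list[float], ys: list[float]) -> float:
    """Mean squared error of the line y = w*x + b: larger when predictions are further off."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(xs: list[float], ys: list[float], lr: float = 0.05, epochs: int = 2000) -> tuple[float, float]:
    """Fit w and b by gradient descent on the MSE."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Partial derivatives of the MSE with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient, which lowers the loss for a small enough lr.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1, no noise
print("loss before training:", mse(0.0, 0.0, xs, ys))  # 33.0
w, b = train(xs, ys)
print("loss after training:", mse(w, b, xs, ys))
print("learned w, b:", round(w, 3), round(b, 3))  # 2.0 1.0
```

Each step nudges `w` and `b` against the gradient, so the loss falls from 33.0 toward zero as the parameters approach the slope and intercept used to generate the data.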

Iterative hyperparameter tuning will also be hugely important as you continuously retrain your models for performance. One of the tools you have at your disposal, apart from changing/improving your training data, is adjusting the hyperparameters of your model. Note that not all models have hyperparameters to tune, but most do. While models like linear regression do have coefficients that change, those coefficients are learned from the data during training rather than set at the discretion of the engineer. In contrast, deep learning models tend to have the most hyperparameters, and tuning them is a big part of their training process.

The performance metrics and benchmarks in the Define phase (step 2 of the NPD process) will inform how your ML engineers will go about tuning their hyperparameters. Most of the time, we don’t yet know what the optimal model architecture for a certain use case is. We want to explore how a model functions with various datasets and start somewhere so that we can see which hyperparameters give us superior performance.

Examples of what hyperparameters control include the degree of polynomial features that should be used in a linear model, the maximum depth that should be allowed for a decision tree model, how many trees should be included in a random forest model, or how many layers a neural network should have and how many neurons each layer should contain. In all these cases, we’re looking at the external settings of the model itself, and all these settings are worthy of scrutiny based on the model performance they produce. Having competent AI/ML engineers who are comfortable with navigating these shifts in performance will be important in creating a product that’s set up for success.
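A simple way to explore hyperparameters like these is an exhaustive grid search: try every combination and keep the best-scoring one. This sketch shows only that structure. `grid_search` is a hypothetical helper, and `validation_score` is a made-up stand-in for "train the model with these settings and score it on held-out data", deliberately peaked at `max_depth=6` and `n_estimators=200` so the result is easy to check. In a real project, scikit-learn’s `GridSearchCV` performs this search with cross-validation.

```python
from itertools import product
from typing import Any, Callable


def grid_search(score_fn: Callable[..., float], grid: dict[str, list[Any]]) -> tuple[float, dict[str, Any]]:
    """Evaluate score_fn on every combination in the grid; return (best_score, best_params)."""
    names = list(grid)
    best_score, best_params = float("-inf"), {}
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_score, best_params = score, params
    return best_score, best_params


# Hypothetical stand-in for "train a random forest with these settings and score it
# on validation data"; a real version would fit and evaluate an actual model.
def validation_score(max_depth: int, n_estimators: int) -> float:
    return 0.9 - 0.01 * (max_depth - 6) ** 2 - 1e-6 * (n_estimators - 200) ** 2


grid = {"max_depth": [2, 4, 6, 8], "n_estimators": [50, 100, 200, 400]}
best_score, best_params = grid_search(validation_score, grid)
print(best_params, best_score)  # {'max_depth': 6, 'n_estimators': 200} 0.9
```

The cost grows multiplicatively with each added hyperparameter (16 evaluations here), which is why engineers often narrow the grid or switch to random search as models get bigger.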

We want to go into some applied examples of models and their comparisons to give product managers out there who are unfamiliar with AI/ML performance benchmarks a sense of how you can go about evaluating whether one model is better than another. The following are a few examples of performance metrics that your ML engineers will look at as they evaluate whether or not they’re using optimal models. Note that not using optimal models could come with significant engineering and financial costs from the need to correct mistakes, including time and computational resources to redevelop and retrain your models.

You’ll notice some of the names are familiar from our previous list of model types:

Note:

These comparisons were done on a personal project, which was a model we had created to predict the price of Ether, a form of cryptocurrency. If you’d like to see the entire project outlined, you can do so here.

The first model we wanted to use was an ordinary least squares (OLS) regression model because it is the most straightforward of the linear regression models and would give us a good baseline before we approached other model types.

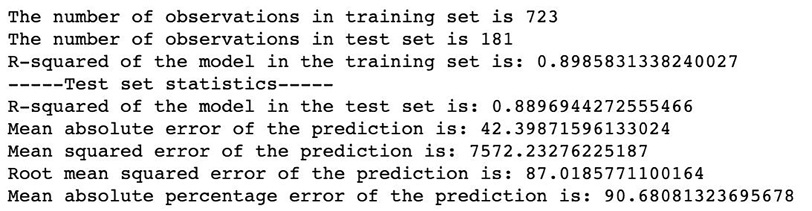

The results of the OLS regression model are as follows:

Figure 2.2 – OLS regression model results

In Chapter 1, we discussed the notion of performance metrics for ML models and how to track them. There are a number of metrics that are automatically generated when you train a model. In the example above, we see what the full list of available metrics looks like when you run a model. For our comparison, we will focus on the R-squared of the model on the test set line in Figure 2.2, since it gives us a measure of fit that’s comparable between models. The R-squared metric is also referred to as the “coefficient of determination”, and we use it so often in regression models because it measures the proportion of the variance in the target that the fitted regression line explains, which tells us how closely the data lies to that line. With the preceding OLS regression model, we see an R-squared of 0.889 for the test set using an 80/20 split of the data: we used 80% of the data for training and the remaining 20% for testing.
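For readers who want to see what an 80/20 split and R-squared boil down to, here’s a dependency-free sketch. It is not the author’s Ether project code; `train_test_split` and `r_squared` are our own illustrative helpers (scikit-learn ships tested equivalents, `sklearn.model_selection.train_test_split` and `sklearn.metrics.r2_score`). R-squared is computed as 1 - SS_res / SS_tot, so 1.0 means perfect predictions and 0.0 means no better than always predicting the mean.

```python
import random


def train_test_split(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of rows, then hold out test_fraction of them for testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    cut = len(shuffled) - n_test
    return shuffled[:cut], shuffled[cut:]


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))  # unexplained variation
    ss_tot = sum((a - mean) ** 2 for a in actual)  # total variation around the mean
    return 1 - ss_res / ss_tot


train, test = train_test_split(range(100))
print(len(train), len(test))  # 80 20

actual = [3.0, 5.0, 7.0, 9.0]
print(r_squared(actual, actual))  # 1.0: perfect predictions
print(r_squared(actual, [6.0] * 4))  # 0.0: no better than predicting the mean
print(r_squared(actual, [3.5, 4.5, 7.5, 8.5]))  # close, but not perfect
```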

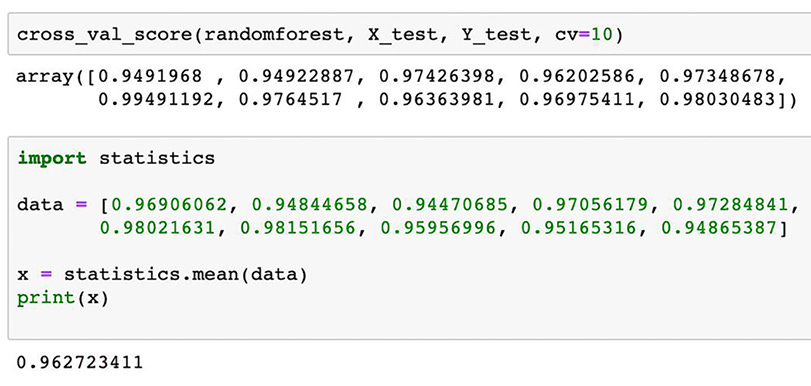

The next model we tested was a random forest to compare results with a tree-based model. One of our hyperparameters for this random forest example was setting our cross-validation to

10so that it would run through the training 10 times and produce an average of those 10 iterations as a final score. That average was an R-squared of 0.963, higher than our OLS model!

The results of the random forest model are as follows:

Figure 2.3 – Random forest model results
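Here’s a minimal sketch of 10-fold cross-validation under stated assumptions: to stay dependency-free it swaps the random forest for a one-feature OLS line (`fit_line`), uses synthetic data, and every helper name is hypothetical. Each fold takes a turn as the held-out set, and the final score is the average of the 10 held-out R-squared values, mirroring the averaging described above.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return slope, my - slope * mx


def r_squared(actual, predicted):
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


def k_fold_scores(xs, ys, k=10, seed=0):
    """Train on k-1 folds and score on the held-out fold, once per fold."""
    indices = list(range(len(xs)))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k roughly equal, disjoint folds
    scores = []
    for held_out in folds:
        held = set(held_out)
        train = [i for i in indices if i not in held]
        slope, intercept = fit_line([xs[i] for i in train], [ys[i] for i in train])
        preds = [slope * xs[i] + intercept for i in held_out]
        scores.append(r_squared([ys[i] for i in held_out], preds))
    return scores


rng = random.Random(1)
xs = [float(x) for x in range(100)]
ys = [2 * x + 1 + rng.gauss(0, 5) for x in xs]  # linear signal plus noise
scores = k_fold_scores(xs, ys, k=10)
print(len(scores), round(sum(scores) / len(scores), 3))
```

Averaging over folds means every data point is used for testing exactly once, which gives a steadier estimate than a single 80/20 split.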

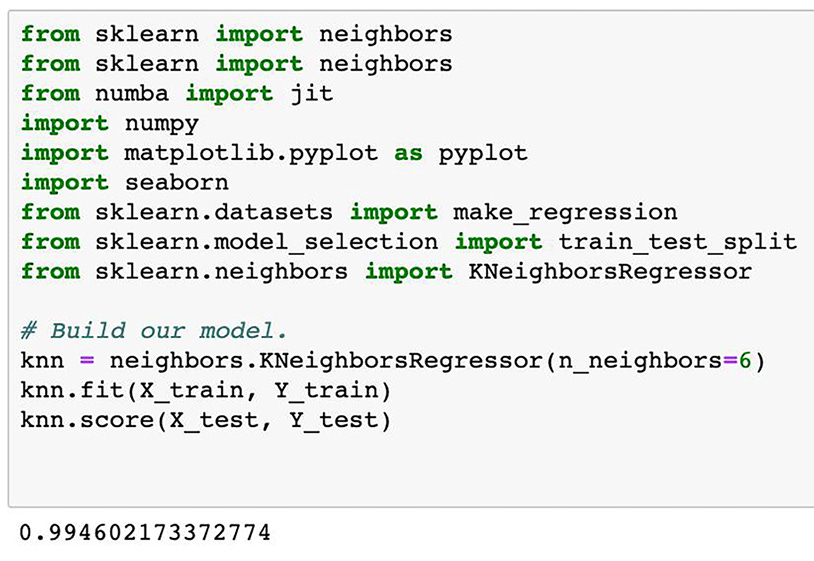

Finally, the last comparison was with our KNN model, which produced a score of 0.994. The hyperparameter we chose in this model was 6, which means we are looking for a group of 6 neighbors for each grouping. This KNN model gives us our best performance because we’re ideally looking for the closest we can get to a perfect score of 1. However, we must keep this in mind with a caveat: although you are looking to get as close as you can to 1, the closer you get to 1, the more suspicious you should be of your model. For instance, the results of the KNN model are as follows:

Figure 2.4 – KNN model results

Though it may seem counterintuitive, getting this high a score likely means that our model is not working well at all, or that it’s working especially well on the training data but won’t perform as well on new datasets. The model is trying to get as close as it can to 1, but getting too close is suspicious, because we always expect a model to be imperfect; there will always be some loss. When models perform exceedingly well with training data and get high scores, it could just mean that the model was calibrated to that data sample and that it won’t perform as well with a new data sample.
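The warning about near-perfect scores is easy to reproduce. In this sketch (synthetic noisy data, a hand-rolled one-dimensional KNN regressor, and hypothetical helper names, none of it from the author’s project), k = 1 scores a perfect training R-squared because every training point is its own nearest neighbor, yet its validation score falls short of that. Comparing it with k = 6 shows how a smoother setting trades a lower training score for less memorization.

```python
import random


def knn_predict(train_x, train_y, query, k):
    """1-D KNN regression: average the targets of the k training points nearest to query."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - query))[:k]
    return sum(train_y[i] for i in nearest) / k


def r_squared(actual, predicted):
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


rng = random.Random(0)
xs = [rng.uniform(0, 10) for _ in range(200)]
ys = [x ** 0.5 + rng.gauss(0, 0.3) for x in xs]  # smooth signal plus noise
train_x, train_y = xs[:160], ys[:160]  # 80/20 split (points are already random)
val_x, val_y = xs[160:], ys[160:]

scores = {}
for k in (1, 6):
    train_r2 = r_squared(train_y, [knn_predict(train_x, train_y, x, k) for x in train_x])
    val_r2 = r_squared(val_y, [knn_predict(train_x, train_y, x, k) for x in val_x])
    scores[k] = (train_r2, val_r2)
    print(f"k={k}: training R^2 = {train_r2:.3f}, validation R^2 = {val_r2:.3f}")
```

The gap between the training and validation scores, not the training score alone, is what tells you whether a model has memorized its data.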

This phenomenon is called overfitting and it’s a big topic of conversation in data science and ML circles. The reason for this is that, fundamentally, all models are flawed and are not to be trusted until you’ve done your due diligence in selecting the best model. This game of choosing the right model, training it, and releasing it into the wild must be done under intense supervision. This is especially true if you’re charging for a product or service and attempting to win the confidence of customers who will be vouching for you and your products someday. If you’re an AI/ML product manager, you should look for good performance that gets better and better incrementally with time, and you should be highly suspicious of excellent model performance from the get-go. I’ve had an experience where model performance during training was taken for granted and it wasn’t until we had already sold a contract to a client company that we realized the model performed terribly when applied to the client’s real-world data. As a result, we had to go back to the drawing board and retrain a new model to get the performance we were looking for before deploying that model into our client’s workflows.

A quick note on neural networks: while training generative AI models will be a bit different considering the subject matter and purpose of your model, it will follow a similar process. You’re still going to put a premium on a clean and diverse data sample, you’re still going to be thoughtful about which neural network will work best for the performance you want, and you’re still going to need to account for (and optimize on) your loss function to the best of your ability. This process will continue through various loops of training and validating until you feel confident enough that your generative AI model will be able to generate new outputs based on the training examples you’ve given it. Your goal of tweaking hyperparameters for performance, minimizing loss where you can, and amassing enough data to set your model up for success remains the same as it does for other ML models.

Once you have comprehensive, representative data that you’re training your models on, and you’ve trained those models enough times and adjusted those models accordingly to get the performance you’re seeking (and promising to customers), you’re ready to move forward!

AI Product Manager's Handbook was published in November 2024.

Get the eBook for $27.98 (regular price $39.99)

And that’s a wrap.

We have an entire range of newsletters with focused content for tech pros. Subscribe to the ones you find the most useful here. The complete PythonPro archives can be found here.

If you have any suggestions or feedback, or would like us to find you a Python learning resource on a particular subject, just respond to this email! See you next year!